Smartphones have multiple cameras for a reason. For example, a telephoto lens for long-focus shots, or a wide-angle camera for better landscape shots.

But with a market that hungers for innovation, smartphone makers have been ramping up their efforts to improve existing technologies and introduce new ones as they see fit.

In the camera sector, one of these technologies is the Time-of-Flight camera.

Also referred to as 'ToF', it consists of two core parts: an illuminator that floods the camera's field of view with light of a specific wavelength, and the camera itself, which receives the reflected light.

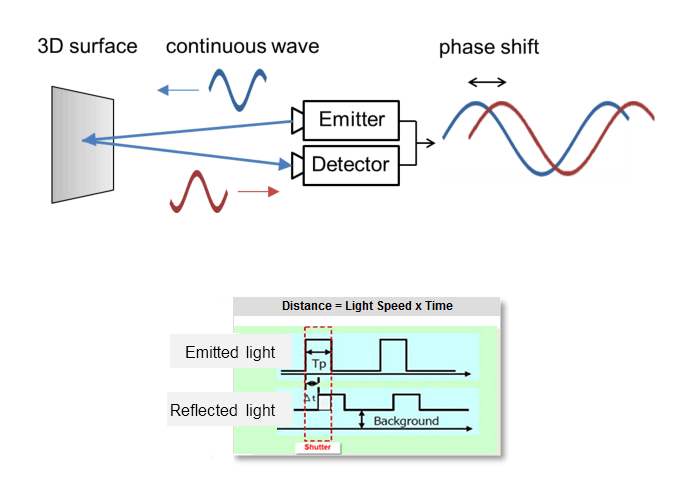

Using near-infrared light, which is invisible to the human eye, ToF sends light from the illuminator. The light bounces off the various surfaces in a scene and travels back to the receiving sensor. By analyzing the phase of the returning light wave, the ToF camera's paired processor can determine how long the light took to bounce back.
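
To make the phase idea concrete, here is a minimal sketch. It assumes a continuous-wave sensor whose light is modulated at a known frequency; the 20 MHz value and the function names are illustrative assumptions, not details of any particular phone. Under that assumption, a measured phase shift maps to distance as d = c·φ / (4π·f).

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def phase_to_distance(phase_rad, mod_freq_hz):
    """Convert a measured phase shift (radians) to a distance in meters.

    Assumes continuous-wave modulation at mod_freq_hz. The factor 4*pi
    (rather than 2*pi) accounts for the light traveling out and back.
    """
    return SPEED_OF_LIGHT * phase_rad / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz):
    """Largest distance measurable before the phase wraps past 2*pi."""
    return SPEED_OF_LIGHT / (2.0 * mod_freq_hz)


MOD_FREQ_HZ = 20e6  # hypothetical modulation frequency

half_cycle = phase_to_distance(math.pi, MOD_FREQ_HZ)
max_range = unambiguous_range(MOD_FREQ_HZ)
print(f"phase of pi -> {half_cycle:.2f} m, max range {max_range:.2f} m")
```

In this sketch a higher modulation frequency gives finer distance steps per unit of phase, but a shorter range before the phase wraps around.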

This effectively makes it capable of determining the depth of objects, in real time.

The technology provides a huge advantage over normal cameras, as it can accurately measure distances in a complete scene using just a single laser pulse.

ToF is just one of many techniques that fall under the umbrella of 3D imaging. But compared to most of them, ToF has some advantages.

One is cost: ToF is relatively cheap. Another is speed: the sensor can reach 160fps, which makes it well suited to on-the-fly video. Then there is the amount of processing power ToF uses, which is relatively small.

While ToF is essentially a camera, it's more than just a simple camera, or, in some respects, a little less than an ordinary one.

Ordinary cameras perform at their peak when light is plentiful, or in other words, when it's not dark. But for ToF, the more outside light there is, the more the brightness and stray rays from the surroundings create noise that floods its receiving sensor.

Another downside is that ToF cameras have a relatively low resolution compared with primary cameras.

The technology can be traced back to the year 2000, when it was used in products for civil applications. The systems can cover ranges from a few centimeters up to several kilometers.

With its 3D-sensing capabilities, ToF can be used to measure distance and volume, as well as for object scanning, indoor navigation, obstacle avoidance, gesture recognition, object tracking, and more. Light Detection and Ranging (LiDAR) sensors, for example, can help autonomous cars navigate the real world, as they use ToF to create 3D models and maps of objects and surrounding environments.

But on mobile phones, the ToF sensor is less powerful.

It's more useful in helping generate real-time effects in both photos and videos, like creating depth blur. With lasers capable of picking up distance at a granularity as small as a single pixel, ToF can also help the other cameras on a smartphone focus in low-light conditions.
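
As a rough illustration of how a depth map could drive a depth-blur effect, the sketch below assigns each pixel a blur radius that grows with its distance from a chosen focal plane. The function name, the parameters, and the linear falloff are all assumptions made for illustration; phone makers' actual pipelines are not described here and are certainly more elaborate.

```python
def blur_radius_map(depth_map_m, focus_depth_m, max_radius_px=8.0, falloff_m=2.0):
    """Return a per-pixel blur radius (in pixels) from a depth map (in meters).

    Pixels at focus_depth_m stay sharp (radius 0). The radius grows linearly
    with distance from the focal plane and is capped at max_radius_px once
    a pixel is falloff_m or more away from it.
    """
    radii = []
    for row in depth_map_m:
        radii.append([
            min(max_radius_px, max_radius_px * abs(d - focus_depth_m) / falloff_m)
            for d in row
        ])
    return radii


# Hypothetical 2x3 depth map: a subject at about 1 m, background at 3 to 4 m.
depth = [
    [1.0, 1.0, 3.0],
    [1.0, 1.5, 4.0],
]
radii = blur_radius_map(depth, focus_depth_m=1.0)
for row in radii:
    print(row)
```

A renderer would then blur each pixel of the color image by its radius, leaving the subject sharp and softening the background.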

Furthermore, it can be used to improve augmented reality (AR) experiences.

The basic concept behind ToF operation is measuring the time it takes for light to travel to an object, bounce off it, and return.

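Light travels at roughly 300 million meters per second. Using this, the algorithms inside the device calculate the delay between the moment a light pulse is emitted from the sensor and the moment it gets back, to judge distance. Because the light covers the path twice, the distance is the speed of light multiplied by the delay, divided by two.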
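
The arithmetic is simple enough to sketch directly. The function names below are illustrative, and the example delay is a made-up value chosen to show the scale involved.

```python
SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def delay_to_distance(round_trip_s):
    """Distance in meters for a measured round-trip delay in seconds.

    Divided by two because the light travels to the object and back.
    """
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def distance_to_delay(distance_m):
    """Round-trip delay in seconds for an object distance_m meters away."""
    return 2.0 * distance_m / SPEED_OF_LIGHT


# A 10-nanosecond round trip corresponds to roughly 1.5 meters.
print(f"{delay_to_distance(10e-9):.3f} m")

# An object 1 cm closer changes the delay by only tens of picoseconds.
print(f"{distance_to_delay(0.01) * 1e12:.1f} ps")
```

The second line of output hints at why the timing electronics matter: resolving centimeters means resolving delays on the order of tens of picoseconds.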

The main difference between a ToF sensor and a normal sensor is that the former uses its own source of 'light' to understand its surroundings, while the latter relies on light emitted from other sources to get information.

With this ability, ToF can help devices see the world in 3D, rather than the usual 2D that normal cameras capture.

Google, Samsung, Apple and others have their own methods to calculate depth in photos and videos. But using ordinary camera sensors, no matter how good they are, is a burden on the hardware. Forcing the camera sensors to focus in real time while manipulating light, shadows and all, down to individual pixels, is a sure way to make a phone hot and drain its battery quickly.

With ToF, ordinary cameras can have some weight lifted off their shoulders. Because ToF can capture depth at a higher rate, it makes it easier to track motion, rather than just surfaces, in real time, and it does so more effectively while consuming less power.

Theoretically, ToF cameras can improve to a whole new level with more capable algorithms. While the light provides the information, it's the computers processing that information that do the magic trick.