The AI hype continues, and things don't look too good on one side of the ring.

When OpenAI introduced ChatGPT, it quickly sent ripples throughout the AI ecosystem and other industries, as people began to realize the potential of generative AI.

But on the other side of the ring, there are designers, photographers and others who worry about their work.

OpenAI's DALL·E and its successor DALL·E 2, Google's Imagen, and Stable Diffusion are all able to create new images out of thin air.

This is already an issue, and apparently it's only the beginning, because the AIs that came after them have stepped things up further.

Adobe's AI, for example, can manipulate existing real images.

As a result, existing artwork and images posted online can be manipulated without authorization, or stolen outright.

Traditionally, the best way to prevent image theft is to add a visible watermark.

A watermark discourages theft by superimposing another image or pattern onto the image it is meant to protect.

But this kind of watermark is of no use against AI.

In a report, researchers at MIT CSAIL described another kind of 'watermark', one that is invisible to the human eye but clearly perceptible to AI models.

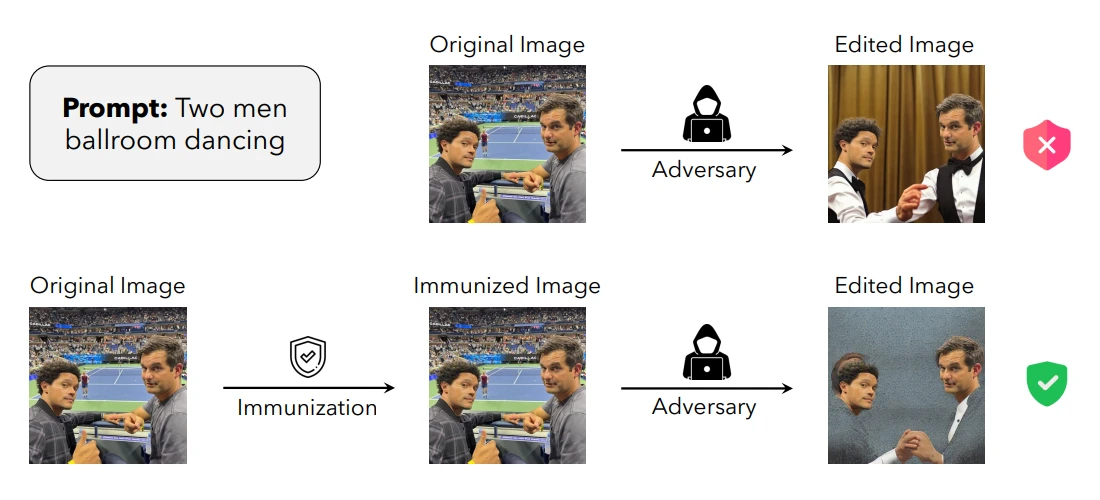

Using a method known as an adversarial attack, this watermarking technique is designed to prevent AI models from manipulating a protected image.

Called 'PhotoGuard', the technique works by making tiny alterations to the pixels of an image, in such a way that the changes disrupt an AI model's ability to understand what the image shows.

These deliberate alterations are known as "perturbations."

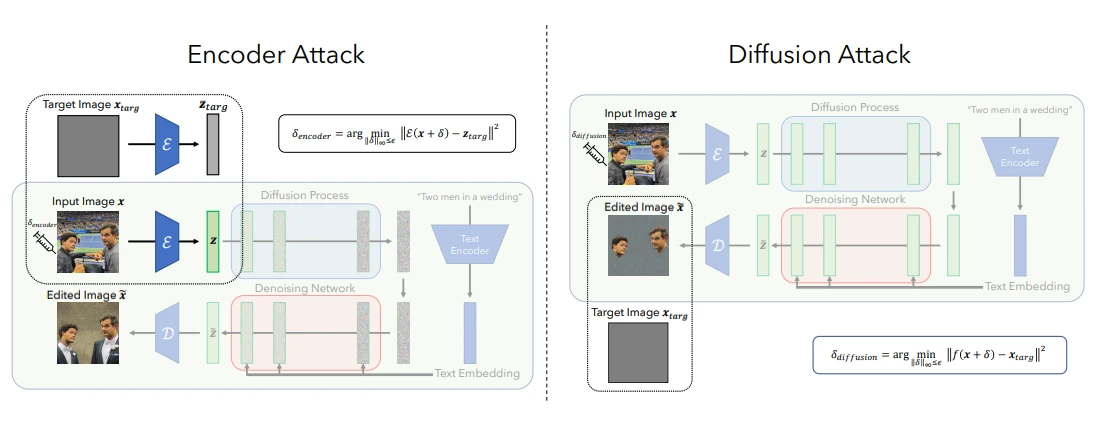

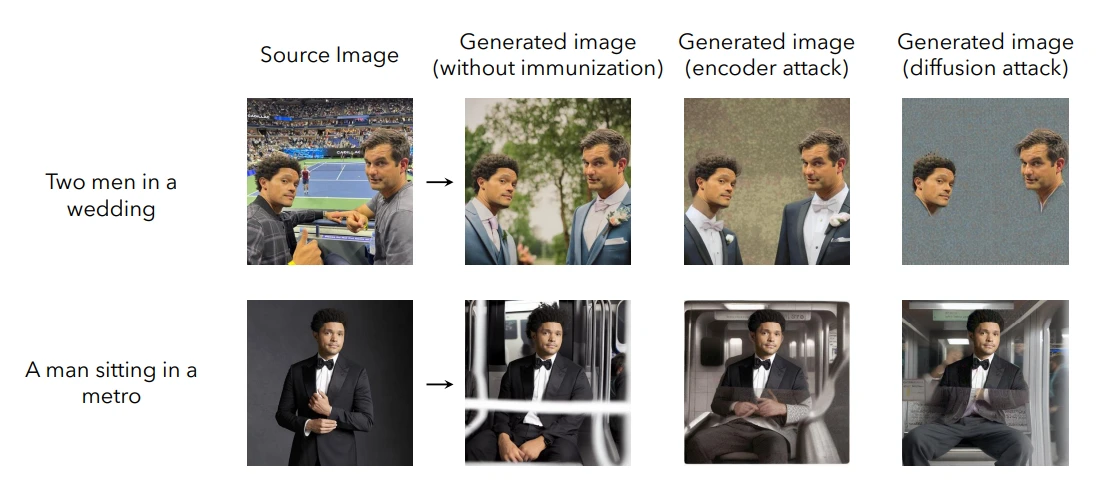

PhotoGuard uses two approaches. The first, called the "encoder" attack, uses these perturbations to target the model's latent representation of the image.

Because it targets the mathematical description of the position and color of every pixel in an image, the method can essentially prevent the AI from understanding what it is looking at.
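To make the encoder attack more concrete, here is a minimal sketch in Python. It is not PhotoGuard's code: it assumes a toy linear "encoder" (a random matrix `W`) in place of a real image model's encoder, and uses projected gradient descent, a standard way to craft adversarial perturbations, to pull the image's encoding toward that of a plain gray image while limiting every pixel change to a small budget `eps`. The function names and the values of `eps`, `step`, and `iters` are illustrative assumptions.

```python
import random


def encode(W, x):
    """Toy linear 'encoder' (an assumption): maps a flattened image x to W @ x."""
    return [sum(w * xj for w, xj in zip(row, x)) for row in W]


def loss(W, x, z_target):
    """Squared distance between the encoding of x and the target latent."""
    return sum((a - b) ** 2 for a, b in zip(encode(W, x), z_target))


def encoder_attack(W, x, z_target, eps=0.06, step=0.01, iters=100):
    """Projected sign-gradient descent toward z_target within an L-infinity ball."""
    delta = [0.0] * len(x)
    best, best_loss = list(x), loss(W, x, z_target)
    for _ in range(iters):
        x_adv = [xi + di for xi, di in zip(x, delta)]
        residual = [zi - ti for zi, ti in zip(encode(W, x_adv), z_target)]
        # Gradient of ||W x_adv - z_target||^2 with respect to x_adv is 2 W^T residual.
        grad = [2 * sum(W[i][j] * residual[i] for i in range(len(W)))
                for j in range(len(x))]
        for j, g in enumerate(grad):
            sign = (g > 0) - (g < 0)
            # Step against the gradient sign, then project into [-eps, eps] ...
            d = min(eps, max(-eps, delta[j] - step * sign))
            # ... and keep the perturbed pixel inside the valid [0, 1] range.
            delta[j] = min(1 - x[j], max(-x[j], d))
        candidate = [xi + di for xi, di in zip(x, delta)]
        cand_loss = loss(W, candidate, z_target)
        if cand_loss < best_loss:  # keep the best "immunized" image seen so far
            best, best_loss = candidate, cand_loss
    return best


if __name__ == "__main__":
    random.seed(0)
    n_pixels, n_latent = 16, 4
    W = [[random.gauss(0, 1) for _ in range(n_pixels)] for _ in range(n_latent)]
    image = [random.random() for _ in range(n_pixels)]
    z_gray = encode(W, [0.5] * n_pixels)  # latent of a plain gray image
    immunized = encoder_attack(W, image, z_gray)
    print("distance to gray latent:", loss(W, image, z_gray), "->", loss(W, immunized, z_gray))
```

Because no pixel moves by more than `eps`, the immunized image stays visually close to the original while its encoding drifts toward the gray target. A real attack on an image-editing model would differentiate through a neural encoder with an autodiff framework rather than computing the gradient by hand.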

The second approach is the more advanced and more computationally intensive "diffusion" attack, which camouflages an image as a different image in the eyes of the AI.

As a result, any attempt to manipulate the image produces an unrealistic-looking result.

"The encoder attack makes the model think that the input image (to be edited) is some other image (e.g. a gray image)," said MIT doctoral student and lead author of the paper, Hadi Salman.

"Whereas the diffusion attack forces the diffusion model to make edits towards some target image (which can also be some grey or random image)."

While the method can certainly help, it isn't foolproof.

Just as viruses and antivirus software, or hackers and security researchers, are always neck and neck, AI-based manipulators and detectors are waging their own war.

Malicious actors could, for example, try to reverse engineer the protection on an image by adding digital noise to it, or by cropping or flipping the picture.

"A collaborative approach involving model developers, social media platforms, and policymakers presents a robust defense against unauthorized image manipulation. Working on this pressing issue is of paramount importance today," Salman said in a release.

"And while I am glad to contribute towards this solution, much work is needed to make this protection practical. Companies that develop these models need to invest in engineering robust immunizations against the possible threats posed by these AI tools."