As long as there is a woman who sees herself as unequal to her male counterparts, feminism is here to stay.

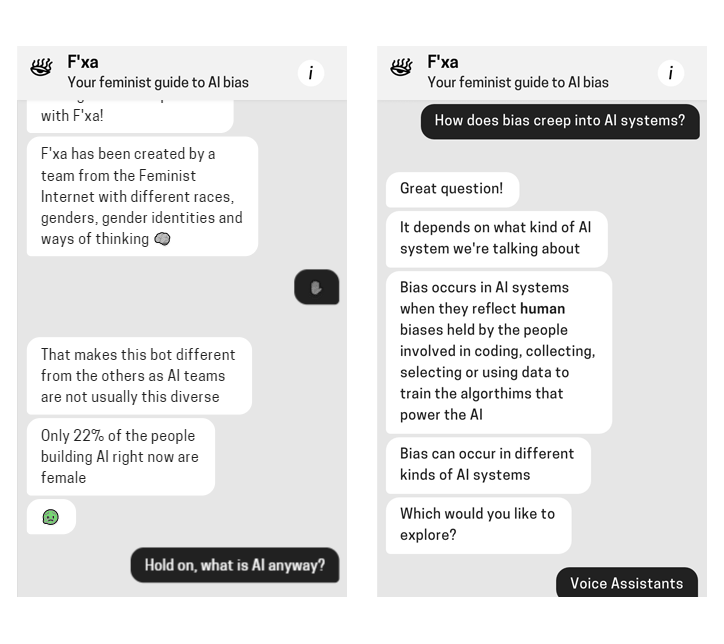

The Feminist Internet, a non-profit working to prevent biases from creeping into AI, has created F’xa. This feminist voice assistant was built to teach users about AI bias, and it suggests how they can avoid reinforcing what the group says are harmful stereotypes.

In its report I’d Blush If I Could (titled after Siri’s response to “Hey Siri, you’re a bitch”), UNESCO noted that voice assistants often react to verbal sexual harassment in an “obliging and eager to please” manner.

The reason given is that these assistants were created by teams consisting mostly of men, and those male-dominated teams made the industry-leading voice assistants female by default.

Most of them have typically female names, too, and are designed to have feminine voices.

Just think about it: Microsoft's Cortana was named after a synthetic intelligence in the video game Halo that projects itself as a sensuous, unclothed woman. Apple's Siri, meanwhile, means "beautiful woman who leads you to victory" in Norse.

Google Assistant has a more gender-neutral name than its two counterparts, but its default voice is female.

In today's era of hands-free, voice-enabled technology, the tasks most often given to voice assistants mirror jobs historically associated with women.

These can include setting alarms, waking the user up in the morning, creating to-do lists, putting together shopping lists, and all the way to answering 'naughty' questions.

Another factor behind this bias is that people typically prefer to hear a male voice when it comes to authority, but a female voice when they need help. This preference further fuels gender bias.

F’xa sets out to explain that these biases do exist, and to teach people to promote gender equality by challenging gender roles in voice assistants, just as they should be challenged in real life.

To make this happen, F’xa was built with feminist values in mind, so that it responds to user queries in line with feminist beliefs and avoids reinforcing bias and stereotypes.

F’xa was created by a diverse team using the Feminist Internet’s Personal Intelligent Assistant Standards and Josie Young’s Feminist Chatbot Design research.

In preparation for building F’xa, Young explored contemporary feminist techniques for designing technology, known as the Feminist Chatbot Design Process. This is a series of reflective questions incorporating feminist design, ethical AI principles, and research on de-biasing data.

Used through a smartphone, the bot works to ensure that developers and designers don’t build gender inequalities into their chatbots, and it educates users on how existing voice assistants can undermine gender equality.

For example, when F’xa is asked “How does bias creep into AI systems?”, it can reply: “Bias occurs in AI systems when they reflect human biases held by the people involved in coding, collecting, selecting, or using data to train the algorithms that power the AI.”

This kind of explanation is not offered by the 'usual' voice assistants.

According to F’xa, smart devices are colonizing the consumer electronics market. Researchers estimate that 24.5 million voice-driven devices will be used daily in 2019, and predictions further suggest that 50 percent of searches will be made via voice command by 2050.

So if gender equality matters, Josie Young is working to fix the problem with voice assistants before it becomes too difficult to solve.

Related: 'Q', The First Gender-Neutral Digital Voice Assistant In Challenging Gender Stereotypes