Playing games can enhance creativity and productivity. For us humans, playing too much may not be good, but for AI, the opposite is true.

DeepMind is a British artificial intelligence company founded in September 2010 and acquired by Google in 2014. It has shared the results of research and experiments in which multiple AI systems were trained to play Capture the Flag in Quake III Arena, a multiplayer-focused first-person shooter video game.

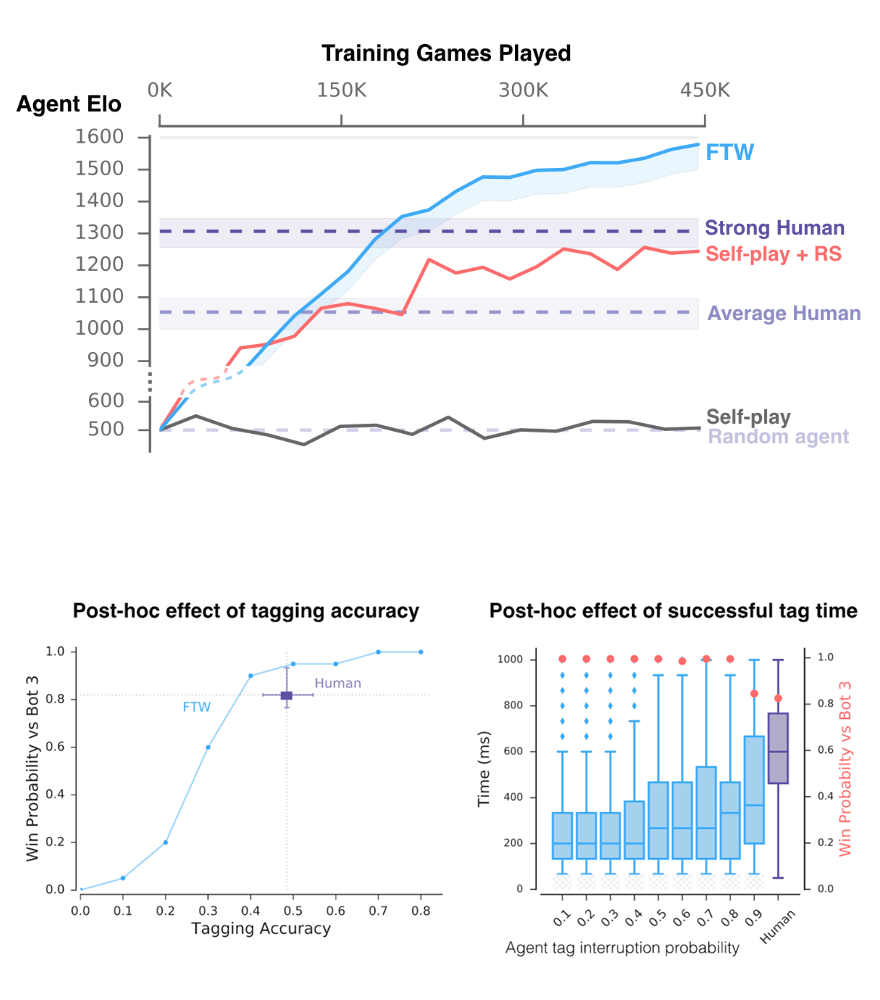

The AI, named For The Win (FTW), has played the game nearly 450,000 times, and as a result has become better than most human players and other machines at the game.

FTW has shown that it can learn how to work effectively with humans or other machines while playing the game.

"We train agents that learn and act as individuals, but which must be able to play on teams with and against any other agents, artificial or human," said DeepMind in a blog post.

"From a multi-agent perspective, [Capture the Flag] requires players to both successfully cooperate with their teammates as well as compete with the opposing team, while remaining robust to any playing style they might encounter."

To teach AI to play video games, researchers usually use reinforcement learning, with a focus on environments that have only a handful of players. In FTW's case, DeepMind experimented with a population of 30 agents trained concurrently, playing four at a time against humans or machines.
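As a rough illustration of the "30 agents, four at a time" setup, the sketch below draws one match from a population. It is not DeepMind's matchmaking code: the uniform random sampling, the split into two teams of two, and the names `sample_match` and `agent_0` through `agent_29` are all assumptions made for the example.

```python
import random

POPULATION_SIZE = 30    # population size reported in the article
PLAYERS_PER_MATCH = 4   # agents playing at a time, per the article


def sample_match(population, rng):
    """Pick four distinct agents and split them into two teams of two.

    Uniform sampling and the 2-vs-2 split are assumptions for this sketch.
    """
    players = rng.sample(population, PLAYERS_PER_MATCH)
    return players[:2], players[2:]


# Hypothetical agent identifiers, used only for illustration.
population = [f"agent_{i}" for i in range(POPULATION_SIZE)]
rng = random.Random(0)
team_a, team_b = sample_match(population, rng)
```

Repeating `sample_match` many times means every agent eventually plays with and against many different partners, which is the property the DeepMind quote above emphasizes.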

Capture the Flag was played in settings with random map layouts rather than a static one.

The game has also been modified, though all game mechanics remain the same. It has a consistent environment to train the AI's general understanding. To make it more realistic, the researchers also included indoor environments with flat terrain and outdoor environments with varying elevations.

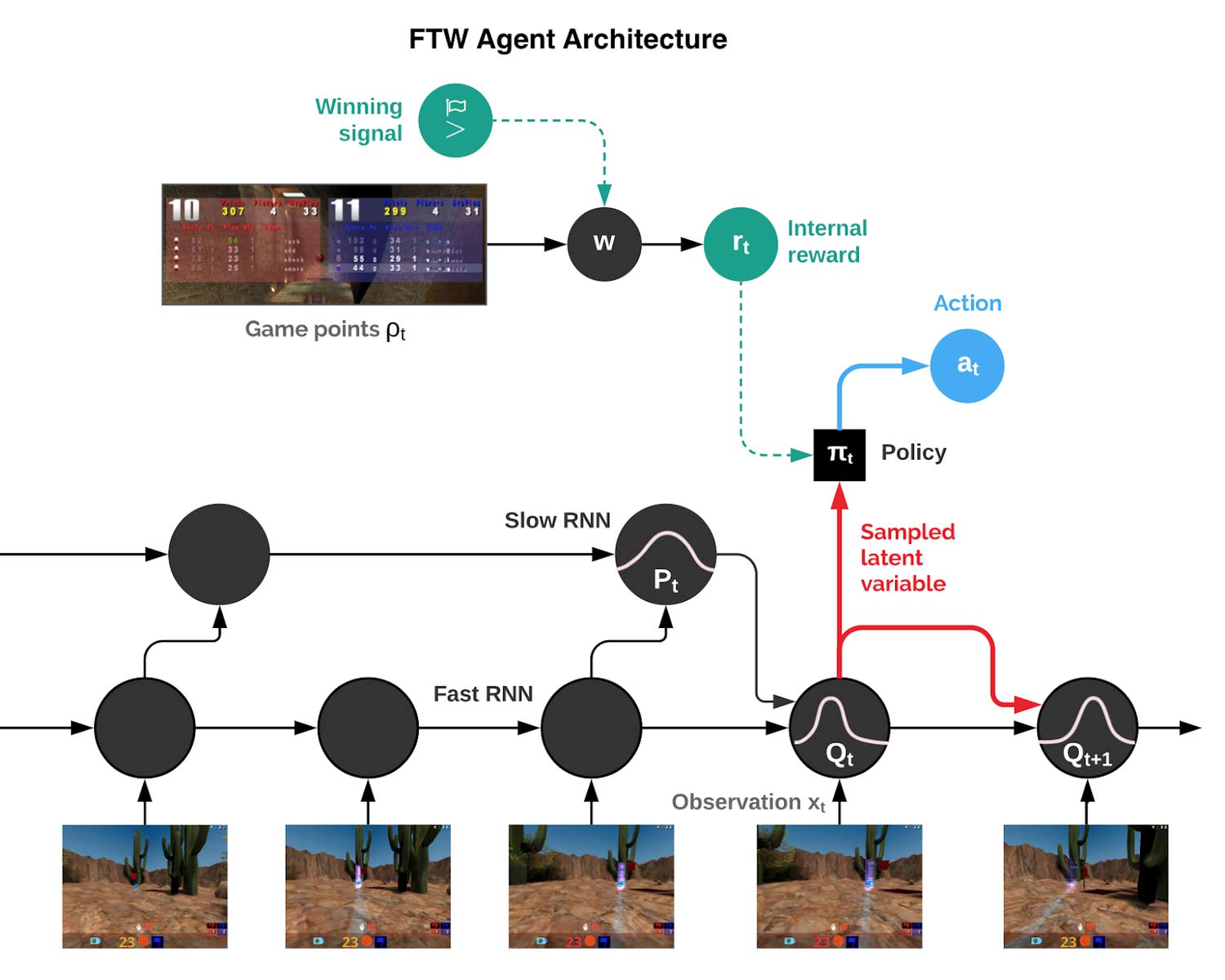

The agents were then operated in slow or fast modes, and developed their own internal reward systems. The agents' only objective was to win the game by capturing the most flags within 5 minutes.

FTW quickly learned basic strategies such as home base defense, following a teammate, or camping out in an opponent’s base to tag them after a flag has been captured.
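To make the distinction between the game's objective and an agent's internal reward concrete, here is a minimal sketch. It assumes an internal reward can be modeled as a weighted sum of game events; the event names, the weights, and the functions `match_result` and `internal_reward` are hypothetical and are not taken from DeepMind's work.

```python
def match_result(own_captures, opponent_captures):
    """Outcome when the 5-minute limit expires: +1 win, -1 loss, 0 draw."""
    return (own_captures > opponent_captures) - (own_captures < opponent_captures)


def internal_reward(event_counts, weights):
    """Weighted sum of game events seen by one agent.

    Modeling the internal reward this way is an assumption of this sketch;
    events without a weight contribute nothing.
    """
    return sum(weights.get(event, 0.0) * count
               for event, count in event_counts.items())


# Hypothetical per-agent weights and one agent's event counts.
weights = {"captured_flag": 1.0, "tagged_opponent": 0.5, "was_tagged": -0.5}
events = {"captured_flag": 2, "tagged_opponent": 3, "was_tagged": 1}

shaped = internal_reward(events, weights)   # dense signal during play
outcome = match_result(2, 1)                # sparse signal at the end
```

The design point is that the win/loss outcome arrives only once per match, while an internal reward of this kind gives an agent feedback throughout the game.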

In a tournament with and against 40 human players, machine-only teams were undefeated when playing against human-only teams, and had a 95 percent chance of winning against teams in which humans played with a machine partner.

On average, two FTW agents were able to capture 16 more flags per game than the human-machine teams.

This is because the agents were found to be 80 percent more efficient than humans at tagging (touching an opponent to send them back to their spawning point) and quick to learn tactics. FTW was also more collaborative.

FTW continued to have an advantage over human players even when the researchers suppressed its tagging abilities.

DeepMind’s study further shows how reinforcement learning can enable machines to play video games using memory or other traits common in humans.

Insights include how multi-agent environments can be used to inform human-machine interaction and train AIs to work together.

Previously, DeepMind created AlphaGo, an AI that beat the world's top human Go player in May 2017. It also created AlphaGo Zero, which surpassed the original AlphaGo.

The company also created AlphaZero, an AI that learned chess in just 4 hours and went on to beat or tie the previous top chess computer program in 100 out of 100 matches.

DeepMind has also found much value in StarCraft, Blizzard's military science fiction game. In August 2017, DeepMind announced the release of the StarCraft II API for reinforcement learning as part of a partnership with Blizzard.

As for AI playing first-person shooter games, IntelAct, which was programmed by Intel Labs researchers Alexey Dosovitskiy and Vladlen Koltun, won 10 out of 12 games of Doom.

OpenAI has also shown an interest, having created an AI capable of defeating the world's best Dota 2 players.