How good are most people at editing images? Apparently, not very.

Using Adobe Photoshop, one of the most popular image editing programs, people can edit images and photos and alter them as they want. Quick fixes are easy to make with the software.

However, even with the sophisticated tools the software offers, people still need skill, experience and, of course, a steady hand to "flawlessly" edit images and add creative enhancements.

MIT’s Computer Science and Artificial Intelligence Lab (CSAIL) has a solution for that: an AI-assisted image editing tool that automates object selection.

"Instead of needing an expert editor to spend several minutes tweaking an image frame-by-frame and pixel-by-pixel, we’d like to make the process simpler and faster so that image-editing can be more accessible to casual users," said Yagiz Aksoy, a visiting researcher at MIT’s CSAIL.

The "magic" here involves a lot of complex algorithms and computations.

The team at CSAIL uses a neural network to process the image features and make determinations about the soft edges of an image.

Humans have the ability to differentiate things based on context. In a photo of a motorbike at the side of a road, for example, humans can easily pick out the bike by looking at how its edges meet the background, and tell it apart from the rest of the scene.

Computers have to be taught how to do this, and it’s not a simple task.

This is because computers see a picture as a grid of pixels. In "soft transitions," such as hair and grass, a single pixel can be shared between two different objects. In the motorbike's case, the pixels used to render the bike and the pixels used to render the road are shared at the very edges where the two meet.

"The tricky thing about these images is that not every pixel solely belongs to one object. In many cases it can be hard to determine which pixels are part of the background and which are part of a specific person," said Aksoy.
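The shared pixels described above can be pictured as a weighted mix of two colors. The sketch below is only an illustration of that idea using standard alpha blending, not code from the CSAIL system; the function name `blend_pixel` and the bike and road colors are made up for the example.

```python
def blend_pixel(foreground, background, alpha):
    """Mix two RGB colors; alpha is the share of the pixel owned by the foreground."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    return tuple(
        round(alpha * f + (1.0 - alpha) * b)
        for f, b in zip(foreground, background)
    )


# Hypothetical colors chosen for illustration only.
bike_red = (200, 30, 30)
road_gray = (90, 90, 90)

# A pixel well inside the bike belongs to the bike alone.
interior = blend_pixel(bike_red, road_gray, 1.0)

# A pixel on the soft edge is 40% bike and 60% road.
edge = blend_pixel(bike_red, road_gray, 0.4)

print(interior)  # (200, 30, 30)
print(edge)      # (134, 66, 66)
```

An editor who treats the edge pixel as entirely "bike" or entirely "road" gets a visible halo or a jagged cut-out, which is why the fractional share matters.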

The MIT team took this into account and worked out how to make the AI split the difference on its own.

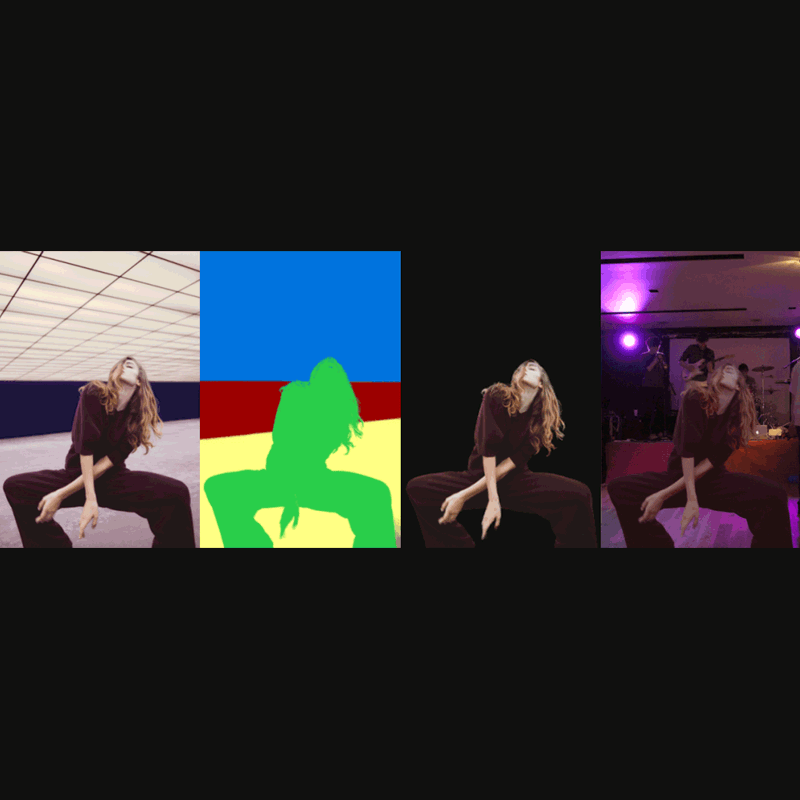

Using a technique it calls "Semantic Soft Segmentation" (or SSS), the AI separates objects in a picture by putting them into different segments, away from the background. This allows users to easily select which layer they want to alter.

The AI is unlike the Magnetic Lasso or Magic Lasso tools available in most photo editing software, as it doesn’t rely on user input for context. This means users don’t have to trace around an object or zoom in to catch the fine details.

"The vision is to get to a point where it just takes a single click for editors to combine images to create these full-blown, realistic fantasy worlds."

The AI studies the original image's texture and color, and combines them with information collected by a neural network about what the objects within the image actually are. The neural network processes the image features and detects those "soft transitions".

"Once these soft segments are computed, the user doesn’t have to manually change transitions or make individual modifications to the appearance of a specific layer of an image," said Aksoy. "Manual editing tasks like replacing backgrounds and adjusting colors would be made much easier."
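To see why soft segments make edits like background replacement easier, consider the minimal sketch below. It assumes each layer stores a color and an opacity for every pixel, and that the opacities of all layers add up to 1 at each pixel. The layer structure, the `composite` function and all colors are hypothetical and are not taken from the researchers' code.

```python
def composite(layers):
    """Recombine soft layers: for each pixel, sum alpha * color over all layers."""
    n_pixels = len(layers[0]["alpha"])
    image = []
    for i in range(n_pixels):
        pixel = [0.0, 0.0, 0.0]
        for layer in layers:
            a = layer["alpha"][i]
            for c in range(3):
                pixel[c] += a * layer["color"][i][c]
        image.append(tuple(round(v) for v in pixel))
    return image


# Three pixels in a row: bike interior, soft edge, road.
# The opacities of the two layers sum to 1 at every pixel.
bike = {"alpha": [1.0, 0.4, 0.0], "color": [(200, 30, 30)] * 3}
road = {"alpha": [0.0, 0.6, 1.0], "color": [(90, 90, 90)] * 3}

original = composite([bike, road])

# Replace the background: keep the road layer's opacities, change only its color.
grass = {"alpha": road["alpha"], "color": [(40, 160, 60)] * 3}
edited = composite([bike, grass])

print(original)  # [(200, 30, 30), (134, 66, 66), (90, 90, 90)]
print(edited)    # [(200, 30, 30), (104, 108, 48), (40, 160, 60)]
```

Because the edge pixel keeps its 40/60 split, it blends into the new background without anyone retouching the transition by hand.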

The future of image and video editing is certainly AI, and CSAIL is showing just that.

Getting details out of a picture can be tedious, time-consuming and difficult for anyone but the most seasoned editors. In a paper, Aksoy and his colleagues demonstrated a way to use this machine learning technology to automate many parts of the photo editing process.

But the AI the team created requires a lot of computing power and time to work. For example, it needs about 4 minutes to process one easy image, a task on which a human Photoshop expert could probably beat it.

In the future, the researchers have plans to reduce the time the AI needs to compute an image from minutes to seconds, and also to make images it creates even more realistic by improving the system’s ability to match colors and handle things like illumination and shadows.

According to Aksoy, SSS in its initial iteration is focused on static images, and could be used by social platforms like Instagram and Snapchat to make their filters more realistic, for example by changing the backgrounds of selfies or simulating specific kinds of cameras.

But the team said it could be applied to video in the foreseeable future.