No product is perfect, even when that product comes from one of the most famous tech companies in the world.

Apple has Siri, the digital assistant available across its products. With it, users can interact by voice command and have the AI perform certain tasks for them. This time, Apple admitted that it inadvertently recorded some of those voice interactions because of a bug.

This was first revealed when Apple released iOS 15.4 beta 2.

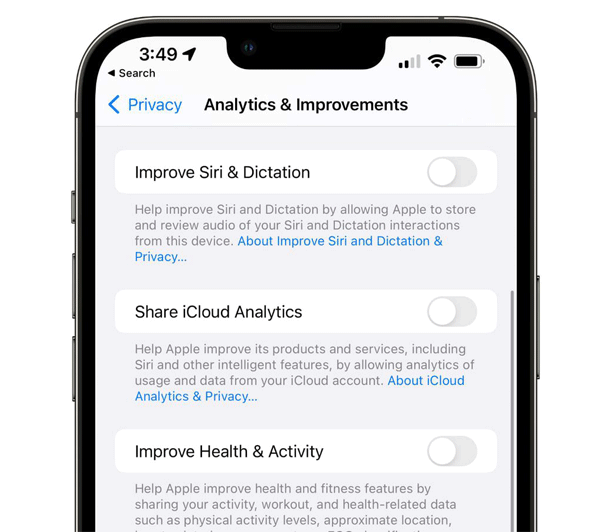

That version of the operating system began asking users for permission to enable the 'Improve Siri & Dictation' feature.

This was when it became clear that the update fixes a bug that recorded user interactions with Siri on some Apple devices, regardless of whether users had opted out.

The bug, first introduced in iOS 15, automatically enabled the 'Improve Siri & Dictation' setting.

In a newsroom post, Apple said that:

After discovering the bug, the company reportedly turned off the feature for “many” users when it released iOS 15.2, stopped Siri from recording by default, and started deleting any inadvertently collected recordings.

Apple then fully fixed the bug in the second beta of iOS 15.4.

Following the lengthy explanation, Apple issued an apology:

To prevent similar issues in the future, Apple also announced several changes to Siri’s privacy policy:

- By default, Apple will no longer retain audio recordings of Siri interactions. Instead, it will continue to use computer-generated transcripts to help Siri improve.

- Users will be able to opt in to help Siri improve by learning from the audio samples of their requests. Those who choose to participate will be able to opt out at any time.

- When users opt in, only Apple employees will be allowed to listen to audio samples of the Siri interactions. Our team will work to delete any recording which is determined to be an inadvertent trigger of Siri.

It is no longer a secret that tech companies that provide digital assistant services, such as Apple, Google, Amazon and Microsoft, hire human contractors to listen to recordings.

They do this so they can improve the quality of the digital assistants.

Although the recordings are anonymized and not labeled, the issue is that this practice is usually not made clear to customers.

According to reports, those contractors could access recordings that were full of private details, often captured through accidental triggers, and each worker was said to listen to up to 1,000 recordings a day.

And this is certainly a privacy issue.

In Apple's case, the company gave users the option to opt out. But a bug automatically enabled the 'Improve Siri & Dictation' setting, turning it on by default.

While Apple patched the bug and apologized, the company did not say how many phones were affected, or when.