The future of computing lies in machines that learn through artificial intelligence (AI). As tech companies battle to bring the technology into the mainstream, Google has updated its machine learning software, TensorFlow, to make it more efficient.

TensorFlow is Google's way of teaching computers to process data in ways loosely modeled on the human brain, using neural networks. It powers many of Google's services and has been open source since November 2015.

On Wednesday, 13 April 2016, Google updated TensorFlow to version 0.8. While this version doesn't change much on the surface, it introduces one of TensorFlow's most requested features: the ability to run on multiple machines.

Previously, TensorFlow was limited to a single machine. With this version, TensorFlow can compute using many machines working in parallel, up to hundreds of machines and GPUs. So instead of being limited to the processing power of one computer, TensorFlow can now run on a distributed network to handle more complicated tasks.
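To make "many machines working in parallel" more concrete, here is a minimal Python sketch of synchronous data-parallel training, one common way to split a learning job across machines. It is a single-process simulation under stated assumptions, not TensorFlow's API: the "workers" are just slices of a list, and the names `shard`, `local_gradient` and `train` are hypothetical. Each worker computes a gradient on its own slice of the data, and the averaged gradient updates one shared model parameter.

```python
"""Toy, single-process simulation of synchronous data-parallel training.

Hypothetical illustration only; this is NOT TensorFlow's API. Each "worker"
stands in for a separate machine that holds one shard of the training data.
"""
from typing import List, Tuple

Example = Tuple[float, float]  # one (x, y) training pair


def shard(data: List[Example], num_workers: int) -> List[List[Example]]:
    """Split the dataset round-robin into one shard per worker."""
    return [data[i::num_workers] for i in range(num_workers)]


def local_gradient(w: float, examples: List[Example]) -> float:
    """Mean-squared-error gradient for the model y_hat = w * x on one shard."""
    return sum(2.0 * (w * x - y) * x for x, y in examples) / len(examples)


def train(data: List[Example], num_workers: int, lr: float = 0.01, steps: int = 200) -> float:
    """Fit w by gradient descent, averaging per-worker gradients each step."""
    if not 1 <= num_workers <= len(data):
        raise ValueError("need between 1 and len(data) workers")
    shards = shard(data, num_workers)
    w = 0.0
    for _ in range(steps):
        # On a real cluster each gradient would be computed concurrently on its
        # own machine; this simulation just loops over the shards.
        grads = [local_gradient(w, s) for s in shards]
        # A coordinator (e.g. a parameter server) averages them and updates w.
        # With equal-sized shards this equals the full-dataset gradient.
        w -= lr * sum(grads) / len(grads)
    return w
```

For example, fitting `data = [(float(x), 3.0 * x) for x in range(1, 9)]` with `train(data, num_workers=4)` recovers a weight close to 3.0, the same answer a single worker reaches. On a real cluster the gain comes from computing the per-shard gradients at the same time; the price is the communication needed to combine them at every step.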

In short, TensorFlow had a brain; now it has brains.

Not everyone needs to run TensorFlow on hundreds, let alone thousands, of servers at a time. But with the update, users can benefit from running TensorFlow on multiple machines to accomplish more complicated tasks in less time.

And because the source code is open, TensorFlow can be developed by many researchers, scientists and businesses, improving its machine learning capabilities.

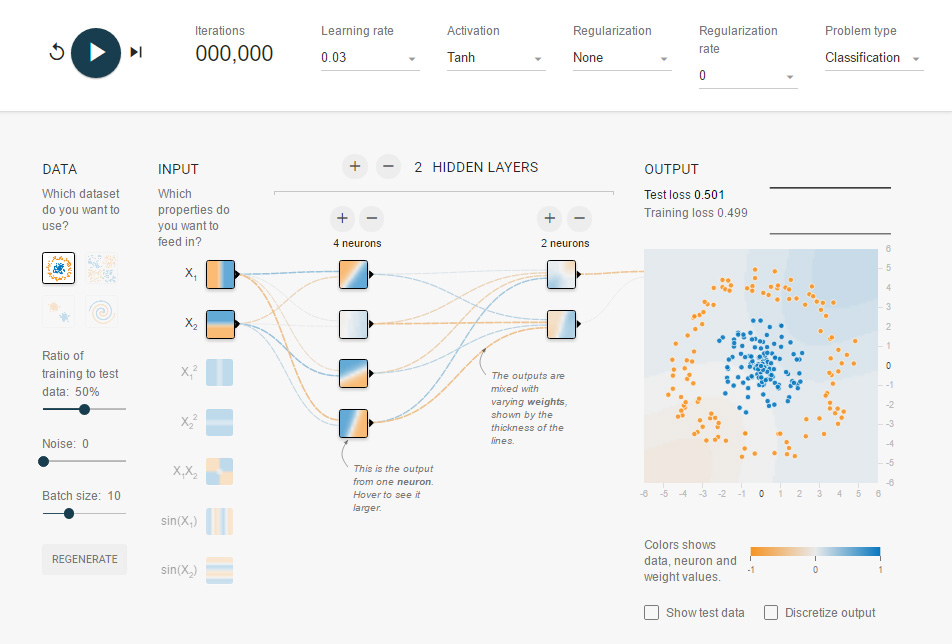

TensorFlow is complex and can be difficult to set up. If you're curious about it, Google has created an in-browser neural network demo, TensorFlow Playground, to explain how machine learning works. It lets you experiment with basic neural network setups and deep learning concepts (screenshot above). Google also recently announced a hosted Google Cloud Machine Learning platform for developers.

Brains > Brain = Faster. But Is It Better?

TensorFlow was first developed to improve many of the Google services that users interact with, and it has powered Translate, Photos and more. With TensorFlow, Google can better understand what users intend when they use its apps and services, and do what it does best.

With TensorFlow open source, the public can build their own AI applications using the same software that Google uses, which could help them create just about anything from photo recognition to automated email replies. TensorFlow has already been used in a number of projects, including a program that learns to play Pong and a neural network that generates fake but realistic-looking Chinese characters.

Machine learning is still in its infancy. Young children can already tell a dog from a cat just by looking at them, but this does not come easily to computers. Human brains handle such tasks naturally, while machines still have a lot to learn.

But AI and machine learning have advanced tremendously in the last few years. TensorFlow is one of the tools used to help machines perceive and process the world by loosely emulating the human brain.

Before TensorFlow, Google used a system called DistBelief for essentially the same job. TensorFlow was built to take DistBelief to a new level of performance. Google CEO Sundar Pichai said that TensorFlow is up to five times faster than its predecessor, and Google's improvements in the last few years can be partly attributed to the switch from DistBelief to TensorFlow.

Matthew Zeiler, who was an intern on the DistBelief project, said:

"Deep learning is a huge opportunity right now because it enables developers to create applications in a way that was never possible before. Neural networks are a new way of programming computers … It's a new way of handling data."

Once it became available, TensorFlow was a quick hit among developers. It didn't take long to become the most popular and most forked project on GitHub in 2015, and it was among the six open source projects that received the most attention from developers that year.

The update took a while because TensorFlow had to be adapted to environments outside the one where it was born. As TensorFlow's technical lead Rajat Monga explained, the multi-server version was delayed by the difficulty of making the software usable outside Google's customized data centers.

"Our software stack is different internally from what people externally use," he said. "It would have been extremely difficult to just take that and make it open source."

As good as it is, TensorFlow has had some shortcomings. The first was that it could only run on one machine at a time, which is now resolved. The second is competition: TensorFlow is not the only machine learning software out there, and some alternatives do the job just as well, so TensorFlow is not unique. But backed by Google, TensorFlow does inherit some of Google's strengths, such as a consistent design and results that are easy to share, which make it more inviting for novices. Google has made it easy to get familiar with, and developers can switch between libraries with less effort.

Being able to run TensorFlow on multiple machines at once is an obvious improvement. After all, machine learning and AI need a lot of data to "learn", and the smarter they get, the hungrier they become. Processing huge amounts of data takes a lot of resources and, of course, time. By running on multiple machines, TensorFlow can now finish in a few hours processing the same data that previously took days or even weeks.
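Whether "days or weeks" really shrinks to "a few hours" depends on how much of a job can actually run in parallel. A common back-of-the-envelope check is Amdahl's law, which the original announcement does not mention; the Python sketch below applies it to a hypothetical one-week job with hypothetical serial fractions. The function names are illustrative, and the formula ignores the separate network and synchronization costs that real distributed training also pays.

```python
"""Back-of-the-envelope speedup estimate for spreading a job over N machines.

Illustrative only: the job length and serial fractions are hypothetical, and
Amdahl's law does not separately model network or synchronization overhead.
"""


def amdahl_speedup(machines: int, serial_fraction: float) -> float:
    """Speedup = 1 / (s + (1 - s) / N), where s is the share that cannot run in parallel."""
    if machines < 1 or not 0.0 <= serial_fraction <= 1.0:
        raise ValueError("need machines >= 1 and 0 <= serial_fraction <= 1")
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / machines)


def estimated_hours(single_machine_hours: float, machines: int, serial_fraction: float) -> float:
    """Wall-clock hours after dividing the single-machine time by the speedup."""
    return single_machine_hours / amdahl_speedup(machines, serial_fraction)


if __name__ == "__main__":
    week = 7 * 24  # hypothetical one-week job on a single machine: 168 hours
    for s in (0.0, 0.01, 0.05):
        hours = estimated_hours(week, 100, s)
        print(f"serial fraction {s:.2f}: {hours:.1f} hours on 100 machines")
```

With these made-up numbers, a 168-hour job on 100 machines comes down to about 1.7 hours if everything parallelizes perfectly, about 3.3 hours if 1% of the work is serial, and about 10 hours at 5%. Even small serial or coordination costs eat noticeably into the ideal speedup.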