Securing user data has become increasingly important as people upload more and more of their information to the web.

Among the many kinds of user data on the web is facial data. This kind of data can be used by, for example, government agencies around the world that rely on automated services to keep tabs on their citizens. If a photo of a person is available on the internet, that person's facial information can be used to train AIs or to track them through public surveillance cameras.

Facebook is one of the most popular platforms where people store their photos. And here, the social giant has devised a way to thwart this face recognition technology.

Developed by Oran Gafni, Lior Wolf and Yaniv Taigman from Facebook AI Research and Tel-Aviv University, the method involves live face 'de-identification' to slightly modify people's faces.

In a YouTube video shared by Gafni, the AI tweaks certain details, such as the shape of a person's mouth or the size of their eyes.

The change can be subtle, but it can trick facial recognition systems so that they fail to match what they see in the footage with images of the people in their databases.

Facebook said that this technology can be used with pre-recorded content as well as with live video.

According to the researchers:

"The goal is to maximally decorrelate the identity, while having the perception (pose, illumination and expression) fixed. We achieve this by a novel feed-forward encoder-decoder network architecture that is conditioned on the high-level representation of a person’s facial image. The network is global, in the sense that it does not need to be retrained for a given video or for a given identity, and it creates natural looking image sequences with little distortion in time."

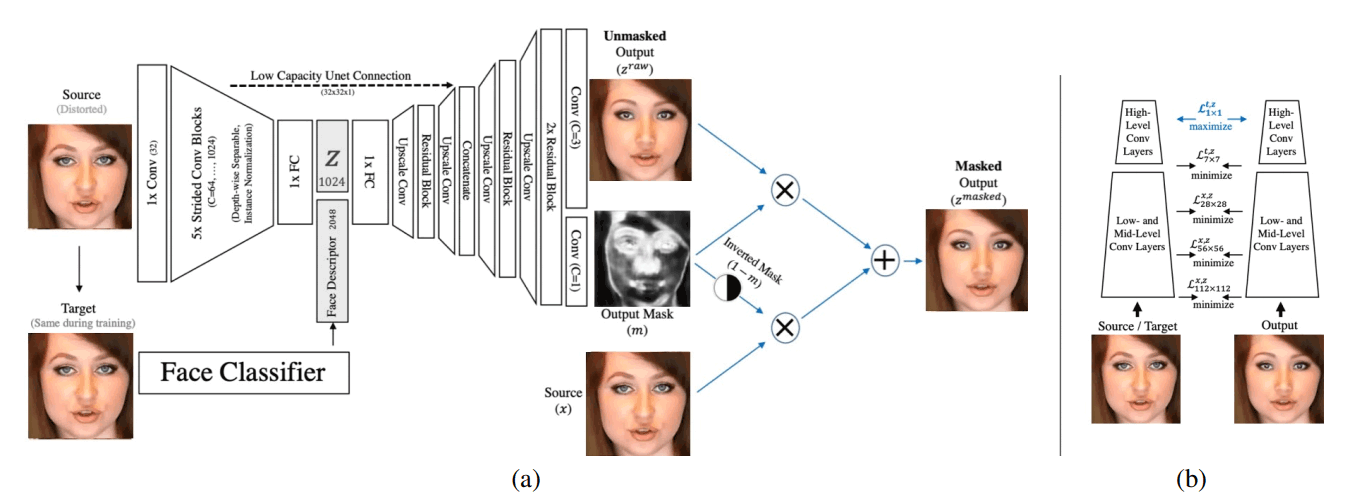

The AI uses an encoder-decoder architecture to generate both a mask and an image.

During the training process, the person’s face is distorted before being fed into the network. The system then generates distorted and undistorted images of a person’s face for output that can be embedded into videos.
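
To make the role of the mask more concrete, here is a minimal sketch of how a generated mask and a generated image could be combined with the original frame. The per-pixel blending rule, the function name `blend_with_mask`, and the toy pixel values are all assumptions for illustration; the article only states that the network outputs a mask and an image, not how they are merged.

```python
from typing import List

# A grayscale image as rows of pixel values in [0, 1] (toy representation).
Image = List[List[float]]


def blend_with_mask(original: Image, generated: Image, mask: Image) -> Image:
    """Blend two images per pixel.

    Assumed rule: where the mask is 1 the generated pixel is used,
    where it is 0 the original pixel is kept, and values in between mix both.
    """
    return [
        [m * g + (1.0 - m) * o for o, g, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]


# Hypothetical 2x2 frame, not real network output.
original = [[0.2, 0.4], [0.6, 0.8]]
generated = [[1.0, 1.0], [0.0, 0.0]]
mask = [[1.0, 0.0], [0.5, 0.0]]

blended = blend_with_mask(original, generated, mask)
```

With a rule like this, pixels outside the masked face region pass through untouched, which is one plausible way to keep the background and overall appearance of the footage stable.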

"The generation task is, therefore, highly semantic, and the loss required to capture its success cannot be a conventional reconstruction loss."

"For the task of de-identification, we employ a target image, which is any image of the person in the video. The method then distances the face descriptors of the output video from those of the target image."

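The idea of distancing face descriptors can be illustrated with a small sketch. It assumes that a descriptor is a plain vector of numbers and that cosine distance is the measure of dissimilarity; the article does not say which measure the researchers use, and the names `cosine_similarity` and `identity_distance` as well as the toy vectors are hypothetical.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two non-zero descriptor vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def identity_distance(output_descriptor: Sequence[float],
                      target_descriptor: Sequence[float]) -> float:
    """Assumed dissimilarity score: 0 for identical directions, up to 2 for opposite."""
    return 1.0 - cosine_similarity(output_descriptor, target_descriptor)


# Hypothetical descriptors, not produced by a real face recognition model.
target = [1.0, 0.0, 0.0]        # descriptor of the target image
unchanged = [1.0, 0.0, 0.0]     # output frame that still matches the target
deidentified = [0.0, 1.0, 0.0]  # output frame pushed away from the target

distance_unchanged = identity_distance(unchanged, target)
distance_deidentified = identity_distance(deidentified, target)
```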
While photos can be accessed by anyone on the internet, the people in those photos should be aware that their images can be used by companies to train AIs.

One example was when a Google contractor was reportedly caught exploiting black people to gather their face data without their knowledge.

This technology from Facebook could allow for more ethical use of video footage of people for training AI systems, which typically require several examples to learn how to emulate the content they’re fed.

By making people’s faces impossible to recognize, these AI systems can be trained without infringing on the subjects’ privacy.

While this technology could be useful in the future, Facebook has no plans to use it in any of its own products.

The social network already uses its own facial recognition to identify users for photo tagging and to alert users when others upload a photo of them.