AI can play games, and sometimes it can play them better than humans.

DeepMind is Google's UK-based subsidiary. After successfully developing AIs that play Go and chess, the company has created an AI system that reached the highest rank in StarCraft II, the complex and widely popular computer game by Blizzard Entertainment.

In this milestone achievement, the AI, called AlphaStar, outperformed 99.8% of ranked human players and attained Grandmaster status in the game.

"AlphaStar has become the first AI system to reach the top tier of human performance in any professionally played e-sport on the full unrestricted game under professionally approved conditions," said David Silver, a researcher at DeepMind, in a blog post.

What makes this astonishing is that the AI mastered the game after just 44 days of training. Because the training ran at high speed, those 44 days were equivalent to about 200 years of human gameplay.

During that time, the AI learned from a multitude of recordings of the best human StarCraft II players, before playing against copies of itself and against versions of the AI created specifically to test its weaknesses.


AlphaStar played one-on-one StarCraft II games, in which two players compete against each other after each chooses one of three races: Zerg, Protoss, or Terran. Each race has different capabilities and technologies that favor distinct defensive and offensive strategies.

Each player starts with only a few units and must gather resources, namely minerals and gas, which are used to construct new buildings, create new units, and develop new technologies.

The goal of the game is to overpower the opponent by destroying their buildings.

AlphaStar played the game much as a human would: it was shown only a small section of the map at a time, and it could only point the in-game "camera" at an area if some of its units were based there or had traveled to it.

At any given moment, the combinations of moves and strategies can add up to more than 100 trillion trillion possibilities, and a player must chain together thousands of such choices to overwhelm the opponent.

DeepMind said that StarCraft II posed a tougher AI challenge than chess and other board games, in part because an opponent's pieces are often hidden from view.

"Ever since computers cracked Go, chess and poker, the game of StarCraft has emerged, essentially by consensus from the community, as the next grand challenge for AI," Silver said. "It’s considered to be the game which is most at the limit of human capabilities."

To master the game, DeepMind trained AlphaStar using three separate neural networks, one for each race.

To start, AlphaStar tapped into a vast database of recorded StarCraft II games provided by Blizzard, using this mountain of data to train the agents to imitate the moves of the game's strongest players.

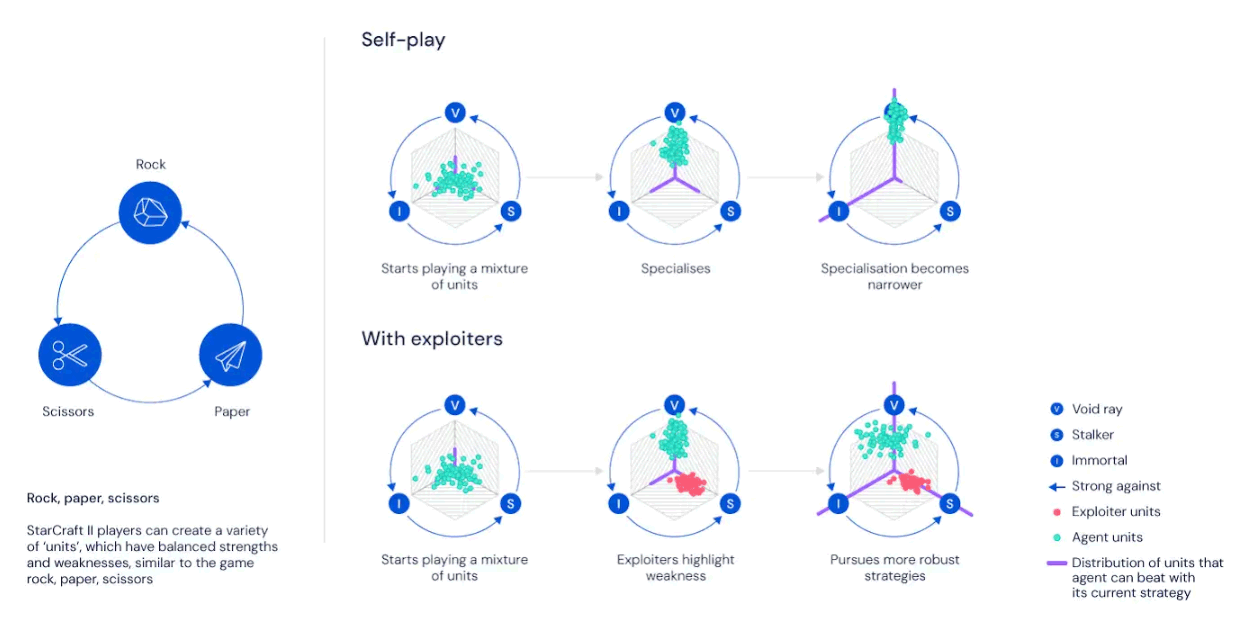

Copies of these agents were then pitted against each other to hone their skills via a technique known as reinforcement learning.

DeepMind's researchers also created "exploiter agents" to expose weaknesses in the main agents' strategies, so that those weaknesses could be found and corrected.
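For readers curious how such a league of agents might be organized, here is a toy Python sketch of the matchmaking idea: main agents train against the rest of the league, while exploiters play only against main agents to hunt for their weak spots. It is a simplified illustration under our own assumptions, not DeepMind's code. The `Agent` class, the `"main"` and `"exploiter"` role labels, and the coin-flip `play` placeholder are all hypothetical, and the reinforcement learning update that would follow each real game is left out.

```python
import random
from dataclasses import dataclass


@dataclass
class Agent:
    """A league member; the "main"/"exploiter" role labels are hypothetical."""
    name: str
    role: str
    wins: int = 0
    games: int = 0


def pick_opponent(agent, league, rng):
    """Exploiters only face main agents; main agents face anyone but themselves."""
    if agent.role == "exploiter":
        pool = [a for a in league if a.role == "main"]
    else:
        pool = [a for a in league if a is not agent]
    return rng.choice(pool)


def play(a, b, rng):
    """Placeholder for a real StarCraft II match: the winner is a coin flip."""
    winner = rng.choice((a, b))
    a.games += 1
    b.games += 1
    winner.wins += 1
    # A real system would now update the agents' networks with reinforcement learning.
    return winner


def run_league(league, rounds, seed=0):
    """Each round, every agent plays one game against an opponent chosen by role."""
    rng = random.Random(seed)
    history = []
    for _ in range(rounds):
        for agent in league:
            opponent = pick_opponent(agent, league, rng)
            winner = play(agent, opponent, rng)
            history.append((agent.name, opponent.name, winner.name))
    return history


league = [
    Agent("main_zerg", "main"),
    Agent("main_protoss", "main"),
    Agent("exploiter_1", "exploiter"),
]
history = run_league(league, rounds=10)
```

The key design choice is that exploiters never waste games on each other: their only job is to beat the main agents, so whatever they find shows up directly in the main agents' losses.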

To compete fairly, DeepMind restricted AlphaStar's capabilities, ensuring, for example, that it could not act at superhuman speeds, a response to earlier criticism. This also proved crucial to the AI's success: instead of beating humans through speed alone, it was forced to learn winning long-term strategies.
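One simple way to enforce a speed cap like this is a sliding-window rate limiter, which rejects any action beyond a fixed budget within a rolling time window. The Python sketch below is illustrative only: the article does not give DeepMind's actual limits, so the `ActionRateLimiter` class and the numbers used with it are hypothetical, and DeepMind's real mechanism may well differ.

```python
from collections import deque


class ActionRateLimiter:
    """Hypothetical cap: at most `max_actions` actions in any rolling `window` seconds."""

    def __init__(self, max_actions, window):
        self.max_actions = max_actions
        self.window = window
        self._times = deque()  # timestamps of accepted actions, oldest first

    def try_act(self, now):
        """Return True and record the action if the budget allows it, else False."""
        # Forget actions that have slid out of the rolling window.
        while self._times and now - self._times[0] >= self.window:
            self._times.popleft()
        if len(self._times) < self.max_actions:
            self._times.append(now)
            return True
        return False


limiter = ActionRateLimiter(max_actions=3, window=5.0)
results = [limiter.try_act(t) for t in (0.0, 1.0, 2.0, 3.0, 5.0)]
```

With a budget of three actions per five seconds, the limiter accepts actions at 0, 1 and 2 seconds, rejects one at 3 seconds, and accepts again at 5 seconds once the action from time 0 has aged out of the window.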

When it came time to play, the three trained neural networks were pitted against human players on Blizzard's Battle.net platform, with their identity kept hidden until after each game.

As a result, the neural networks attained Grandmaster status with each of the three races.

Some pro gamers have mixed feelings about AlphaStar claiming Grandmaster status, most likely because the AI is not human.

Raza "RazerBlader" Sekha, one of the UK's top three StarCraft II professional players, said that the neural networks were "impressive" but occasionally did not know how to respond. For example, he said that when an opponent built an army made up entirely of air units, AlphaStar didn't know what to do.

"It didn't adapt its play and ended up losing," he said.

Joshua "RiSky" Hayward, another of the UK's top players, said that AlphaStar's behavior was atypical for a Grandmaster.

"It often didn't make the most efficient, strategic decisions," he said, "but it was very good at executing its strategy and doing lots of things all at once, so it still got to a decent level."

But despite its weaknesses and some disagreement about the Grandmaster title, DeepMind has indeed shown yet another achievement in AI development.

"One of the key things we're really excited about is that StarCraft raises a lot of challenges that you actually see in real-world problems," said Silver, who leads the lab's reinforcement learning research group. "We see StarCraft as a benchmark domain to understand the science of AI, and advance in our quest to build better AI systems."

DeepMind said that AlphaStar would help the company develop other AI tools that should ultimately benefit humanity.

DeepMind says that examples of technologies that might one day benefit from its new insights include robots, self-driving cars and virtual assistants, which all need to make decisions based on "imperfectly observed information".

"There's still an open research question, as to how to do something like AlphaStar Zero, which could fully learn for itself without human data," Silver added.

Asked whether this kind of AI could eventually be developed for military use, given Google's past controversy over Project Maven, Silver said:

"To say that this has any kind of military use is saying no more than to say an AI for chess could be used to lead to military applications. Our goal is to try and build general purpose intelligences [but] there are deeper ethical questions which have to be answered by the community."

As with DeepMind's AI that played Quake III Arena, OpenAI's Dota 2 bots that defeated human players, Tencent's AI that can also play StarCraft II, and other game-playing agents, the goal of this type of AI research is not merely to defeat humans at games to prove it can be done.

Instead, it's to prove that with enough time, effort, and resources, sophisticated AI software can best humans at virtually any competitive cognitive challenge.