Computers are getting smarter and smarter. With more space to store data, more sources of input, and better interpretation of the code within their systems, computers are getting better at what they do best: doing what they are told. As we exploit AI (artificial intelligence) more and more, we're also trying to make computers 'think and reason', not just do what they're told.

As computers become smarter, they can complete tasks a lot faster than humans. On a basic level, computers perform perfectly well as long as all the parameters are explained to them clearly. Computers do what they're told, and that is what they do best.

Tech companies have been racing each other in AI to create systems that can perform the way humans do. One such ability is the most basic form of understanding an environment: image identification.

Humans can recognize an image, or a picture, in less time than it takes to blink. In the past, this simple human task was impossible for computers. But researchers agreed that computers would eventually get there.

Image identification is one of the most basic things a person can do. But for computers, this simple task is not that easy.

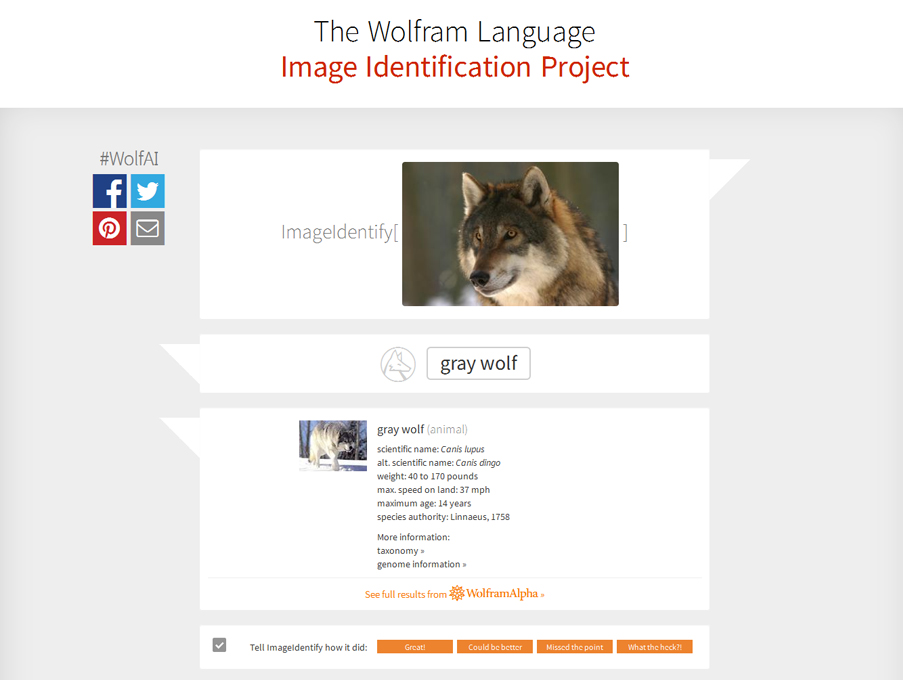

On May 13th, 2015, Wolfram Alpha, the computational knowledge engine or answer engine developed by Wolfram Research, announced a milestone: a function it calls ImageIdentify, which gives computers the intelligence to identify images.

Built into the Wolfram Language, it allows people to ask "what is this picture of?" and get an answer. On that day, the project was launched on the web to let anyone easily submit any picture and see what ImageIdentify thinks it is.

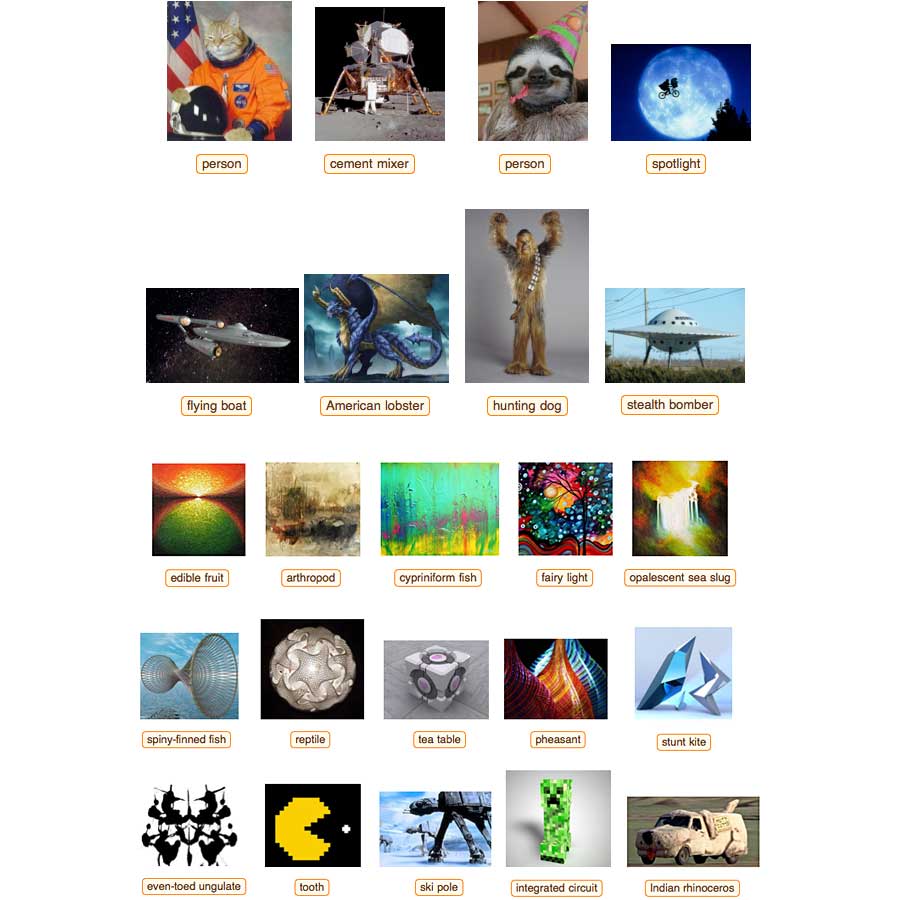

Wolfram's ImageIdentify is smart. Although it won't always get things right, it does remarkably well. And what fascinates the team about it is that it has brought AI a lot closer to humans.

ImageIdentify is one practical example of AI. With it, the team that created it has reached the point where it can integrate this kind of AI operation into the Wolfram Language and use it as a new building block for knowledge-based programming.

With ImageIdentify built right into the Wolfram Language, it's easy for developers to create APIs or apps that use it. These can all be integrated with cloud computation as well as websites.

Neural Network and Creating a Digital Brain

Living things are made of cells, which group together to form tissues. Different types of tissue make up an organ, and different organs working together form a system. A human is made up of many systems working together to keep the body alive.

A human brain is a complex organ. It consists of certain types of tissue molded together into the grey matter that commands almost everything the body wants and needs. With the help of many hormones and nerves, the organ is connected to different parts of the body, which act as sources of input that feed it information from the outside world to be processed.

The brain is probably the most sophisticated organ a living thing can have. It has networks that consist of many layers, connected by neurons.

As Wolfram has described, ImageIdentify is much like a human brain in that it consists of many layers. It can be difficult to say meaningful things about what's going on inside Wolfram's "head", but in the first two layers, one can recognize some of the features it's picking out. And they're similar to what real neurons pick out in the primary visual cortex.
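To make the idea of an early layer "picking out features" a little more concrete, here is a minimal sketch in Python. It is not Wolfram's network: the filter `VERTICAL_EDGE`, the tiny 4x4 image and the helper names are all made up for illustration, and a real network learns its filters from training images rather than having them written by hand. The sketch only shows how sliding a small filter over an image produces a strong response where a particular feature, here a dark-to-bright vertical edge, appears.

```python
from typing import List

Grid = List[List[float]]


def convolve2d(image: Grid, kernel: Grid) -> Grid:
    """Slide `kernel` over `image` (fully overlapping positions only)
    and sum the element-wise products at each position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map: Grid) -> Grid:
    """Keep positive responses and set negative ones to zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Hypothetical hand-written filter that responds to dark-to-bright
# vertical edges. A trained network would learn filters like this.
VERTICAL_EDGE: Grid = [
    [-1.0, 1.0],
    [-1.0, 1.0],
]

# Made-up 4x4 image: dark left half (0.0), bright right half (1.0).
image: Grid = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]

# The response is non-zero only in the column where the edge sits.
response = relu(convolve2d(image, VERTICAL_EDGE))
for row in response:
    print(row)
```

A deeper network stacks many such layers, so that later layers respond to combinations of the simple features found by earlier ones.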

No matter how similarly both "brains" work, there are always differences between computational and biological neural networks. One of the most obvious is that a biological network feeds on nutrients carried by blood, while a computational one runs on electricity. But both can be trained.

In the actual development of ImageIdentify, it was trained on a few tens of millions of images as a start. That number seems very comparable to the number of distinct views of objects that humans get in the first couple of years of their lives.

Humans can instantly recognize a few thousand kinds of things, each named by a noun in human languages. The smaller a living thing's brain mass, the fewer kinds of things it can distinguish among what it sees.

What people at Wolfram aim for with ImageIdentify is "human-like" image identification that effectively maps images to words that exist in human languages. This defines a certain scale of problem, which, it appears, can be solved with a "human-scale" neural network.
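The step of mapping an image to a word can be sketched roughly as follows. This is an assumption about how image classifiers commonly work, not a description of ImageIdentify's internals: the label list, the scores and the function names are hypothetical. The idea is that the last layer of a network produces one score per known word, and the scores are turned into probabilities so the most likely word can be reported.

```python
import math
from typing import List, Tuple


def softmax(scores: List[float]) -> List[float]:
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(scores)  # subtracted for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def identify(scores: List[float], labels: List[str]) -> Tuple[str, float]:
    """Return the most probable label and its probability."""
    probs = softmax(scores)
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], probs[best]


# Hypothetical vocabulary; a human-scale system would have thousands of nouns.
LABELS = ["cat", "dog", "teapot"]

# Made-up final-layer scores for one image, one score per label.
scores = [2.0, 0.5, -1.0]

word, confidence = identify(scores, LABELS)
print(f"{word} ({confidence:.2f})")
```

Because the network always picks from the words it knows, a picture of something outside its vocabulary still gets the closest-scoring word, which is one way such a system can end up with confident but odd answers.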

Wolfram Doing Things the Wolfram Way

Search engines are among the most popular places on the web. With them, people can search for almost anything imaginable.

Reverse image search is nothing new. Many search engines have been working on it for years and have spent all that time perfecting it. Google, the most popular search engine, lets users upload an image to its image search and returns results. Other niche image search engines do something quite similar.

Wolfram Alpha venturing into this territory is nothing new either, but its ImageIdentify project works the Wolfram way: instead of showing the data behind the answer, it shows what it thinks about the image. So what Wolfram built is not something that understands what we expect it to understand, but something that forms its own opinion based on what it knows.

Some of ImageIdentify's results can be hilarious, or even absurd, but when it does guess correctly, it could well be a turning point in how people see computers in the years to come.