Artificial intelligence, or AI, is one of the most anticipated technologies of the future. It aims to make computers smarter by having them learn things the way humans do.

And DeepMind, a Google-owned division that focuses on developing AI-based technologies, is among the biggest players in the industry.

After creating numerous AIs, including one that could learn how to win board games and another that could win those games without even knowing the rules, the company is stepping things up a notch.

This time, it looks like DeepMind is experimenting with AI beyond just board games.

In a blog post, the company explained how it developed a fully articulated humanoid character to traverse obstacle courses.

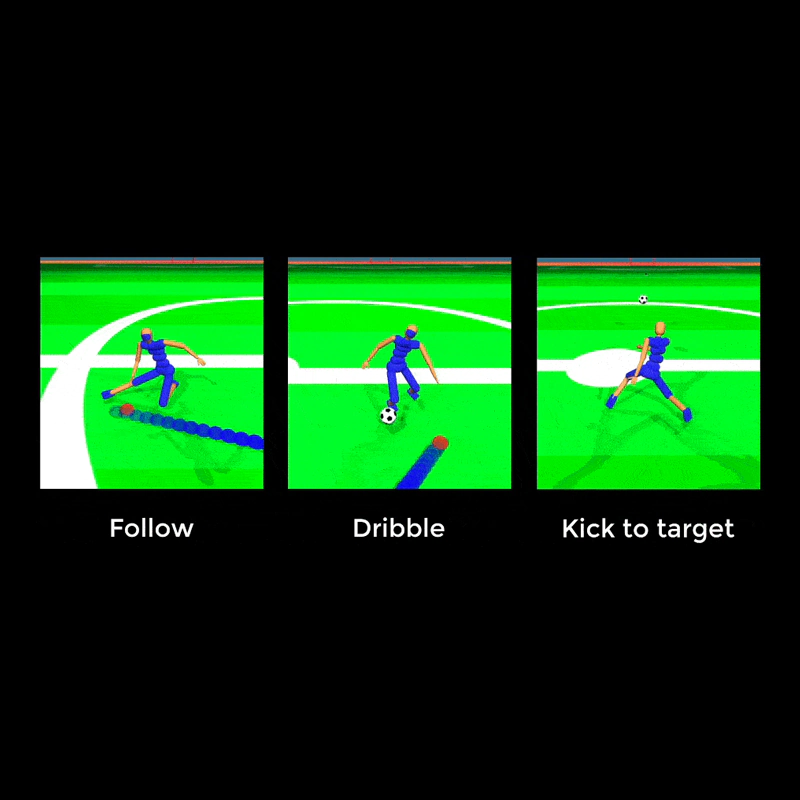

The company then applied that experience and knowledge, using "human and animal motions to teach robots to dribble a ball, and simulated humanoid characters to carry boxes and play football."

To tackle what it calls "embodied intelligence," DeepMind demonstrated what reinforcement learning (RL) can achieve through trial and error.

In this case, DeepMind reuses previously learned behaviors so that the AI agent doesn't need a significant amount of data to "get off the ground."

However, the result is what the company calls idiosyncratic behaviors: the agent eventually learned to navigate obstacle courses, but did so with "amusing" movement patterns.

This happens because the AI doesn't understand how joints work or how they should move for it to keep its balance. Unlike humans and animals, computers don't have a thorough understanding of this kind of movement.

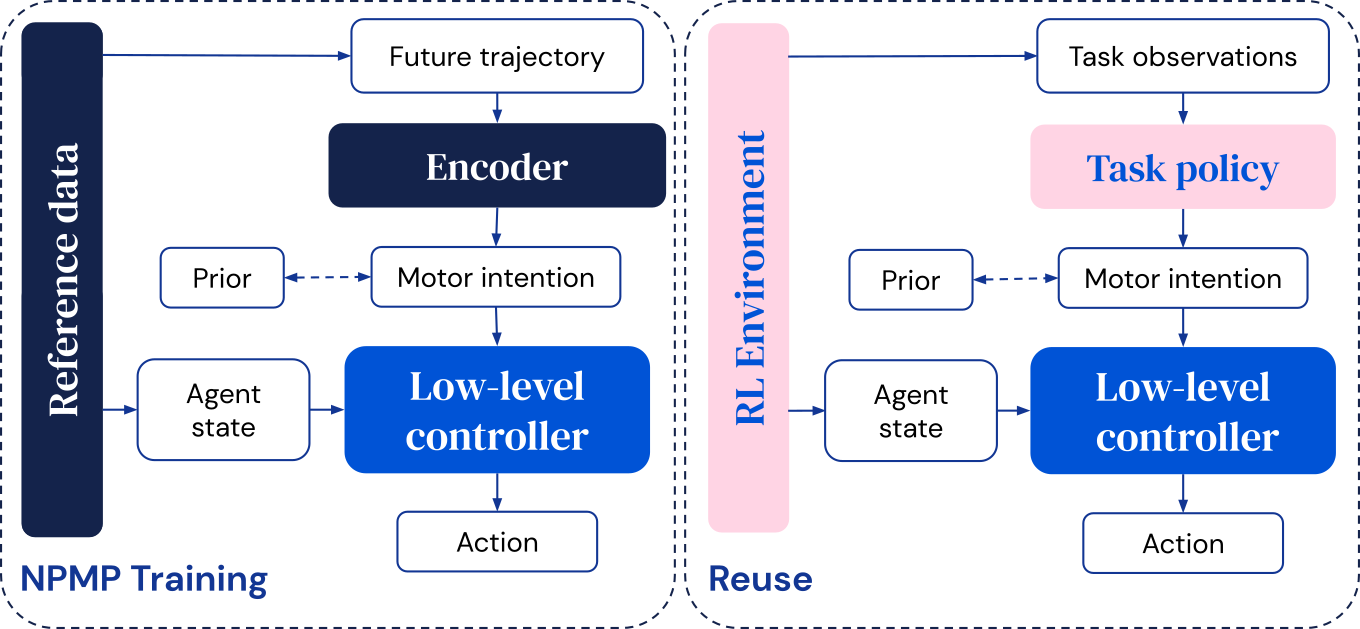

To solve both challenges, DeepMind came up with what it calls "neural probabilistic motor primitives" (NPMP).

Per the blog post:

Long story short, the team at DeepMind essentially created an AI system that can learn how to do things like dribbling a ball, carrying boxes, and playing football inside of a physics simulator by watching videos of other agents performing those tasks.

According to the team’s research paper, DeepMind optimized teams of agents to play simulated football via reinforcement learning, constraining the solution space to that of plausible movements learned using human motion capture data.
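The idea of constraining the solution space can be illustrated with a toy sketch. This is not DeepMind's NPMP implementation: the `PRIMITIVES` table, the four made-up joints, and the `decode` function are all hypothetical, and a real system would learn its motion patterns from motion capture data rather than use hand-written numbers. The sketch only shows the general principle that a task policy picks a small "latent" command, and a fixed decoder turns it into joint targets that stay within a bounded blend of known movements.

```python
from typing import List, Sequence

# Hypothetical motion primitives: each row is a pattern of target angles
# (radians) for four made-up joints. The numbers are invented for illustration;
# they are not taken from any motion capture data.
PRIMITIVES: List[List[float]] = [
    [0.5, -0.3, 0.0, 0.1],   # e.g. "swing left leg"
    [0.0, 0.1, 0.5, -0.3],   # e.g. "swing right leg"
]


def decode(latent: Sequence[float]) -> List[float]:
    """Map a low-dimensional latent command to joint targets.

    Each latent weight is clipped to [0, 1], so every output is a bounded
    blend of the primitives rather than an arbitrary joint configuration.
    """
    if len(latent) != len(PRIMITIVES):
        raise ValueError("latent must have one weight per primitive")
    weights = [min(max(w, 0.0), 1.0) for w in latent]
    n_joints = len(PRIMITIVES[0])
    return [
        sum(w * prim[j] for w, prim in zip(weights, PRIMITIVES))
        for j in range(n_joints)
    ]


# A task policy would choose `latent`; it never sets joint angles directly.
left_only = decode([1.0, 0.0])
clipped = decode([5.0, -2.0])   # out-of-range weights are clipped to [1.0, 0.0]
```

Because the policy can only act through `decode`, even a wildly out-of-range command such as `[5.0, -2.0]` ends up as a movement the primitives allow.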

To make this happen, DeepMind trained the robots in a simulated world designed to include gravity, unexpectedly slippery surfaces, and unplanned interference from other agents.

The point of the exercise isn’t to build a better football player. Instead, the method is meant to help the AI and its developers figure out how to optimize the agents’ ability to predict outcomes.

When the team first deployed the agent, the AI could barely move its physics-based humanoid body around the field. But by rewarding the agent every time its team scored a goal, the model was able to get the figures up and walking, clumsily at first.

Later, the agent managed to learn how to run, and even play incredibly well, by learning how to predict where the ball would go.

And after several days of training, the AI was also able to predict how other agents would react to its movement.
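To give a feel for how a reward that only arrives when a goal is scored can still shape behavior through trial and error, here is a minimal sketch using tabular Q-learning. It is a deliberately tiny stand-in and not DeepMind's method: the one-dimensional "pitch", the constants, and the function names are all assumptions made for illustration, and the real agents control full humanoid bodies in a physics simulator.

```python
import random

FIELD_LENGTH = 6          # positions 0..5; the "goal" is at position 5 (toy assumption)
ACTIONS = (-1, +1)        # step left or step right


def train(episodes=2000, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning on a 1-D pitch with a reward only when a goal is scored."""
    rng = random.Random(seed)
    goal = FIELD_LENGTH - 1
    q = [[0.0, 0.0] for _ in range(FIELD_LENGTH)]

    for _ in range(episodes):
        pos = 0
        for _ in range(100):  # cap the episode length
            # Trial and error: explore at random sometimes, and break ties at random.
            if rng.random() < epsilon or q[pos][0] == q[pos][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[pos][0] > q[pos][1] else 1
            nxt = min(max(pos + ACTIONS[a], 0), goal)
            scored = nxt == goal
            reward = 1.0 if scored else 0.0   # sparse reward: only goals count
            target = reward if scored else gamma * max(q[nxt])
            q[pos][a] += alpha * (target - q[pos][a])
            pos = nxt
            if scored:
                break
    return q


def greedy_policy(q):
    """Best action (a step of -1 or +1) for every non-goal position."""
    return [ACTIONS[0] if row[0] > row[1] else ACTIONS[1] for row in q[:-1]]


q_table = train()
policy = greedy_policy(q_table)
```

Early episodes are close to a random walk, much like the clumsy first steps described above. Once a goal is scored by chance, the reward propagates backwards through the table and the greedy policy settles on moving toward the goal from every position.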

As explained by the team at DeepMind, the result is "a team of coordinated humanoid football players that exhibit complex behavior at different scales, quantified by a range of analysis and statistics, including those used in real-world sport analytics."

"Our work constitutes a complete demonstration of learned integrated decision-making at multiple scales in a multiagent setting."

It's worth noting, though, that DeepMind didn't really teach the AI how to do anything in real life.

This is because everything was done in a simulated world, and what DeepMind did was 'brute-force' movement within the boundaries of that simulated world.