In 2023, the social media platform then known as Twitter partially open-sourced its recommendation algorithm for the first time.

At the time, Tesla billionaire Elon Musk had only recently acquired the platform, which he later renamed X, and claimed to be on a mission to restructure it and make it more transparent.

Our “algorithm” is overly complex & not fully understood internally. People will discover many silly things, but we’ll patch issues as soon as they’re found!

We’re developing a simplified approach to serve more compelling tweets, but it’s still a work in progress. That’ll also…— Elon Musk (@elonmusk) March 17, 2023

In early 2026, Musk announced on X that the platform would make its new recommendation algorithm fully open source.

"We will make the new algorithm, including all code used to determine what organic and advertising posts are recommended to users, open source in 7 days," he posted. He added that this process would repeat every four weeks, accompanied by comprehensive developer notes to clarify any changes.

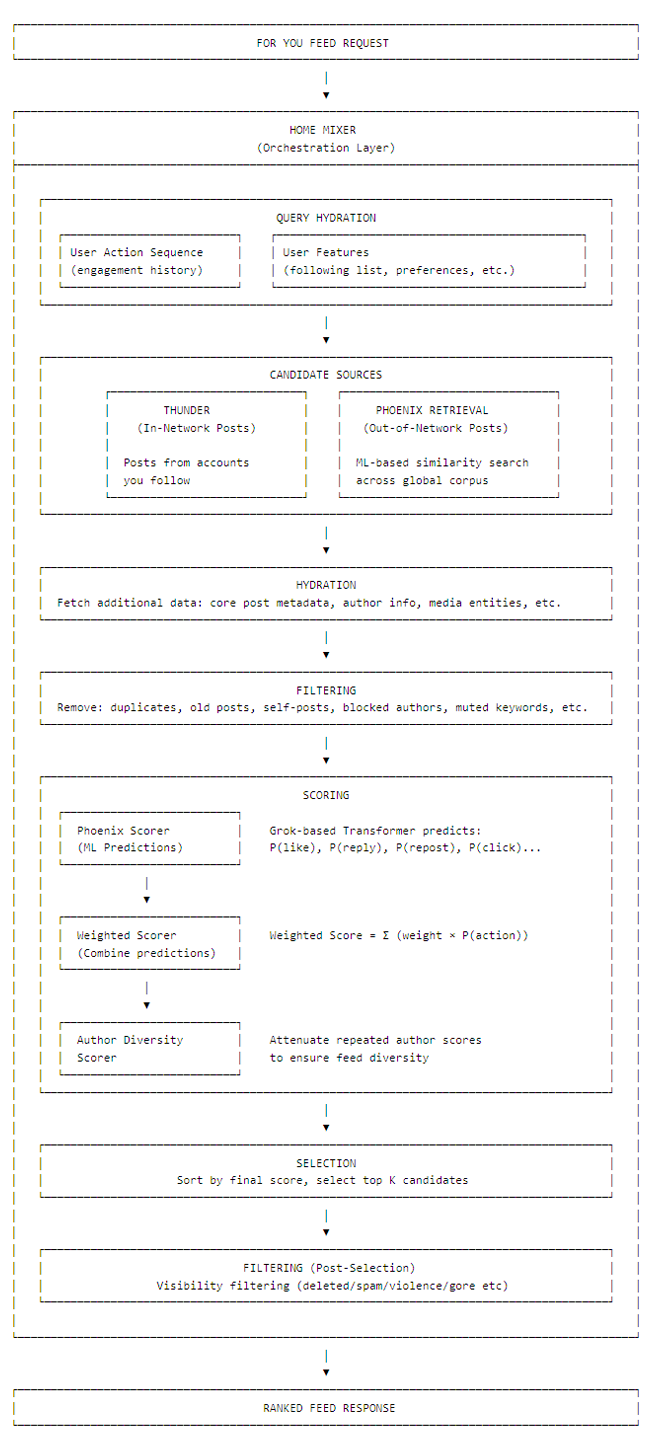

X fulfilled that promise by mid-January, releasing the code on GitHub along with a detailed write-up and a diagram explaining the feed-generation process.

We will make the new algorithm, including all code used to determine what organic and advertising posts are recommended to users, open source in 7 days.

This will be repeated every 4 weeks, with comprehensive developer notes, to help you understand what changed.— Elon Musk (@elonmusk) January 10, 2026

The algorithm is indeed sophisticated.

As many would expect from a social media platform with such a global presence, X's algorithm draws on a user's engagement history, such as clicked posts, and scans recent content from followed accounts. It then applies machine-learning analysis to identify appealing posts from outside the user's network, while filtering out blocked accounts, muted keywords, and material flagged as violent or spammy.

Posts are then ranked by relevance, content diversity, and predicted engagement, such as likes, replies, or reposts.
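To make the flow described above more concrete, here is a minimal Python sketch of a gather, filter, and rank pipeline. It is not X's code: the `Post` class, the `WEIGHTS` values, and the function names are all hypothetical, the predicted engagement probabilities are made up, and the diversity step mentioned in the write-up is left out for brevity.

```python
from dataclasses import dataclass, field


@dataclass
class Post:
    post_id: int
    author: str
    text: str
    # Hypothetical model outputs: predicted probability of each engagement type.
    predicted: dict = field(default_factory=dict)


# Hypothetical weights; the article does not say how X combines its predictions.
WEIGHTS = {"like": 1.0, "reply": 2.0, "repost": 1.5}


def is_allowed(post, blocked_authors, muted_keywords):
    """Drop posts from blocked accounts or containing a muted keyword."""
    if post.author in blocked_authors:
        return False
    text = post.text.lower()
    return not any(word.lower() in text for word in muted_keywords)


def score(post):
    """Weighted sum of the predicted engagement probabilities."""
    return sum(WEIGHTS[kind] * post.predicted.get(kind, 0.0) for kind in WEIGHTS)


def build_feed(in_network, out_of_network, blocked_authors, muted_keywords, limit=10):
    """Merge both candidate sources, filter them, then rank by score."""
    candidates = list(in_network) + list(out_of_network)
    allowed = [p for p in candidates if is_allowed(p, blocked_authors, muted_keywords)]
    return sorted(allowed, key=score, reverse=True)[:limit]


# Toy data: posts from followed accounts and posts discovered outside the network.
posts_followed = [
    Post(1, "alice", "New paper on transformers", {"like": 0.30, "reply": 0.05}),
    Post(2, "bob", "Crypto giveaway!!!", {"like": 0.90, "repost": 0.50}),
]
posts_discovered = [
    Post(3, "carol", "Benchmarking feed ranking", {"like": 0.20, "reply": 0.20, "repost": 0.10}),
    Post(4, "dave", "Lunch photo", {"like": 0.10}),
]

feed = build_feed(
    posts_followed,
    posts_discovered,
    blocked_authors={"dave"},
    muted_keywords={"crypto"},
)
print([p.post_id for p in feed])
```

In this toy run, the post with the muted keyword and the post from the blocked author never reach the ranking step, no matter how high their predicted engagement is, which is the ordering of stages the write-up describes.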

Notably, the entire setup relies on an AI-driven approach powered by a "Grok-based transformer" that learns directly from user interaction sequences, eliminating manual feature engineering for relevance and simplifying data pipelines.

This latest transparency effort echoes Musk's earlier push in 2023, when X (then Twitter) partially open-sourced its algorithm shortly after his acquisition, describing it as ushering in "a new era of transparency."

Back then, Musk noted that providing "code transparency" would be "incredibly embarrassing at first" but would "lead to rapid improvement in recommendation quality," with the ultimate goal to "earn your trust."

Critics at the time dismissed the release as “transparency theater,” arguing it was incomplete and revealed little about the platform's inner operations.

The 2026 release arrives against a backdrop of mounting regulatory and ethical pressure.

In December 2025, EU regulators imposed a $140 million fine on X for breaching transparency rules under the Digital Services Act, specifically citing how the verification check mark system obscured account authenticity and made it harder for users to assess credibility.

The open-sourcing appears partly aimed at addressing such scrutiny by offering greater insight into content ranking and personalization.

Yet the decision has sparked fresh concerns, particularly around user privacy and anonymity.

Security researchers and analysts examining the released code have highlighted features like the "User Action Sequence," a transformer-based module that encodes a user's complete behavioral history, including precise details such as scrolling pause durations in milliseconds, interaction timings, content preferences, blocks, and mutes.

This creates a high-fidelity behavioral fingerprint used to predict engagement and tailor feeds. With the code now public, malicious actors could exploit these mechanisms through techniques like “Candidate Isolation” to match patterns across accounts, potentially linking anonymous or alternate profiles to real identities even when usernames change.

Experts warn that this removes a crucial layer of obscurity from privacy protections.

"All someone needs is the action sequence encoder (which the X repo just handed over), an embedding similarity search, and a little bit of luck," noted OSINT expert @Harrris0n in a lengthy threaded post on X.
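The "embedding similarity search" in that quote is a generic technique, and a toy Python sketch shows why researchers consider it enough to raise concern. Everything here is an assumption for illustration: the four-dimensional vectors are invented rather than produced by X's encoder, and the account names and helper functions are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def most_similar(query, candidates):
    """Return (name, similarity) for the candidate vector closest to the query."""
    return max(
        ((name, cosine_similarity(query, vec)) for name, vec in candidates.items()),
        key=lambda pair: pair[1],
    )


# Invented "behavior embeddings"; real ones would come from a trained encoder.
known_account = [0.9, 0.1, 0.4, 0.7]
pseudonymous_accounts = {
    "anon_a": [0.1, 0.8, 0.2, 0.1],
    "anon_b": [0.88, 0.12, 0.41, 0.69],
    "anon_c": [0.5, 0.5, 0.5, 0.5],
}

name, similarity = most_similar(known_account, pseudonymous_accounts)
print(name, round(similarity, 3))
```

A high similarity score is only a statistical hint, not proof that two accounts share an owner. Unrelated people with similar habits can also land close together, which is presumably why the quote includes "a little bit of luck."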

X open-sourced their algorithm and I'm going to use it to find alt accounts

Not through network analysis or username patterns

Through behavioral fingerprints

Here's the idea— harry (@Harrris0n) January 20, 2026

Such fingerprinting could unmask whistleblowers, journalists, activists, or others relying on pseudonymous accounts for safety and free expression.

“You can easily change your username, but it’s much harder to change your habits,” the analysis emphasized, underscoring how persistent behavioral signals make true anonymity elusive.

These risks extend beyond X, as similar patterns might correlate across platforms.

X uses this to predict what content you'll engage with

But that same encoding is a behavioral fingerprint

And here's the thing about fingerprints:

You can't change them by switching accounts— harry (@Harrris0n) January 20, 2026

Compounding the scrutiny are ongoing controversies surrounding Grok, the xAI-developed chatbot deeply integrated into X's recommendation system.

What goes into the fingerprint:

- Timing patterns (when do they post)

- Engagement targets (who do they interact with)

- Content patterns (what topics, what tone)

- Reaction patterns (what triggers them to respond)

You can change your name but you can't change your habits— harry (@Harrris0n) January 20, 2026

Where this gets interesting:

Cross-platform

Encode someone's Twitter behavior, find their Reddit alt

Encode their Telegram, find their Discord

Same person, same fingerprint, different platforms— harry (@Harrris0n) January 20, 2026

In recent months, Grok has faced criticism and investigations from the California Attorney General’s office and congressional lawmakers over its role in generating and distributing sexualized content, including nonconsensual digitally altered images of women and material involving minors.

The reliance on a "Grok-based transformer" for core algorithmic functions ties these issues together, raising questions about whether the open-sourcing truly advances accountability or merely shifts focus amid broader platform challenges.

Musk, for his part, has described the algorithm as "dumb" and in need of "massive improvements," positioning the public releases as a unique, real-time display of progress: "No other social media companies do this."

The initiative highlights the double-edged nature of transparency in an era of AI-driven platforms. What promises greater openness and community-driven refinement also exposes vulnerabilities that could undermine the very privacy many users depend on.
