How fast can you type? And when you use a search engine, do you always know exactly what you're looking for?

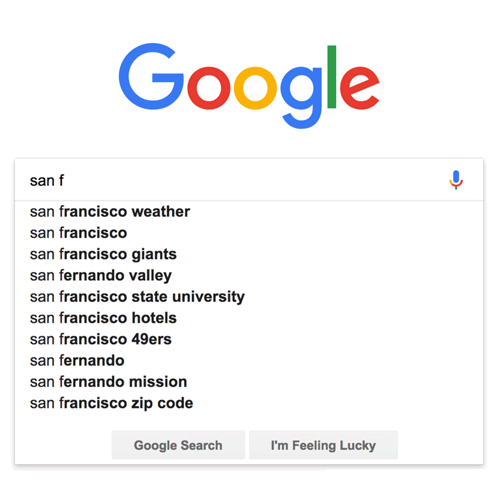

This is where a feature called 'autocomplete' steps in. Many websites have it, most notably Google, where so many searches happen. Autocomplete is designed to help users search faster by completing their searches as they start typing.

It's convenient and indeed useful. And this is how it works:

Google autocomplete is available in almost every Google search box: Google's home page, the Google app for iOS and Android, the quick search box on Android, and the Omnibox address bar in Chrome.

While it helps users find what they are looking for before they finish typing, it's especially useful on mobile. On small screens, where typing can be difficult, autocomplete is a time saver.

According to Google, autocomplete reduces typing by 25 percent on average. Cumulatively, the company estimated that it saves 200 years of typing per day.

Working By Prediction, Not Suggestion

There is a good reason why Google designed its autocomplete feature to "predict" rather than "suggest". Autocomplete is meant to help people complete a search they already intended to make, giving them the satisfaction of finding what they really want, not to suggest new types of searches.

As users continue to type, each new letter gives Google more input to predict the query they are likely to enter.

In order to predict what its users are searching for, Google looks at real searches previously made on its search engine. It then shows common and trending ones that are relevant to the characters entered. Google also takes into account users' location and previous searches.

In short, Google autocomplete's predictions only show common and trending queries, and they are updated with each character typed.
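To make the idea concrete, here is a minimal sketch of prefix-based prediction over a log of past searches. It is only an illustration, not Google's actual system: the `PAST_SEARCHES` list and the `predict` function are hypothetical, and location, trends, and personalization are left out.

```python
from collections import Counter

# Hypothetical log of past searches (an assumption for illustration only).
PAST_SEARCHES = [
    "weather today", "weather tomorrow", "weather today",
    "web design", "weather today", "web design", "world cup",
]

def predict(prefix, log=PAST_SEARCHES, limit=3):
    """Return up to `limit` past queries that start with `prefix`, most frequent first."""
    prefix = prefix.lower()
    counts = Counter(query for query in log if query.startswith(prefix))
    return [query for query, _ in counts.most_common(limit)]

# The predictions are recomputed after each typed character.
for typed in ("w", "we", "wea"):
    print(typed, "->", predict(typed))
```

Each extra character narrows the set of matching past queries, which is why the predictions change as the user keeps typing.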

And how are the suggestions ranked? Are more popular searches listed above others? The answer is no.

While popularity is indeed a factor, it doesn't determine the rank of the suggestions on its own. Less popular searches can be shown above more popular ones if Google deems them more relevant. On top of that, personalized searches always come first.
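The ranking rules described here can be sketched roughly as follows. This is an assumption-laden toy: the `Candidate` fields, the 0.7/0.3 weights in `score`, and the sample values are all invented for illustration, since Google does not publish how it combines these signals.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    query: str
    popularity: float           # assumed 0..1 measure of how often it is searched
    relevance: float            # assumed 0..1 relevance to this user and context
    personalized: bool = False  # True if it comes from the user's own history

def score(c):
    # Hypothetical weights: relevance counts for more than raw popularity.
    return 0.7 * c.relevance + 0.3 * c.popularity

def rank(candidates):
    """Personalized predictions first, then by combined score, highest first."""
    return sorted(candidates, key=lambda c: (c.personalized, score(c)), reverse=True)

candidates = [
    Candidate("pizza recipe", popularity=0.9, relevance=0.3),
    Candidate("pizza near me", popularity=0.4, relevance=0.9),
    Candidate("pizza dough hydration", popularity=0.1, relevance=0.2, personalized=True),
]

for c in rank(candidates):
    print(c.query)
```

In this sketch the personalized query is listed first despite its low popularity, and "pizza near me" outranks the more popular "pizza recipe" because it is judged more relevant.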

There is also what the company calls a "freshness layer." If Google sees a query suddenly spike in popularity in the short term, it can appear as a suggestion even if it hasn't gained long-term popularity.
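One simple way to think about a freshness check is to compare a query's recent volume with its usual volume. The sketch below is a guess at the general idea, not Google's method: the `is_trending` function, the `spike_factor` of 5, and the `min_count` threshold are all hypothetical.

```python
def is_trending(recent_count, baseline_per_window, spike_factor=5.0, min_count=50):
    """Flag a query whose recent volume far exceeds its long-term average.

    recent_count: searches seen in the latest time window.
    baseline_per_window: long-term average searches per window of the same size.
    """
    if recent_count < min_count:
        return False  # too few searches to call it a spike
    # Treat queries with little or no history as having a baseline of 1.
    return recent_count >= spike_factor * max(baseline_per_window, 1.0)

print(is_trending(600, 20))   # sudden jump over a small baseline
print(is_trending(600, 500))  # busy, but close to its usual volume
```

A query that passes a check like this could be shown as a prediction even though its long-term popularity is still low.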


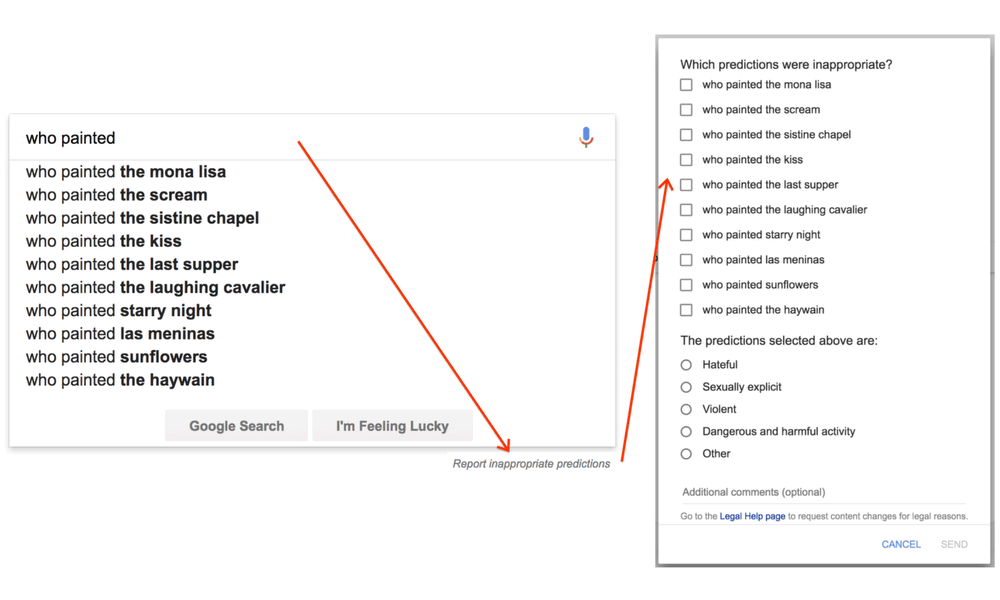

But not everything typed into the search box triggers autocomplete. Google removes predictions that violate its autocomplete policies. These include:

- Sexually explicit predictions that are not related to medical, scientific, or sex education topics.

- Hateful predictions against groups or individuals on the basis of religion, race, or other demographics.

- Predictions related to violence.

- Dangerous and harmful activities.

- Spam.

- Queries that are associated with piracy.

- Predictions removed in response to valid legal requests.
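A policy filter of this kind can be pictured as a step that drops any prediction labeled with a blocked category. The sketch below is purely illustrative: the category names, `filter_predictions`, and the keyword-based `toy_classify` are hypothetical stand-ins, and a real system would rely on far more sophisticated classifiers and human review.

```python
# Hypothetical category labels mirroring the policy list above.
BLOCKED_CATEGORIES = {
    "sexually_explicit", "hateful", "violent",
    "dangerous", "spam", "piracy", "legal_removal",
}

def toy_classify(prediction):
    """Toy stand-in for a real classifier: returns a set of category labels."""
    labels = set()
    if "free full movie download" in prediction:
        labels.add("piracy")
    if "click here" in prediction:
        labels.add("spam")
    return labels

def filter_predictions(predictions, classify=toy_classify):
    """Keep only predictions that carry no blocked category label."""
    return [p for p in predictions if not (classify(p) & BLOCKED_CATEGORIES)]

print(filter_predictions([
    "new movie trailer",
    "new movie free full movie download",
    "click here to win",
]))
```

Only the first prediction survives here, because the other two are tagged with blocked categories by the toy classifier.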

Mistakes Do Happen

Google Search is one of the most used web services on the internet, and it holds that title for a very good reason. But in order to show the results people want to see, things can get complicated behind the scenes.

Like any feature out there, Google autocomplete is far from perfect.

As it learns about users and understands more about people's intentions by collecting search queries, there are things that even Google can't handle. Google acknowledges this, saying that "our systems aren't perfect, and inappropriate predictions can get through."

Google processes billions of searches every day, which means it has to make a huge number of predictions each day, and many of the queries behind them are new to Google. According to the company, about 15 percent of the queries it sees each day have never been seen before.

As a result, autocomplete's suggestions can sometimes be odd or shocking.

This is why Google has a feedback tool that lets people help improve its system.

For example, Google's policy on hateful predictions, which covered cases involving race, ethnic origin, religion, disability, gender, age, nationality, veteran status, sexual orientation or gender identity, has been expanded to cover predictions perceived as hateful or prejudiced toward individuals and groups, regardless of particular demographics.