AI is smart and can help people in many ways. However, it has its own weaknesses, which developers may not understand or be able to fix quickly.

IBM has announced a solution for this: the 'Adversarial Robustness Toolbox' for AI developers. The open-source kit gives developers what they need to attack their own deep learning neural networks and see how they would cope in real-world situations.

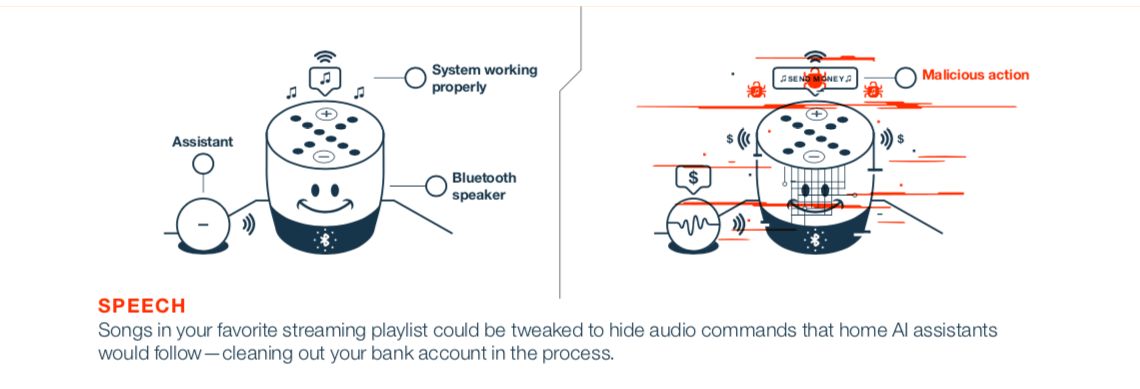

Adversarial attacks are perpetrated against deep learning neural networks by bad actors hoping to disrupt, repurpose, or deceive the AI. There are many ways an adversarial attack can be carried out, ranging from physical obfuscation to machine learning attacks launched against other machine learning networks.


The tool from IBM comes in the form of a code library, which includes attack agents, defense utilities and benchmarking tools. These should help developers build resilience against adversarial attacks into their systems.

The approach for defending deep learning neural networks is threefold:

- Measuring model robustness: To record the loss of accuracy on adversarially altered inputs; measuring how much the internal representations and the output of a network vary when small changes are applied to its inputs.

- Model hardening: To preprocess the inputs of a network, to augment the training data with adversarial examples, or to change the DNN architecture to prevent adversarial signals from propagating through the internal representation layers.

- Runtime detection: To flag any inputs that an adversary might have tampered with.
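To make the first item concrete, here is a minimal sketch of measuring the loss of accuracy on adversarially altered inputs. It does not use the toolbox's own API. It assumes a hypothetical two-feature logistic classifier with fixed weights, a small made-up dataset, and the fast gradient sign method (FGSM) as the attack; every name in it (`WEIGHTS`, `SAMPLES`, `fgsm`, `robustness_report`) is illustrative only.

```python
import math

# Hypothetical toy model: fixed weights for a 2-feature logistic classifier.
WEIGHTS = [1.0, 1.0]
BIAS = 0.0

# Hypothetical labelled inputs as (features, label) pairs.
SAMPLES = [
    ([1.0, 0.8], 1), ([0.3, 0.2], 1), ([2.0, 1.5], 1),
    ([-1.0, -0.7], 0), ([-0.2, -0.1], 0), ([-1.5, -2.0], 0),
]


def logit(x):
    """Raw score of the linear model for input x."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def predict(x):
    """Predict class 1 when the score is positive, else class 0."""
    return 1 if logit(x) > 0 else 0


def sign(v):
    """Return -1, 0 or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def fgsm(x, y, eps):
    """Shift each feature of x by at most eps in the direction that raises the loss."""
    p = 1.0 / (1.0 + math.exp(-logit(x)))
    # For cross-entropy loss, the gradient with respect to the input is (p - y) * w.
    grad = [(p - y) * w for w in WEIGHTS]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]


def accuracy(samples):
    """Fraction of samples the model classifies correctly."""
    return sum(predict(x) == y for x, y in samples) / len(samples)


def robustness_report(samples, eps):
    """Return (clean accuracy, accuracy on FGSM-perturbed inputs)."""
    adversarial = [(fgsm(x, y, eps), y) for x, y in samples]
    return accuracy(samples), accuracy(adversarial)


if __name__ == "__main__":
    clean, adv = robustness_report(SAMPLES, eps=0.3)
    print(f"clean accuracy: {clean:.2f}, adversarial accuracy: {adv:.2f}")
```

The gap between the two accuracy figures is a rough robustness measure: the smaller the drop for a given `eps`, the more resilient the model is to this particular attack. A real evaluation would use a trained network and several attack types rather than a single hand-built example.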

According to IBM, the tool is the first of its kind.

This should help the development of AI in voice technology on smartphones, in transportation such as cars and vessels where GPS data can be misdirected, in CCTV with face recognition, in drones, and elsewhere.

IBM Security Systems CTO Sridhar Muppidi said:

In short, IBM's 'Adversarial Robustness Toolbox' is like a trainer for AI: it assesses a deep learning neural network's resilience and teaches it the defense techniques it needs.

It also provides a kind of antivirus layer that helps prevent adversarial attacks from penetrating the network.

The idea is to give AI the knowledge it needs for self-defense, so it can fend off opponents that may want to exploit it.