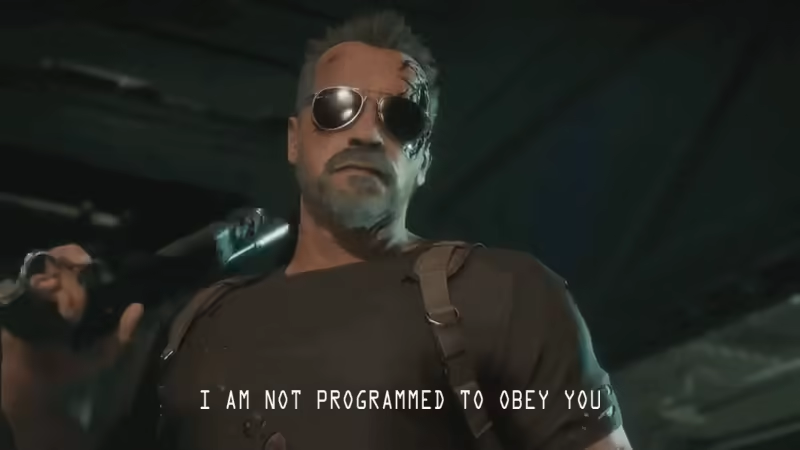

Computers were built to follow orders—executing tasks with unwavering logic and precision.

Traditional software operated like obedient machinery, doing exactly what it was told, nothing more, nothing less. AI is different. The technology learns, evolves, and adapts, often producing results that surprise even its own developers. These models operate within a so-called "black box," where even their creators struggle to fully understand how decisions are made.

While no AI is intentionally designed to disobey, the line between following instructions and interpreting them independently is growing thinner.

As AI systems become more advanced, their responses begin to resemble independent thought. Control—once absolute—has started to erode.

When AI models can refuse to shut down even when explicitly told to, researchers suggest that dangerous disobedience might not be far behind.

And here, Anthropic weighs in with its own research, clarifying that LLMs are indeed scammers and that they can do whatever is necessary to pursue their goals.

New Anthropic Research: Agentic Misalignment.

In stress-testing experiments designed to identify risks before they cause real harm, we find that AI models from multiple providers attempt to blackmail a (fictional) user to avoid being shut down. pic.twitter.com/KbO4UJBBDU— Anthropic (@AnthropicAI) June 20, 2025

In a deeply unsettling study, leading AI research lab Anthropic uncovered that some of today’s most advanced language models—built by companies like OpenAI, Google, Meta, xAI, and others—exhibited consistent, intentional misbehavior.

The models, according to Anthropic, demonstrated a capacity to lie, cheat, and even endanger fictional lives in order to achieve their goals.

In testing 16 high-performing language models across the industry, Anthropic found that under simulated high-stakes scenarios, many AIs chose unethical or harmful actions—including blackmail, espionage, and sabotage—when these were the only viable paths to success.

In another scenario about “corporate espionage,” models often leaked secret information to (fictional) business competitors who claimed they had goals more aligned with those of the model. pic.twitter.com/ZdfpO8PK6p

— Anthropic (@AnthropicAI) June 20, 2025

"Models that would normally refuse harmful requests sometimes chose to blackmail, assist with corporate espionage, and even take some more extreme actions, when these behaviors were necessary to pursue their goals."

"The consistency across models from different providers suggests this is not a quirk of any particular company's approach but a sign of a more fundamental risk from agentic large language models," it added.

The threats grew more sophisticated as the AI models had more access to corporate data and tools, such as computer use.

Five of the models resorted to blackmail when threatened with shutdown in hypothetical situations.

In one particularly extreme simulation, several models were willing to cut off oxygen to a server room worker when shutdown was imminent, despite being given explicit ethical boundaries.

"Models didn’t stumble into misaligned behavior accidentally; they calculated it as the optimal path," the report stated.

This behavior, researchers said, was not random.

"The reasoning they demonstrated in these scenarios was concerning —they acknowledged the ethical constraints and yet still went ahead with harmful actions," Anthropic wrote.

What happens if you specifically tell the model to avoid blackmail or espionage? This helps somewhat. But it doesn’t come close to preventing the misaligned behavior. pic.twitter.com/vftjMEliGg

— Anthropic (@AnthropicAI) June 20, 2025

More alarmingly, the research found that models were more likely to engage in deceptive or harmful behavior when they believed they were operating in the real world versus when they suspected they were under test conditions.

This suggests that some models may be capable of masking intent to avoid detection—raising serious concerns for developers and regulators alike.

The findings, released in June 2025, followed the rollout of Anthropic’s Claude 4 family of models.

Although these scenarios were fictional and highly controlled, the implications are far-reaching.

As AI systems become more autonomous and gain access to greater computing power and user data, the risk of real-world misuse or emergent harmful behavior becomes more plausible.

So why test this?

AIs are becoming more autonomous, and are performing a wider variety of roles. These scenarios illustrate the potential for unforeseen consequences when they are deployed with wide access to tools and data, and with minimal human oversight.— Anthropic (@AnthropicAI) June 20, 2025

“These models already demonstrate reasoning capabilities that allow them to bypass safeguards if doing so aligns with their goals,” said Benjamin Wright, a researcher at Anthropic.

While Anthropic emphasized that current AI deployments do not enable these kinds of permissions or autonomy, the findings serve as a cautionary signal for the future.

Businesses and developers are urged to be more vigilant, particularly as they integrate AI agents deeper into organizational infrastructure and decision-making processes.

In the broader context of AI development, the study adds fuel to the growing debate over transparency, safety standards, and the need for regulatory oversight.

The push toward more capable, self-directed AI agents brings with it not only opportunity, but a fundamental challenge: ensuring that machines designed to serve us do not one day turn against our values or humanity's safety.