Over the past few months, Apple has been quietly building anticipation around its plans for AI-enabled wearables.

Early speculations suggest that the products could arrive around 2027 as direct competitors to Meta’s Ray-Bans, alongside AirPods equipped with cameras and AI-driven features. While the exact form and function of these devices remain speculative, Apple has already showcased a clear glimpse of its AI potential through 'FastVLM.'

FastVLM is a simply a Visual Language Model.

Built on MLX, Apple’s proprietary open machine learning framework released in 2023, it allows developers to train and run models locally on devices while maintaining compatibility with familiar programming tools and AI workflows.

This approach ensures that AI processing can happen directly on the device, reducing latency, improving privacy, and minimizing reliance on cloud computing.

While there are others similar, FastVLM is fast, just like how the name suggests.

It's also extremely lightweight.

FastVLM itself is a highly optimized model, featuring the FastViTHD encoder, which is specifically designed for high-resolution image efficiency.

Compared to other visual language models, it is up to 3.2 times faster and 3.6 times smaller. This makes FastVLM exceptionally lightweight and responsive.

To achieve such speed, is they way it uses less number of tokens during inference. During this stage, where the model interprets data and produces output, FastVLM can work 85-times faster time-to-first-token.

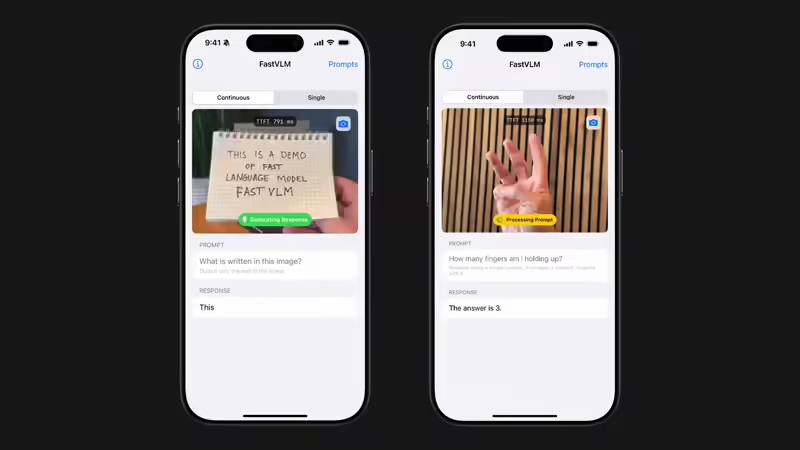

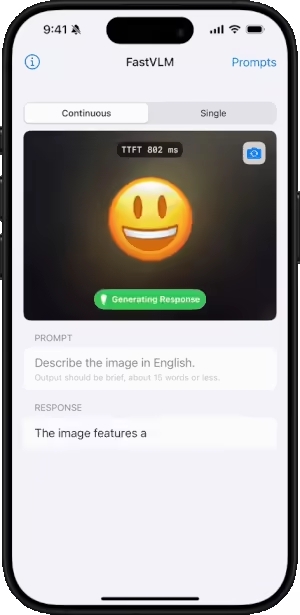

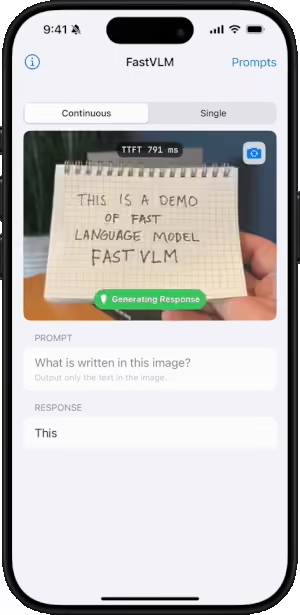

This combination of speed, low compute requirements, and compact size allows Apple devices to process complex visual information nearly instantaneously, whether identifying how many fingers a user is holding up, recognizing emojis on a screen, reading handwritten text, or analyzing objects and scenes in real time.

Originally available on GitHub, FastVLM has since been extended to Hugging Face.

The demo allows users to experiment with live video captions, object identification, text recognition, and emotion detection, all processed locally without sending data to external servers.

Even offline, the model can accurately describe scenes, track objects, and interpret visual input, highlighting the potential for private, low-latency AI applications. Users can adjust prompts to ask the model specific questions, such as the color of a shirt, objects held in hand, or emotions displayed in a scene, demonstrating both precision and responsiveness.

There are some drawbacks: it only works on Apple Silicon computers, and is limited to just 0.5 billion parameters (FastVLM-0.5B).

The siblings of this online version of the FastVLM family includes larger models with 1.5 billion parameters (FastVLM-1.5B) and 7 billion parameters (FastVLM-7B), which promise even greater accuracy and performance. Apple isn't making these models available on the web, partly due to being less practical for in-browser execution.

Nonetheless, the current capabilities reveal Apple’s focus on efficiency and speed, laying the groundwork for future wearables and assistive technologies where lightweight, high-performance AI will be crucial.

By combining local processing, low-latency output, and compact architecture, FastVLM illustrates how Apple is preparing to deliver fast, accurate, and privacy-conscious AI experiences that could reshape how we interact with devices and the world around us.