The AI industry was quiet and dull. It rarely made ripples that disrupt anything outside its own realm.

But this abruptly changed after OpenAI released ChatGPT. Since then, tech companies, large and small, race to develop the best Large Language Models, and make use of the trend to make profit.

While most products come from the West, the East is apparently not far behind.

After the likes of Kling AI and DynamiCrafter, and the MiniMax AI, the generative AI industry welcomes yet another product.

It's called 'Loopy', and that the AI is created by ByteDance, the Chinese version of TikTok.

From what ByteDance has showcased in a web page on GitHub, the AI can create things that are beyond astonishing.

Loopy is essentially a video diffusion model conditioned solely on audio.

The model is designed to generate realistic portrait avatars that move in sync with audio input. Here, Loopy can generate a variety of motions from audio alone, including non-verbal actions, emotion-driven eyebrow and eye movements, and natural head movements.

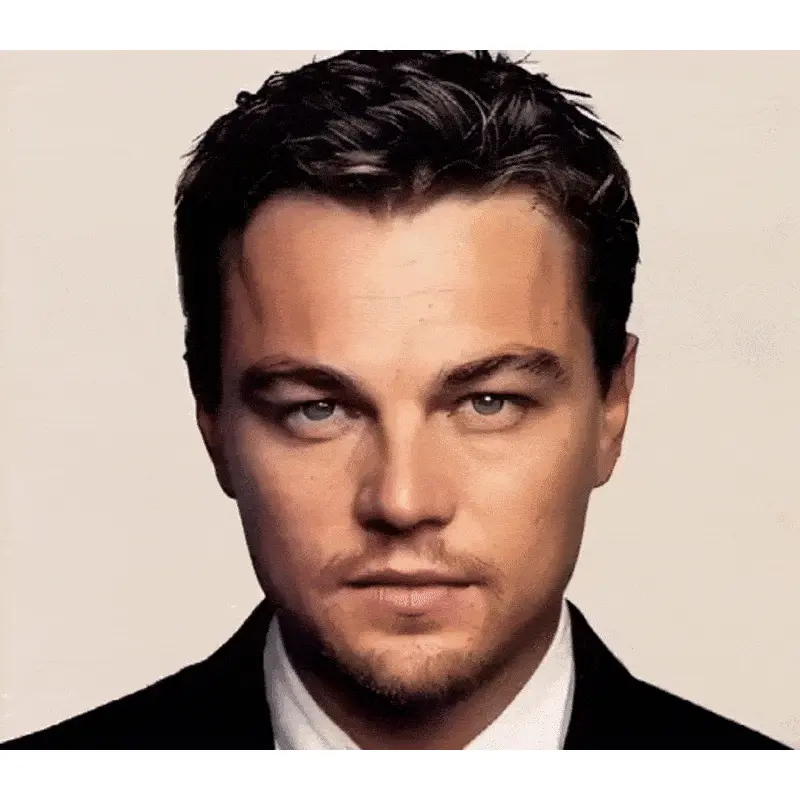

In essence, the AI is able to bring any single image to life, making it sing and talk with facial expressions from just an audio reference.

At this time, lip-syncing technology has advanced rapidly, thanks to breakthroughs in AI and machine learning.

Loopy here takes things to another level, by reshaping the possibilities of audio-to-video lipsyncing.

Whether users want to create animated avatars, realistic talking head videos, or immersive interactive experiences, Loopy offers remarkable precision in natural motion and seamless audio-visual synchronization.

Key features include:

- Temporal Module: The model includes an inter- and intra-clip temporal module, enabling it to leverage long-term motion information to learn natural motion patterns.

- Audio-to-Latents Module: This module enhances the correlation between audio and the movement of the portrait avatar.

- Motion Templates: Unlike existing methods, Loopy does not require manually specified spatial motion templates, resulting in more lifelike and high-quality avatars.

Loopy can be described as an end-to-end audio-conditioned video diffusion model.

By utilizing an innovative inter- and intra-clip temporal module alongside an audio-to-latents module, Loopy captures intricate details in both audio and facial movements, pushing beyond the limitations of previous models.

Whereas traditional lip-syncing models relied on rigid spatial templates to dictate facial motion, Loopy is able to learn from long-term motion patterns, allowing it to produce natural movements such as head gestures, eyebrow raises, and even subtle sigh.

By introducing Loopy, ByteDance is positioning itself right alongside other tech giants in the AI-powered content creation arena.

The buzz around ByteDance’s innovation stems from two key factors.

First, it’s democratizing video creation, in which it allows anyone to turn text into visually engaging videos, removing barriers for creators and marketers. Second, there’s the promise of hyper-personalized content. ByteDance’s technology could allow for videos tailored to each viewer, making content more engaging and relevant.

ByteDance’s move into AI video generation signals more than just a new direction for the company.

With Loopy's ability to capture complex motion patterns and produce high-quality, synchronized video, the AI also marks the start of a new era in digital content creation, set to revolutionize industries from entertainment to education, offering a powerful tool for AI enthusiasts, developers, and content creators.

But just with all advancements in AI that came before this, ethical considerations come into play.

Questions about authenticity, creative integrity, and the balance between human input and AI are crucial as this technology continues to grow.