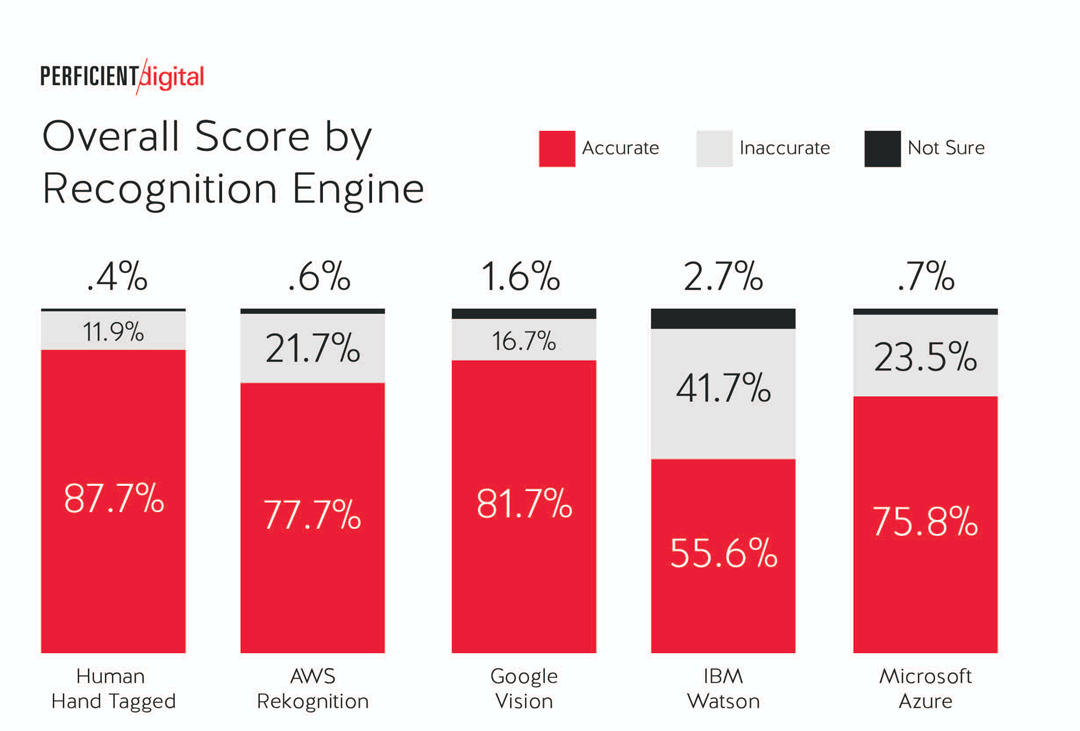

The race to build more capable image-recognition AIs continues, and Google appears to have the upper hand.

A study by Perficient Digital found that Google Vision beat its competitors, Amazon AWS Rekognition, Microsoft Azure Computer Vision and IBM Watson, at image recognition.

For the study, two humans collected and tagged 2,000 different images in four distinct categories: charts, landscapes, people and products.

In other words, each category had around 500 images.

The images were collected and tagged between November 30, 2018 and January 8, 2019.

Each human came up with and assigned five tags to describe each image.

Perficient then ran all 2,000 images through the analysis APIs of Google Vision, Amazon AWS Rekognition, IBM Watson and Microsoft Azure Computer Vision, which yielded a unique set of labels/tags for each image from each API.

The confidence metric of each API was then applied to identify the top five most confident labels for each image.
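The selection step described above can be sketched roughly as follows. This is a minimal illustration, not Perficient's code: the `Label` type, the `top_labels` function and the sample data are hypothetical, and the sketch assumes each API returns a numeric confidence score alongside every label.

```python
from typing import NamedTuple


class Label(NamedTuple):
    """One label returned by an image recognition API (hypothetical shape)."""
    text: str
    confidence: float  # assumed to lie between 0.0 and 1.0


def top_labels(labels: list[Label], k: int = 5) -> list[Label]:
    """Return the k labels with the highest confidence, most confident first."""
    return sorted(labels, key=lambda label: label.confidence, reverse=True)[:k]


# Made-up response for a single image, for illustration only.
response = [
    Label("sky", 0.98),
    Label("mountain", 0.95),
    Label("cloud", 0.71),
    Label("tree", 0.88),
    Label("lake", 0.93),
    Label("grass", 0.64),
    Label("snow", 0.90),
]

top_five = [label.text for label in top_labels(response)]
print(top_five)
```

Running the same function over each API's response for an image would give one comparable set of five labels per API.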

There were two ranking systems used:

1. Matching Human Descriptions Evaluation

The first system focused on identifying the image recognition engine-supplied tags that best aligned with the way that humans would describe the image.

Here, a different group of three humans, representing different age groups and genders, used an interface provided by Perficient to rank the images. Each individual was presented with the image and the top five labels collected from each API, including the “Human” API.

The rankers were then tasked with selecting and ordering the best five descriptors for the image. When two or more tags were similar, graders chose the best version of that label to describe the image.

This ranking process was completed February 4-11.

2. Accuracy Evaluation

The second ranking system focused on identifying whether the tags returned by the image recognition engines were accurate, even if they were not something that humans were likely to use in describing the image.

This involved three humans rating 500 of the 2,000 images purely for tag accuracy. All of the tags from each API and the humans were displayed in a UI next to the image. As with the Matching Human Descriptions Evaluation, all of the tags were anonymized.

Each tag was rated as Accurate, Inaccurate, or Unsure.

This ranking process took place from April 12, 2019 to May 9, 2019.
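One way such ratings could be turned into a per-source accuracy score is sketched below. The article does not say how the ratings were aggregated, so the formula (Accurate ratings divided by Accurate plus Inaccurate ratings, with Unsure left out), the `accuracy_by_source` function and the sample ratings are all assumptions made for illustration.

```python
from collections import Counter, defaultdict


def accuracy_by_source(ratings):
    """Compute the share of accurate tags per source.

    ratings: iterable of (source, verdict) pairs, where verdict is
    "Accurate", "Inaccurate", or "Unsure".
    "Unsure" ratings are excluded from the denominator (an assumption).
    """
    counts = defaultdict(Counter)
    for source, verdict in ratings:
        counts[source][verdict] += 1

    scores = {}
    for source, verdicts in counts.items():
        decided = verdicts["Accurate"] + verdicts["Inaccurate"]
        if decided:  # skip sources with only "Unsure" ratings
            scores[source] = verdicts["Accurate"] / decided
    return scores


# Made-up ratings with anonymized source names, for illustration only.
sample_ratings = [
    ("source_a", "Accurate"),
    ("source_a", "Accurate"),
    ("source_a", "Inaccurate"),
    ("source_a", "Unsure"),
    ("source_b", "Accurate"),
    ("source_b", "Accurate"),
    ("source_b", "Accurate"),
    ("source_b", "Inaccurate"),
]

scores = accuracy_by_source(sample_ratings)
print(scores)
```

Counting Unsure as Inaccurate instead would lower every score, so the choice should be stated alongside any reported number.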

The study found that Google Vision was the clear winner among the image recognition engines, both for raw accuracy and for consistency with how a human would describe an image.

IBM Watson finished last in these tests, but Perficient noted that IBM Watson excels at natural language processing, which was not the focus of this study.

As a matter of fact, IBM was the only major AI vendor at the time of the test to have built a full GUI (known as Watson Knowledge Studio) for custom NLP model creation, and its platform allows not only classification but also custom entity extraction through this GUI.

In summary, humans can still see and explain what they are seeing to other humans better than machines.

Humans excel here for reasons such as language specificity and a greater contextual knowledge base. AIs, on the other hand, often focus on attributes that are not of great significance to humans.

"So while these are accurate, humans are more likely to describe what they feel makes the image unique," the researchers said.

Image recognition technology keeps getting more accurate. But what makes the study interesting is that three of the four engines scored higher than human tags when the engine tags had a confidence level of 90%.

"That's a powerful statement for how far the image recognition engines have come," the researchers said.

But still, "all of the engines have a long way to go to develop the capability of describing the images in the way that a human would."