AI learns from patterns to work out what to do next. The more it learns about something, the better it becomes at doing that thing.

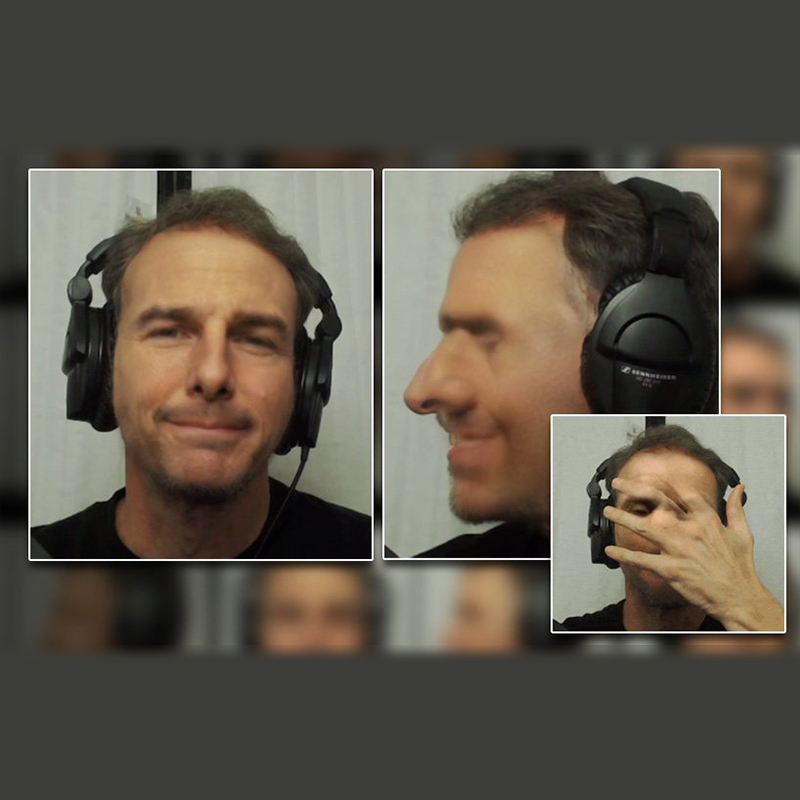

The opposite also applies: the less it learns about something, the weaker its understanding of that thing. So now that deepfakes have invaded video calls, where people can deepfake their own faces on the fly, the easiest way to spot a deepfake is to ask the person to turn sideways or to cover part of their face with something.

The trick was shared by Metaphysic.ai, a London-based startup behind the viral Tom Cruise deepfakes.

As a company that builds apps for creating video deepfakes, it knows more than a thing or two about how AI creates deepfakes.

According to the company, the AI models used to create deepfakes are mostly trained on data of people looking straight ahead.

Because of this, once a deepfake video caller turns his or her head a full 90 degrees, the deepfake starts to deteriorate.


Most AIs that create deepfakes are trained using videos and images of celebrities.

Celebrities have tons of footage and photos of themselves publicly available on the internet and social media. Even so, there is rarely enough data to teach an AI how these famous people look from the side. And even when there is enough, few people train their deepfake models on that kind of material.

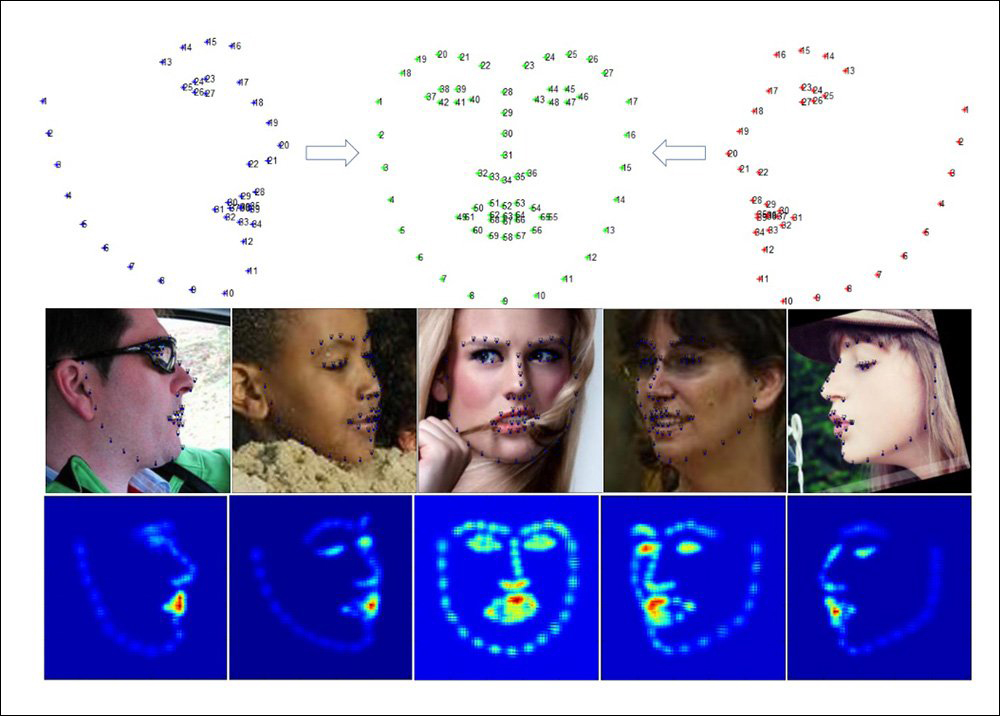

The team at Metaphysic.ai also believes the issue stems from the fact that deepfake software has fewer reference points (facial landmarks) to work with when estimating a face seen from the side.

As a result, when someone using a deepfake turns his or her head sideways, the algorithm, which has only half as many landmarks for profiles as for front-on views, simply cannot guess what the next frame should look like.

The end result is that the face becomes distorted, and the spell is broken.

"Typical 2D alignment packages consider a profile view to be 50% hidden, which hinders recognition, as well as accurate training and subsequent face synthesis," explained Metaphysic.ai's Martin Anderson.

"Frequently the generated profile landmarks will ‘leap out’ to any possible group of pixels that may represent a ‘missing eye’ or other facial detail that’s obscured in a profile view."

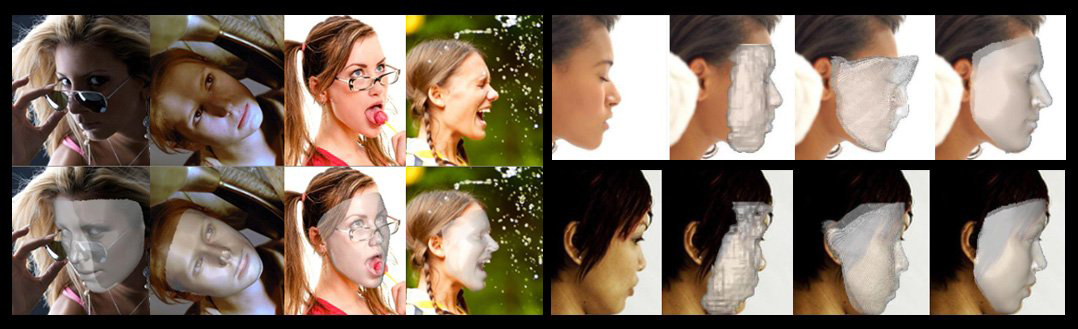

While it is possible to create deepfakes that render faces properly from the side, doing so takes considerably more work.

Because deepfake models may lack the training data and landmarks needed to generate realistic lateral views, patching the flaw may require extensive post-processing.

Another approach would be to use a hyper-realistic CGI head, created specifically to provide the ‘missing’ angles in a deepfake model's training dataset.

"However, deepfakes have been capable, almost from the start, of producing more realistic and convincing images than CGI can, because they’re based on real data rather than artistic interpretation," the researchers said. "Therefore, not only is this a backward approach to the challenge, but it would require a level of industry-standard artistry even to ‘fail well’."

Even if all of these issues were addressed, there would still be the problem of resolution.


In the absence of high-quality profile images as training input, novel-view synthesis systems such as Neural Radiance Fields (NeRF), Generative Adversarial Networks (GANs) and Signed Distance Fields (SDF) may not be able to infer enough detail to accurately imitate a person's profile views, at least according to the researchers.

And while there are ways to improve deepfakes further, spotting them has already become increasingly hard.

Spotting a deepfake may not be that hard if you already suspect the material is one. The real threat comes from deepfakes being shared with people who do not expect what they are seeing to be fake.

"The real threat of such attacks could be that we are not expecting them, and are likely to actually aid deepfake attackers by dismissing artefacts and glitches that might have raised our state of alert if we were more aware of how fallible video and audio content is becoming," said the researcher.

In practice, this is often the case: deepfake videos made intentionally to deceive are rarely labeled as deepfakes.