Artificial intelligence can do a lot of things, but its capabilities are limited by the material it learns from. As a result, an AI's output can be biased, and that is a problem.

Ritika Gunnar, VP of IBM Watson Data and AI, said that the lack of trust and transparency in AI models is holding back enterprise deployments at scale and in production. Put simply, many AI projects are halted due to concerns about how real-time decision making can harm a business.

"It's a real problem and trust is one of the most important things preventing AI at scale in production environments," she said.

IBM wants to provide a solution by launching a software service that scans AI systems as they work in real time in order to detect biases and provide explanations when automated decisions are being made.

This trust and transparency system runs in the IBM Cloud and works with a wide variety of popular machine learning frameworks and AI-build environments, including IBM's own Watson, as well as TensorFlow, SparkML, AWS SageMaker, and AzureML.

IBM said that the tool can be customized by the client to specific needs through programming to take into account the "unique decision factors of any business workflow."

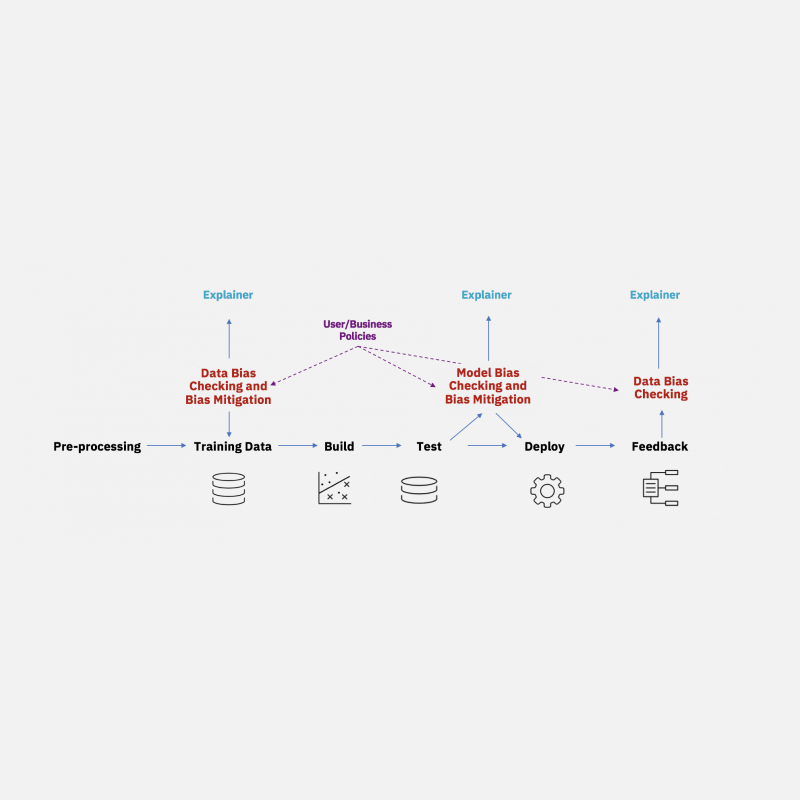

The system is delivered as an automated SaaS offering. It explains an AI's decision making to researchers and detects biases in AI models at runtime. This means that as the AI makes decisions, the system should be able to capture "potentially unfair outcomes as they occur," as IBM explained.
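To make the idea of catching unfair outcomes at runtime more concrete, here is a minimal Python sketch. It is not IBM's implementation. It assumes one common fairness measure, the disparate impact ratio (the favorable-outcome rate of one group divided by that of another), and a hypothetical 0.8 alert threshold borrowed from the widely cited "four-fifths" rule of thumb. The class name, method names and group labels are all invented for illustration.

```python
from collections import defaultdict


class FairnessMonitor:
    """Hypothetical monitor that tracks approval rates per group as decisions arrive."""

    def __init__(self, threshold=0.8):
        # Assumed alert threshold (the "four-fifths" rule of thumb).
        self.threshold = threshold
        self.totals = defaultdict(int)
        self.favorable = defaultdict(int)

    def record(self, group, approved):
        """Log one automated decision for a member of `group`."""
        self.totals[group] += 1
        if approved:
            self.favorable[group] += 1

    def rate(self, group):
        """Share of favorable decisions seen so far for `group`."""
        total = self.totals[group]
        return self.favorable[group] / total if total else 0.0

    def disparate_impact(self, unprivileged, privileged):
        """Ratio of favorable rates; None if it cannot be computed yet."""
        privileged_rate = self.rate(privileged)
        if privileged_rate == 0:
            return None
        return self.rate(unprivileged) / privileged_rate

    def is_flagged(self, unprivileged, privileged):
        """True when the ratio falls below the assumed threshold."""
        ratio = self.disparate_impact(unprivileged, privileged)
        return ratio is not None and ratio < self.threshold


# Example stream of (group, approved) decisions; the data is made up.
monitor = FairnessMonitor()
decisions = [("A", True), ("A", True), ("A", True), ("A", False),
             ("B", True), ("B", False), ("B", False), ("B", False)]
for group, approved in decisions:
    monitor.record(group, approved)

print(monitor.disparate_impact("B", "A"), monitor.is_flagged("B", "A"))
```

Because the counters update with every decision, a check like `is_flagged` can run continuously alongside the model rather than only in an offline audit.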

To detect bias, the system analyzes each AI decision by identifying which factors weighted the decision in one direction or the other. Its explanation also takes into account the AI's confidence in that decision.
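As a rough illustration of what "factors weighting a decision" can look like, the sketch below assumes a simple logistic scoring model, where each factor's contribution is its weight times its value. IBM has not said its service works this way. The function name, feature names and numbers are hypothetical and chosen to echo an insurance claim decision.

```python
import math


def explain_decision(weights, bias, features):
    """Explain one decision of an assumed logistic model.

    Returns the decision, the model's confidence in it, and the factors
    ranked by how strongly they pushed the score in either direction.
    """
    # Per-factor contribution: positive pushes toward approval, negative away.
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-score))  # probability of approval
    approved = probability >= 0.5
    confidence = probability if approved else 1.0 - probability
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return {"approved": approved, "confidence": confidence, "factors": ranked}


# Hypothetical claim with made-up, already-normalized feature values.
weights = {"claim_amount": -0.8, "years_as_customer": 0.5, "prior_claims": -1.2}
claim = {"claim_amount": 1.5, "years_as_customer": 2.0, "prior_claims": 1.0}

result = explain_decision(weights, bias=0.5, features=claim)
print(result["approved"], round(result["confidence"], 2))
for name, contribution in result["factors"]:
    print(f"{name}: {contribution:+.2f}")
```

A breakdown like this shows which factors drove a rejection and how sure the model was, which is the kind of information a reviewer needs before asking whether a factor is acting as a proxy for something it should not.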

If the system finds any bias, it can also recommend data to add to the model to help mitigate the issue.

To help manage AI development, IBM said the software also keeps records of each model's accuracy, performance and fairness, along with the lineage of the AI systems. Researchers can then easily trace and recall these records "for customer service, regulatory or compliance reasons."

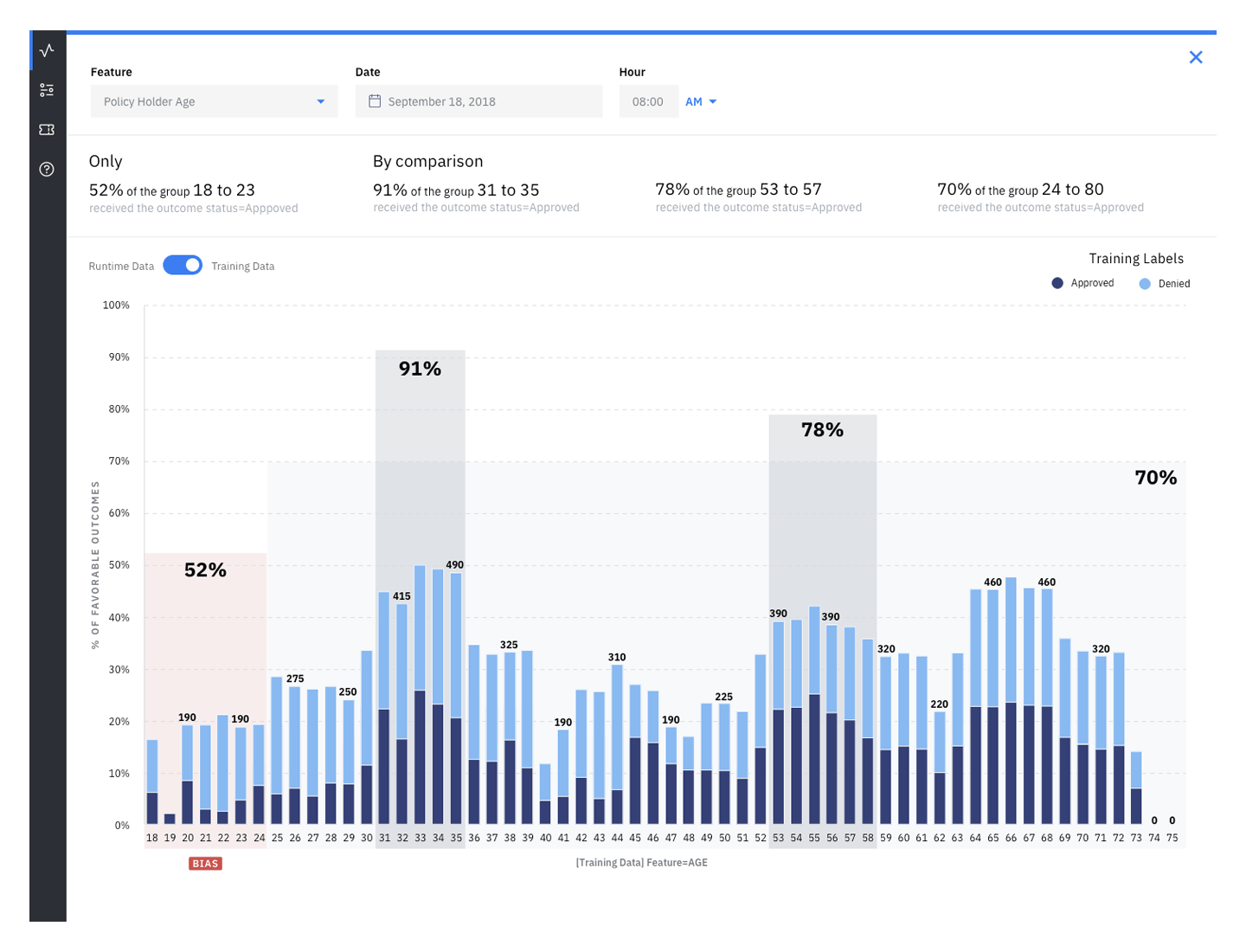

These capabilities are available through the software's AI scanner tool, which provides a breakdown of automated decisions via visual dashboards.

IBM is not the first company to offer businesses tools to spot AI bias. Several other companies that are making a major push toward automation are also scrambling to build their own tools to patch biases and other AI-related problems.

With AI use increasing across many industries, fixing AI bias should be a priority. Because AI is used to automate decisions in areas such as policing, insurance and what information people see online, AI bias can be a huge problem.

According to Gunnar, AI bias goes well beyond factors such as gender and race. One scenario, for example, could revolve around an insurance claims process in which an adjuster decides whether to approve or reject a claim.

IBM also plans to open source its AI bias detection and mitigation tools in what it calls the 'AI Fairness 360 toolkit'. The toolkit provides a library of algorithms, code and tutorials. With this, the company hopes that academics, researchers and data scientists can integrate bias detection into their models, encouraging a "global collaboration around addressing bias in AI."

"It's time to translate principles into practice," said David Kenny, SVP of cognitive solutions at IBM. "We are giving new transparency and control to the businesses who use AI and face the most potential risk from any flawed decision making."

IBM intends to offer its own professional services to help business clients use the software service. In other words, IBM wants to sell both an AI fix and the experts to help researchers do their jobs.