Photos taken in dimly lit environments have to strike a balance between blur and noise. That balance is hard to achieve without a tripod and a long exposure time, and Intel is teaching AI to do it.

Researchers from the University of Illinois Urbana-Champaign and Intel have trained a neural network to process low-light images, such as those taken in a room lit only by candlelight.

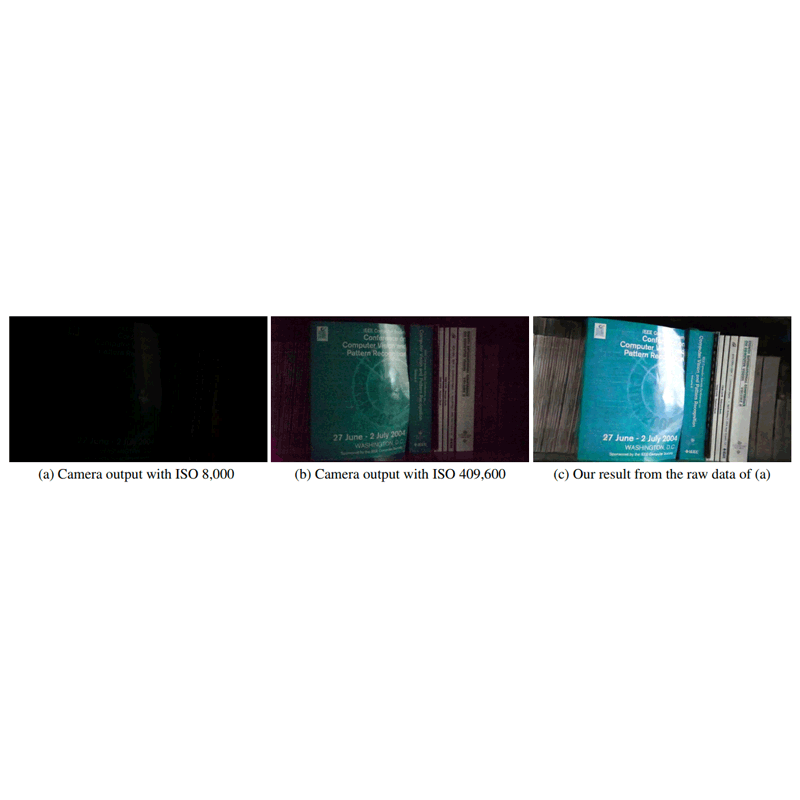

The AI takes images that appear pitch black or full of noise, then processes and enhances them to make them bright and colorful.

To build what the researchers called the "See-in-the-Dark" (SID) data set, they used a Sony a7S II for some shots and a Fujifilm X-T2 for others, taking two different images in limited light:

One with a short exposure (0.03 to 0.1 seconds long), and the other with a long exposure (10 to 30 seconds long), using a remote app to control the camera without touching it.
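As a rough illustration of how far apart those two exposures are, the sketch below computes the ratio between them. The function name is hypothetical, and it only uses the extremes of the ranges quoted above; the actual image pairs in the data set may not span that full range.

```python
def exposure_ratio(short_s, long_s):
    """Return how many times longer the long exposure is than the short one."""
    if short_s <= 0 or long_s <= 0:
        raise ValueError("exposure times must be positive")
    return long_s / short_s


# Extremes of the quoted ranges, in seconds.
least = exposure_ratio(short_s=0.1, long_s=10)   # longest short vs. shortest long exposure
most = exposure_ratio(short_s=0.03, long_s=30)   # shortest short vs. longest long exposure

print(f"The long exposure is roughly {least:.0f}x to {most:.0f}x longer")
```

Assuming the aperture and ISO stay the same between the two shots, the short exposure collects correspondingly less light than its long-exposure reference, which is why it looks nearly black.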

The researchers then fed the RAW image data to the AI, which took over the job of the camera's image processor, to get an image with less noise and without odd colors.

After that, the researchers repeated the process around 5,000 times.

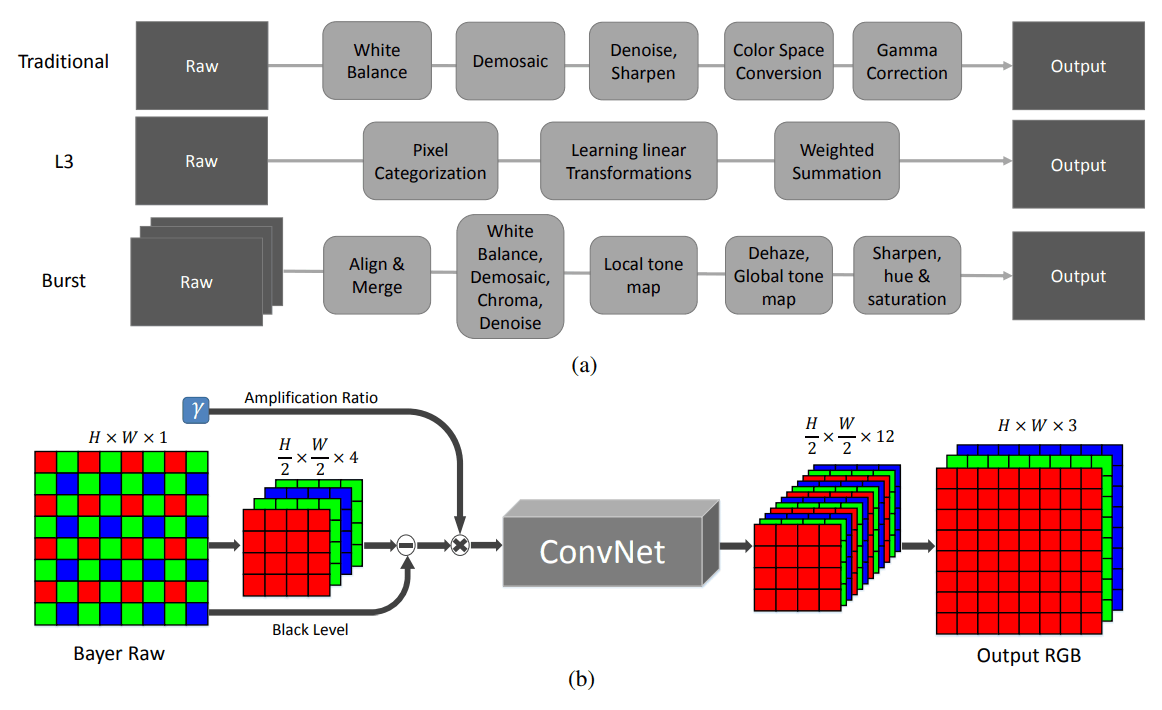

Before feeding the images to the AI, the team first separated them into different color channels, subtracted the black level, and reduced their resolution.
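The preprocessing described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the researchers' code: the function name `pack_bayer`, the RGGB Bayer layout, and the black and white level values are all hypothetical and vary by sensor, and the black-level step is interpreted as subtracting the sensor's black offset. Sensors with a different color filter layout would need a different packing.

```python
import numpy as np


def pack_bayer(raw, black_level=512, white_level=16383):
    """Pack an (H, W) Bayer mosaic into an (H/2, W/2, 4) array.

    Assumptions (illustrative only): RGGB layout, and the given
    black/white levels for the sensor.
    """
    raw = raw.astype(np.float32)

    # Subtract the black level, clamp at zero, and scale to [0, 1].
    norm = np.clip(raw - black_level, 0, None) / (white_level - black_level)

    # Drop a trailing row/column if the dimensions are odd.
    h, w = norm.shape
    norm = norm[: h - h % 2, : w - w % 2]

    # Each 2x2 tile contributes one sample per channel, so every
    # output channel has half the width and half the height.
    return np.stack(
        [
            norm[0::2, 0::2],  # red
            norm[0::2, 1::2],  # green (on red rows)
            norm[1::2, 1::2],  # blue
            norm[1::2, 0::2],  # green (on blue rows)
        ],
        axis=-1,
    )


# A random stand-in for a real RAW capture.
fake_raw = np.random.randint(512, 16384, size=(8, 12)).astype(np.uint16)
packed = pack_bayer(fake_raw)
print(fake_raw.shape, "->", packed.shape)
```

Splitting the mosaic this way is what reduces the resolution: the four color planes together hold the same samples as the original image, but each plane is half as wide and half as tall.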

RAW format was chosen instead of JPEG because it lets the algorithms create brighter images with less noise. The resulting images also have a more accurate white balance.

With additional research, this processing algorithm could help cameras take images with less noise in dark environments. It could help smartphones perform better in low light, and enhance handheld shots from DSLRs and mirrorless cameras, the team suggests.

Video could also benefit, since taking an image with longer exposure isn’t possible while maintaining a standard frame rate.

So far, however, the sample images have all been of stationary objects.

The image processing method was also slower than standard methods, taking 0.38 and 0.66 seconds to process an image at a reduced resolution. This is far too slow for burst shots on standard cameras.
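To put those timings in perspective, here is a back-of-the-envelope conversion from seconds per image to frames per second. The 30 fps target is an assumed, typical video frame rate used only for comparison, not a figure from the researchers.

```python
def frames_per_second(seconds_per_image):
    """Convert a per-image processing time into a sustained frame rate."""
    return 1.0 / seconds_per_image


# Processing times quoted above, in seconds per image.
reported_times = [0.38, 0.66]

# Assumption: 30 fps as a typical video frame rate (illustrative only).
TARGET_FPS = 30.0

for t in reported_times:
    fps = frames_per_second(t)
    shortfall = TARGET_FPS / fps
    print(f"{t:.2f} s/image -> {fps:.2f} fps, "
          f"about {shortfall:.0f}x too slow for {TARGET_FPS:.0f} fps")
```

At those speeds the method manages only a few frames per second at best, which also bears on the video use case mentioned earlier.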

What's more, the data set was designed for a specific camera sensor, with no additional research yet on data sets for multiple sensors. This means the whole process would have to be repeated if the method were applied to a new camera sensor.

According to the researchers, further research could look into those limitations.