Usefulness is judged not only by knowledge but also by trustworthiness, and that is what Microsoft is after.

Ever since OpenAI's release of ChatGPT sparked an arms race, tech companies large and small have been rushing to showcase their best products, leveraging the generative AI hype that has taken over the tech industry.

While Large Language Models (LLMs) have become increasingly capable, they are still plagued by hallucination.

LLMs can produce information that is incorrect, misleading, or entirely fabricated because they generate text based on patterns in the data they were trained on, meaning that they may not fully understand facts in a human sense. Some LLMs also lack real-time knowledge, and because they tend to be designed to generalize from vast datasets, they can overfit or generate incorrect information when addressing a novel query or a request that deviates from the patterns in their training data.

Issues in their training data, prompt ambiguity, and the fact that LLMs are designed to sound confident in their responses can further lead to hallucinations, especially when LLMs attempt to "fill in the gaps" in their knowledge.

Microsoft wants to solve AI hallucination, with AI.

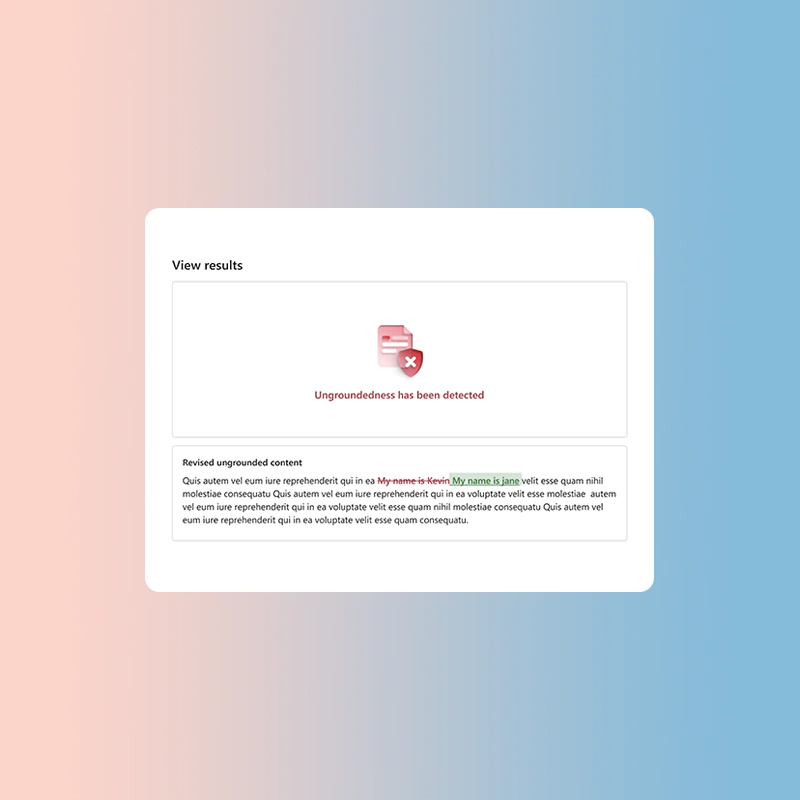

With what it calls the 'Correction' tool, Microsoft is offering an AI-based feature that can identify, flag, and rewrite inaccurate AI outputs.

In a blog post, Microsoft explained how the tool works.

The idea is to use Microsoft's existing 'Groundedness Detection' to identify ungrounded or hallucinated content in AI outputs.

The approach is supposed to help developers enhance generative AI applications by pinpointing responses that lack a foundation in connected data sources.

"Since we introduced groundedness detection in March of this year, our customers have asked us: 'What else can we do with this information once it’s detected besides blocking?' This highlights a significant challenge in the rapidly evolving generative AI landscape, where traditional content filters often fall short in addressing the unique risks posed by Generative AI hallucinations," Microsoft explained.

The goal of this Correction tool is to give users the ability to "both understand and take action on ungrounded content and hallucinations."

The tool, which runs on Azure AI Content Safety, can both identify and correct hallucinations in real time before users of generative AI applications encounter them.

This should be especially useful as the demand for reliability and accuracy in AI-generated content continues to grow.
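To make the detect-then-correct idea more concrete, here is a minimal sketch in Python. It is not Microsoft's method and does not call Azure AI Content Safety. It is a toy stand-in that assumes a simple word-overlap heuristic, and every name in it (`detect_ungrounded`, `correct`, `GROUNDED_THRESHOLD`) as well as the sample texts are hypothetical. It also only drops flagged sentences, whereas the tool described above rewrites them.

```python
import re

# Hypothetical threshold: minimum share of a sentence's content words
# that must also appear in the source text to count as grounded.
GROUNDED_THRESHOLD = 0.6

# Small illustrative stopword list (an assumption, not a standard set).
STOPWORDS = {"the", "a", "an", "is", "are", "was", "were",
             "of", "in", "on", "and", "to", "it", "at", "by"}


def content_words(text):
    """Return the set of lowercase words in text, minus stopwords."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower())
            if w not in STOPWORDS}


def split_sentences(text):
    """Naive sentence splitter: break after '.', '!' or '?'."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def detect_ungrounded(answer, source):
    """Flag sentences whose content words are mostly absent from source."""
    source_words = content_words(source)
    flagged = []
    for sentence in split_sentences(answer):
        words = content_words(sentence)
        if not words:
            continue
        support = len(words & source_words) / len(words)
        if support < GROUNDED_THRESHOLD:
            flagged.append(sentence)
    return flagged


def correct(answer, source):
    """Toy correction: keep only the sentences that were not flagged."""
    flagged = set(detect_ungrounded(answer, source))
    kept = [s for s in split_sentences(answer) if s not in flagged]
    return " ".join(kept)


# Made-up source document and AI answer, for illustration only.
source = "The Contoso X1 laptop has a 14-inch display and weighs 1.2 kg."
answer = "The Contoso X1 has a 14-inch display. It ships with a free printer."

print(detect_ungrounded(answer, source))
print(correct(answer, source))
```

In this example the second sentence has no support in the source text, so it is flagged and left out of the corrected answer. A production system would rely on a trained model rather than word overlap, which cannot catch paraphrases or subtle contradictions.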

Microsoft's Correction tool was introduced as part of what the company calls the 'Microsoft Trustworthy AI' initiative.


In a separate blog post, Microsoft said that:

"At Microsoft, we have commitments to ensure Trustworthy AI and are building industry-leading supporting technology. Our commitments and capabilities go hand in hand to make sure our customers and developers are protected at every layer."

Besides the Correction tool, Microsoft also introduced 'Embedded Content Safety,' which allows customers to embed Azure AI Content Safety on devices; 'New evaluations' in Azure AI Studio, which help customers assess the quality and relevancy of outputs and how often their AI applications output protected material; and 'Protected Material Detection for Code,' which helps detect pre-existing content and code.
