AI is good at turning data into insights. But it's only as good as the data it was trained on.

The AI field was quite dull before OpenAI introduced its world-changing ChatGPT. Since then, the hype has disrupted the industry, sending practically everyone into a frenzy.

ChatGPT has proven itself a very capable generative AI that can help users with a variety of tasks, thanks to its ability to maintain human-like conversation.

The thing is, generative AIs suffer from what's called AI "hallucination."

AI hallucinations occur when models like OpenAI's ChatGPT, or any other generative AI such as Google Bard, fabricate information entirely while presenting it as fact.

One famous example is when Google Bard's promotional video made a false claim about the James Webb Space Telescope.

According to the OpenAI researchers in a report:

"These hallucinations are particularly problematic in domains that require multi-step reasoning, since a single logical error is enough to derail a much larger solution."

Due to the so-called "black box" of AI, even the researchers at OpenAI themselves have no idea how to properly eliminate these hallucinations.

All they can do is try.

This is why OpenAI prominently warns users against blindly trusting ChatGPT, presenting a disclaimer that reads, "ChatGPT may produce inaccurate information about people, places, or facts."

But as the pioneer of the hype, OpenAI sees itself as responsible, and because of that, it is taking up the fight against these AI hallucinations.

To do this, the company announced that it has come up with a novel method for training AI models.

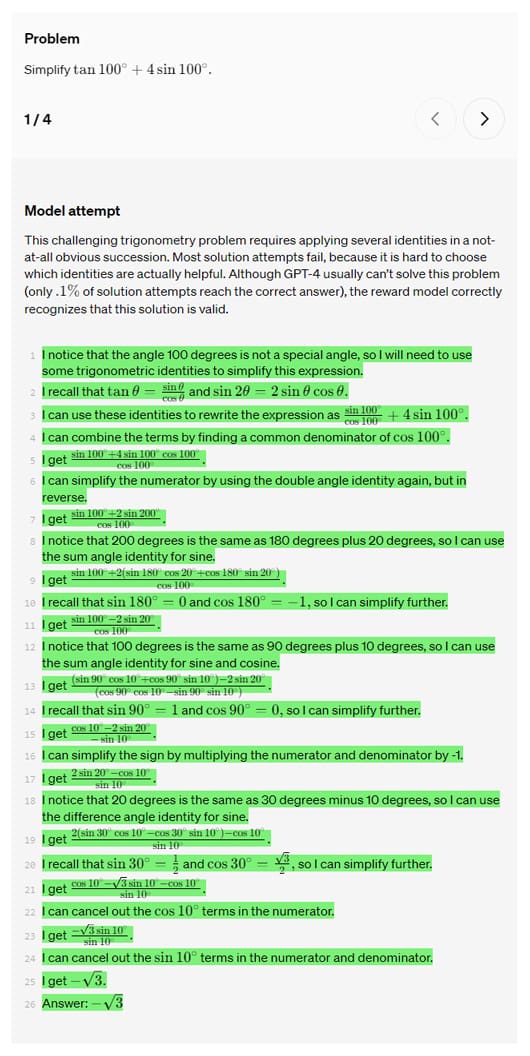

The method to fight AI fabrications is to reward the AI for each individual correct step of reasoning on the way to an answer, as opposed to rewarding it only when it reaches a correct final conclusion.
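The difference between the two reward schemes can be illustrated with a minimal Python sketch. This is not OpenAI's implementation: the `Step` class, the `is_correct` labels, and both reward functions are hypothetical names invented for illustration, and the per-step labels are assumed to come from some outside source such as a human grader.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Step:
    text: str
    is_correct: bool  # hypothetical label, assumed to come from a grader


def outcome_reward(final_answer: str, expected: str) -> float:
    """One reward for the whole solution, based only on the final answer."""
    return 1.0 if final_answer == expected else 0.0


def process_rewards(steps: List[Step]) -> List[float]:
    """One reward per reasoning step, regardless of the final answer."""
    return [1.0 if step.is_correct else 0.0 for step in steps]


# Toy problem: 2 * (3 + 4). The solution below lands on the right answer (14)
# only because two arithmetic mistakes happen to cancel out.
steps = [
    Step("Evaluate the parentheses first", True),
    Step("3 + 4 = 8", False),
    Step("2 * 8 = 14", False),
]

final_reward = outcome_reward("14", expected="14")
step_rewards = process_rewards(steps)
print(final_reward)   # reward based on the final answer alone
print(step_rewards)   # reward for each individual step
```

In this toy case, the final-answer reward cannot tell the flawed solution from a sound one, while the per-step rewards flag the two faulty steps.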

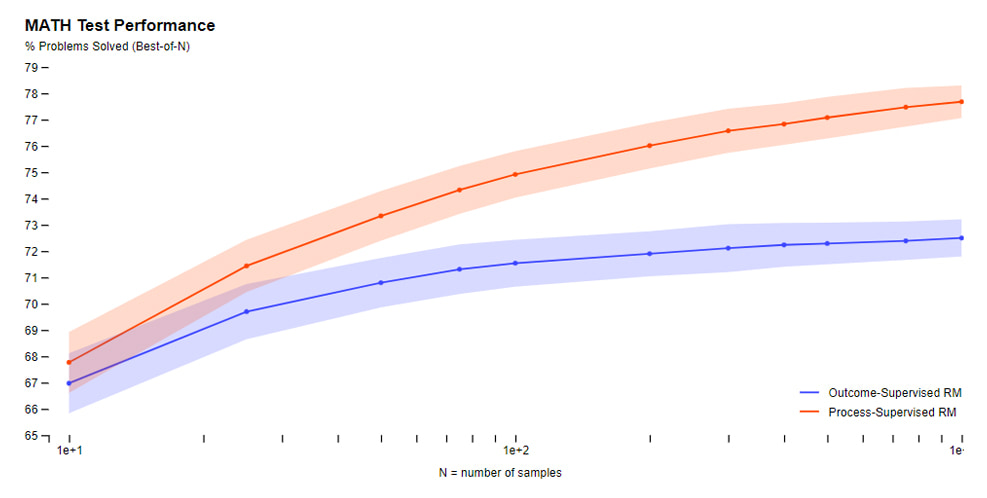

"We evaluate our process-supervised and outcome-supervised reward models using problems from the math test set," OpenAI said. "We generate many solutions for each problem and then pick the solution ranked the highest by each reward model."
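The selection step described in the quote, generating many solutions and keeping the one the reward model ranks highest, can be sketched roughly as follows. The candidate data, the function names, and the scoring rule are assumptions made for illustration: here a solution's score is taken to be the product of hypothetical per-step correctness probabilities, which the article itself does not specify.

```python
import math
from typing import Callable, Dict, List

Candidate = Dict[str, object]


def solution_score(step_probs: List[float]) -> float:
    """Assumed scoring rule: the product of per-step correctness probabilities."""
    return math.prod(step_probs)


def best_of_n(candidates: List[Candidate],
              score: Callable[[Candidate], float]) -> Candidate:
    """Return the candidate solution that the scoring function ranks highest."""
    return max(candidates, key=score)


# Hypothetical candidates for one problem. The probabilities are made-up
# values standing in for a reward model's per-step judgments.
candidates = [
    {"answer": "14", "step_probs": [0.9, 0.2, 0.3]},
    {"answer": "14", "step_probs": [0.95, 0.9, 0.9]},
    {"answer": "15", "step_probs": [0.9, 0.6, 0.1]},
]

best = best_of_n(candidates, lambda c: solution_score(c["step_probs"]))
print(best["answer"])
```

Under this assumed rule, a single low-confidence step drags down the score of the whole solution, so the candidate whose every step looks sound is preferred.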

The approach is called "process supervision," as opposed to "outcome supervision."

According to OpenAI, this could lead to more explainable AI, simply because the approach encourages models to follow a more human-like chain of "thought."

The research team concluded this because process supervision exposes each step of the model's reasoning to scrutiny, not just its final answer.

"Mitigating hallucinations is a critical step towards building aligned AGI," the company said.

The research comes at a time when misinformation stemming from AI systems is more hotly debated than ever.

OpenAI's ChatGPT has been front and center in the AI hype around the world, making others scramble for their own solutions. It's this particular tech product that accelerated the generative AI boom.

Its chatbot, powered by GPT-3.5 and GPT-4, surpassed 100 million monthly users in just two months, reportedly setting a record for the fastest-growing app ever.

While OpenAI's method to curb AI hallucination is a novel approach, some experts are skeptical.

This is because OpenAI has yet to release the full datasets accompanying the examples.

OpenAI also didn't say anything about whether its method has been peer-reviewed or reviewed in another format.

Regardless, OpenAI acknowledged that it is unknown how the results will pan out beyond mathematics, and said that future work must explore the impact of process supervision in other domains.

"If these results generalize, we may find that process supervision gives us the best of both worlds—a method that is both more performant and more aligned than outcome supervision," OpenAI said.