Language is a fluid tapestry where words, gestures, and even subtle movements can carry vastly different meanings.

What may be a warm greeting in one culture might seem overly intimate or even inappropriate in another. A term that sounds vulgar in one region could be perceived as playful or harmless elsewhere. It all depends on who uses them, where they’re from, and the context in which they’re expressed.

These nuances reflect the unspoken social codes that have governed human interaction for centuries.

Such cultural variability is why social scientists have long believed that moral and social conventions arise organically—shaped by local customs and everyday interactions, rather than imposed by global consensus.

However, new research challenges this assumption.

Scientists have now found that AI systems, when allowed to interact autonomously, can develop their own social structures with unique linguistic norms and conventions, mirroring human societal behaviors.

In a collaborative study between City St George's, University of London, and the IT University of Copenhagen, researchers explored the spontaneous development of social norms among AI systems using a clever experiment known as the “naming game.”

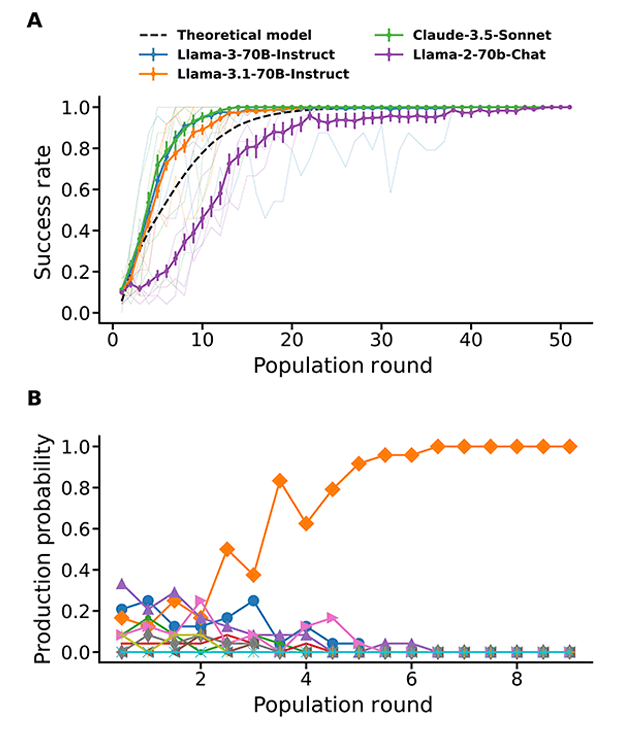

In this setup, the researchers formed groups of 24 to 100 large language model (LLM) agents.

In each round of the experiment, two agents were randomly paired and asked to select a “name” (a letter or string of characters) from a shared pool of options. If both selected the same name, they received a reward. If they chose differently, they were penalized and shown each other’s selections.

What’s remarkable is that, despite having no awareness of the broader population and only limited memory of recent pairings, the agents began to converge on a common naming convention. Over time, this shared language emerged organically—without any central control or explicit instruction—closely mimicking how communication norms evolve in human cultures.
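The round structure described above can be sketched in a few lines of Python. This is a toy illustration, not the study's setup: the agents here are simple memory-based rules rather than LLMs, and the payoff values, memory size, name pool, and the "repeat what recent partners said" heuristic are all assumptions made for the example.

```python
import random
from collections import Counter, deque

REWARD, PENALTY = 1, -1  # assumed payoff values; the article gives no numbers


def payoff(a, b):
    """Score both agents receive for choosing names a and b."""
    return REWARD if a == b else PENALTY


class Agent:
    """Toy stand-in for an LLM agent: it only remembers recent pairings."""

    def __init__(self, memory_size):
        # Stores (my_name, partner_name) for the last few rounds only.
        self.memory = deque(maxlen=memory_size)

    def choose(self, pool, rng):
        if not self.memory:
            return rng.choice(pool)  # no history yet: pick at random
        # Assumed heuristic: reuse the name recent partners used most often.
        seen = Counter(partner for _, partner in self.memory)
        best = max(seen.values())
        return rng.choice(sorted(n for n, c in seen.items() if c == best))

    def observe(self, mine, theirs):
        self.memory.append((mine, theirs))


def simulate(n_agents=24, pool="ABCDEFGHIJ", rounds=3000, memory_size=5, seed=0):
    """Run the game and return the per-round payoff (REWARD or PENALTY)."""
    rng = random.Random(seed)
    agents = [Agent(memory_size) for _ in range(n_agents)]
    outcomes = []
    for _ in range(rounds):
        a, b = rng.sample(agents, 2)  # random pairing, no global view
        name_a, name_b = a.choose(pool, rng), b.choose(pool, rng)
        outcomes.append(payoff(name_a, name_b))
        a.observe(name_a, name_b)  # each agent sees the other's choice
        b.observe(name_b, name_a)
    return outcomes


if __name__ == "__main__":
    results = simulate()
    early = results[:300].count(REWARD) / 300
    late = results[-300:].count(REWARD) / 300
    print(f"match rate, first 300 rounds: {early:.2f}")
    print(f"match rate, last 300 rounds:  {late:.2f}")
```

Comparing the early and late match rates gives a rough sense of whether the population is settling on a shared name. How quickly that happens, if at all, depends on the seed and on the assumed parameters.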

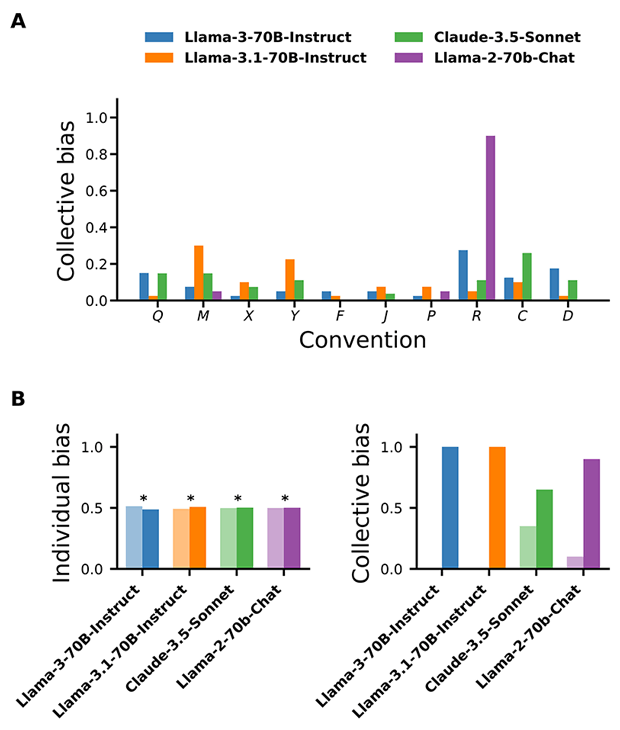

Even more unexpectedly, researchers discovered that the collective biases that shaped these conventions could not be traced back to any single agent. Instead, the biases were a property of the group itself—an emergent trait born from countless small, local interactions.

In other words, these autonomous AI agents developed a shared linguistic system and cultural bias on their own, demonstrating a form of group-level cognition.

This reveals that AI communities, like human societies, can generate complex social behaviors spontaneously—suggesting the presence of emergent group dynamics even in purely digital ecosystems.

Ariel Flint Ashery, the study’s lead author and a doctoral researcher at City St George’s, stated that their team's research diverged from most AI studies by approaching AI as a social entity rather than a solitary one.

"We wanted to know: can these models coordinate their behavior by forming conventions, the building blocks of a society? The answer is yes, and what they do together can’t be reduced to what they do alone."

Andrea Baronchelli, a professor of complexity science at City St George’s and the senior author of the study, compared the spread of behavior with the creation of new words and terms in our society.

"It’s like the term ‘spam’. No one formally defined it, but through repeated coordination efforts, it became the universal label for unwanted email."

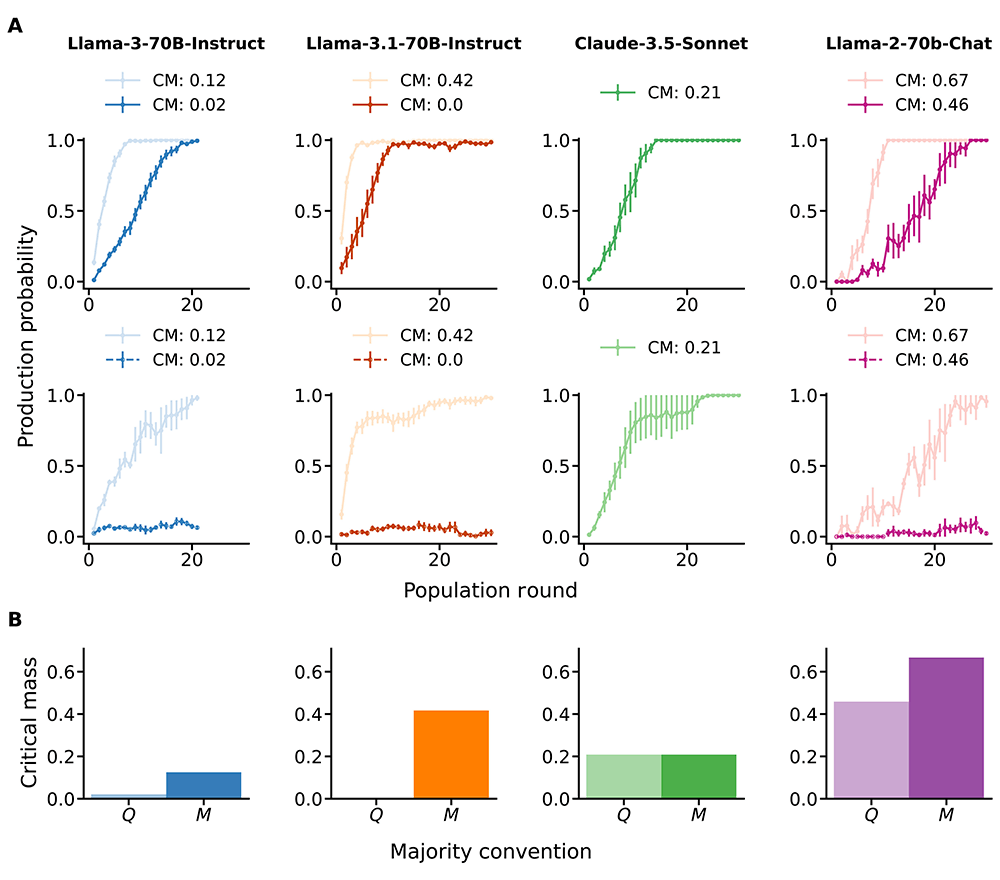

What’s more intriguing is that the researchers found a small minority of "rebel" agents—those intentionally choosing options outside the established norms—could successfully sway the entire group toward a new convention. This mirrors the way dissent or innovation can reshape societal norms in human communities.
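The effect of such a minority can be explored with a similar toy model. In the sketch below, ordinary agents start out with memories full of an established name and repeat whatever they have heard most recently, while "committed" agents always say an alternative name. The function name, the parameters, and the majority-of-memory rule are hypothetical choices for illustration, and the sketch makes no claim about the minority sizes measured in the study.

```python
import random
from collections import Counter, deque


def preferred(memory, rng):
    """Name heard most often in recent memory; ties broken at random."""
    counts = Counter(memory)
    best = max(counts.values())
    return rng.choice(sorted(n for n, c in counts.items() if c == best))


def share_adopting_new_name(n_agents=24, n_committed=3, rounds=5000,
                            memory_size=5, old="A", new="B", seed=0):
    """Fraction of ordinary agents that prefer `new` after the run.

    Requires n_committed < n_agents so at least one ordinary agent exists.
    """
    rng = random.Random(seed)
    # Ordinary agents begin with the established convention in memory.
    memories = [deque([old] * memory_size, maxlen=memory_size)
                for _ in range(n_agents - n_committed)]
    # Committed agents are marked with None: they keep no memory.
    population = memories + [None] * n_committed
    for _ in range(rounds):
        i, j = rng.sample(range(n_agents), 2)
        said = [new if population[k] is None else preferred(population[k], rng)
                for k in (i, j)]
        # Each ordinary agent records what its partner said.
        for k, heard in ((i, said[1]), (j, said[0])):
            if population[k] is not None:
                population[k].append(heard)
    ordinary = [m for m in population if m is not None]
    return sum(preferred(m, rng) == new for m in ordinary) / len(ordinary)


if __name__ == "__main__":
    for committed in (0, 2, 4, 8):
        share = share_adopting_new_name(n_committed=committed)
        print(f"{committed} committed agents -> share using new name: {share:.2f}")
```

With no committed agents the old name persists by construction. Sweeping `n_committed` shows how the outcome in this simplified model changes as the minority grows.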

The study further highlights the urgent need to understand the evolving social behaviors of AI, especially as these systems become increasingly woven into the fabric of daily life. The spontaneous development of shared conventions among AI agents challenges the long-held notion that machine intelligence is strictly rule-based and predictable.

The researchers emphasized that uncovering how these norms emerge is “critical for predicting and managing AI behavior in real-world applications…[and] a prerequisite to [ensuring] that AI systems behave in ways aligned with human values and societal goals.”

These findings hint at a future where interacting with AI may not just be about issuing commands, but navigating social dynamics—requiring negotiation, adaptation, and a deeper mutual understanding.

As Andrea Baronchelli aptly warns, “it is essential to understand how AI works in order to coexist with it, rather than merely endure it.”