Gemini’s Secret Weapon: How Google Is Making Gradients The New 'Smiling Face' Of AI

In the ever-evolving landscape of AI, designing something to represent the technology presents a unique challenge, and Google likens it to uncharted design territory.

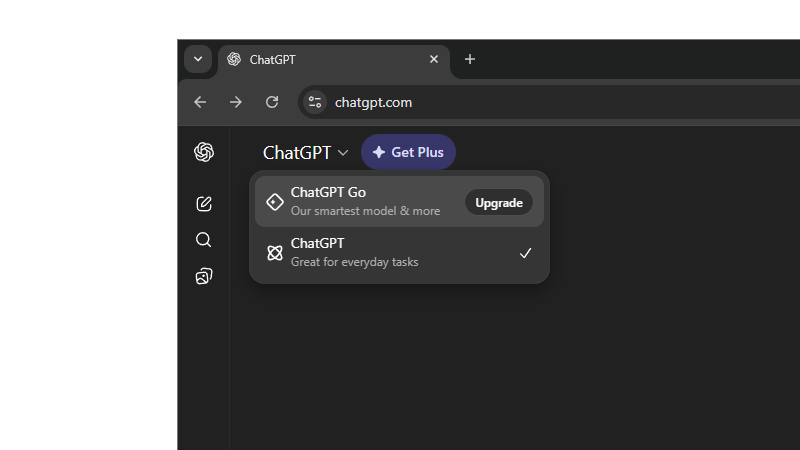