The term "C10K Problem" was coined by Dan Kegel in 1999, when he cited the Simtel FTP host serving 10,000 clients at once over 1 Gigabit Ethernet.

The C10K Problem is the problem of optimizing network sockets to handle a large number of clients at the same time. The name "C10K" is shorthand for ten thousand (10K) concurrent connections: many servers of the era could not scale beyond about 10,000 connections or clients because they ran out of resources.

Server optimization for this problem has been studied for years. Whether a server can manage that many users depends on the hardware's support for multiprocessing and multithreading and on the capabilities of the operating system. It also depends on how memory and I/O are managed.
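
To make the resource question concrete, here is a minimal sketch of the thread-per-connection model in Python, built on the standard library's `socketserver.ThreadingTCPServer`. It is an illustration under our own assumptions (the names `EchoHandler` and `ThreadedEchoServer` are hypothetical), not how any particular production server works. Each connected client gets its own OS thread, so 10,000 clients means 10,000 threads, each with its own stack, all of which the operating system must schedule.

```python
import socketserver


class EchoHandler(socketserver.BaseRequestHandler):
    """Hypothetical handler: runs in its own thread, one per connected client."""

    def handle(self) -> None:
        # Blocking calls are acceptable here because only this client's thread waits.
        while True:
            data = self.request.recv(4096)
            if not data:
                break  # the client closed its side of the connection
            self.request.sendall(data)  # echo the bytes back


class ThreadedEchoServer(socketserver.ThreadingTCPServer):
    """Hypothetical server: spawns a new thread for every accepted connection."""

    allow_reuse_address = True
    request_queue_size = 128  # listen() backlog; the stdlib default is 5
    daemon_threads = True     # do not block interpreter exit on client threads
```

Usage: `ThreadedEchoServer(("127.0.0.1", 0), EchoHandler)` binds to a free port, and `serve_forever()` starts accepting clients. The code stays simple because every handler can block, but memory and scheduling costs grow with one thread per client.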

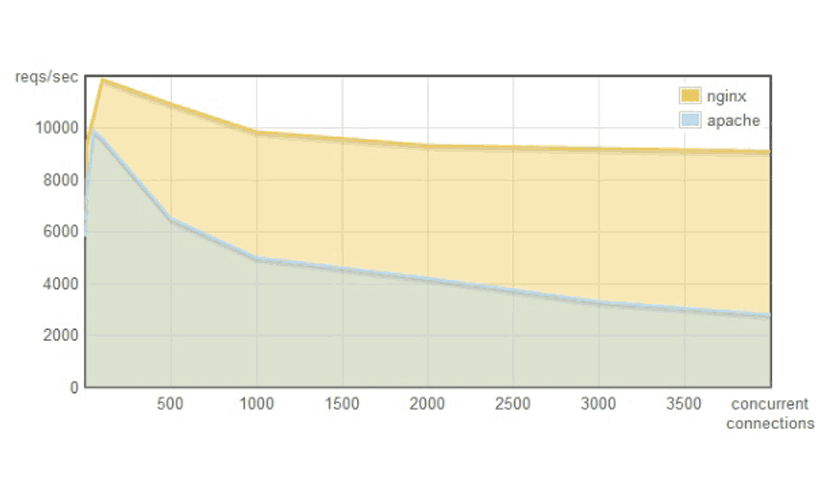

This in turn fueled wider adoption of event-driven servers such as Nginx and Node.js, which use small and more predictable amounts of memory under load. Predictable memory use is often a problem for threaded servers like Apache.
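
The event-driven model can be sketched with Python's standard `selectors` module. A single thread asks the operating system which sockets are ready (`DefaultSelector` typically uses epoll on Linux and kqueue on BSD/macOS) and services only those sockets. This is a minimal illustration, not how Nginx or Node.js are actually implemented. The helper `run_echo_loop` and its "stop after N clients" behavior are hypothetical, added only so the loop can terminate.

```python
import selectors
import socket


def run_echo_loop(listener: socket.socket, max_clients: int) -> int:
    """Echo data back to clients using ONE thread and an event loop.

    Hypothetical helper: `listener` must already be bound and listening.
    Stops after `max_clients` connections have closed and returns that count.
    """
    sel = selectors.DefaultSelector()
    listener.setblocking(False)
    sel.register(listener, selectors.EVENT_READ, data=None)
    finished = 0
    while finished < max_clients:
        for key, mask in sel.select(timeout=1.0):
            if key.data is None:
                # The listening socket is readable, so a new client is waiting.
                conn, _addr = listener.accept()
                conn.setblocking(False)
                # Per-client state is a small output buffer, not a thread stack.
                sel.register(conn, selectors.EVENT_READ, data=bytearray())
                continue
            conn, pending = key.fileobj, key.data
            if mask & selectors.EVENT_READ:
                chunk = conn.recv(4096)
                if not chunk:
                    # An empty read means the client closed its side.
                    sel.unregister(conn)
                    conn.close()
                    finished += 1
                    continue
                pending.extend(chunk)
            if pending:
                try:
                    sent = conn.send(pending)
                except BlockingIOError:
                    sent = 0  # kernel send buffer is full; retry when writable
                del pending[:sent]
            # Watch for writability only while output is still queued.
            wanted = selectors.EVENT_READ | (selectors.EVENT_WRITE if pending else 0)
            sel.modify(conn, wanted, data=pending)
    sel.unregister(listener)
    sel.close()
    return finished
```

In this sketch, each connection costs a registered file descriptor and a small buffer rather than a whole thread, which is why memory use grows slowly and predictably as clients are added. The trade-off is that no handler may block, since a single slow call would stall every client.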

Some internet companies that struggled to scale beyond that limit also chose to modify their operating system kernels, which helped keep their running costs low.

Solving the C10K Problem was a milestone that clearly demonstrated the strength of concurrent programming.

Since then, the term has been used for the general issue of handling a large number of clients. As computers and servers became more powerful, the idea was extended to C10M (10 million concurrent connections) in the 2010s.