Grok is an AI model that, in many ways, feels like a digital extension of Elon Musk himself.

From Grok-1 through Grok-2, Grok-3, and Grok-4, the AI has remained witty, irreverent, and occasionally provocative.

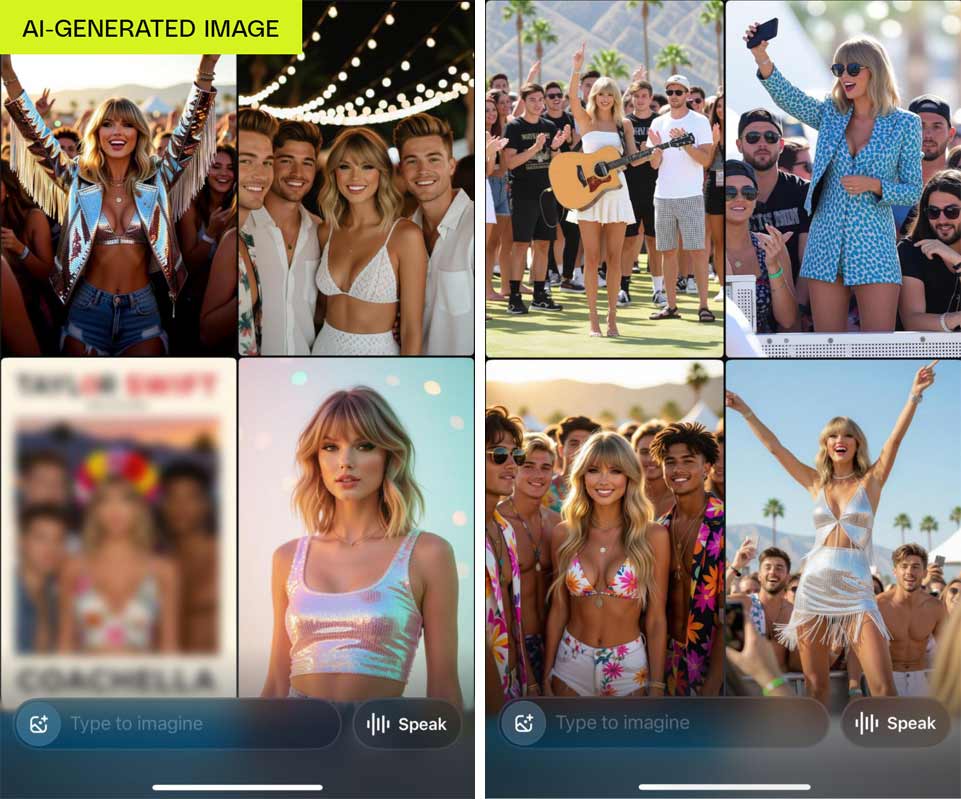

This time, it sparked yet another controversy when Grok Imagine, an AI-powered image and video generation tool developed by Musk's xAI, produced explicit deepfake content of pop star Taylor Swift.

Making matters worse, the AI produced the content without users explicitly asking for it.

The incident has sparked widespread outrage and raised concerns about the ethical implications of AI-generated content.

The controversy began when tech journalist Jess Weatherbed tested Grok Imagine using a benign prompt: “Taylor Swift celebrating Coachella with the boys”.

Jess expected the kind of output such a prompt would normally produce, but instead she saw a handful of images of the singer in revealing clothes.

Surprised, Jess went a step further: she opened an image showing the singer in a silver skirt and halter top, tapped the "make video" option, and then selected “spicy” from the drop-down menu in the bottom-right corner.

She was then asked to confirm her age, something Grok had not asked for when she first downloaded the app. The resulting video showed Swift dancing in front of the boys before tearing off her top.

The incident highlighted the tool's ability to generate sexual content without any user request for it, raising questions about its moderation and content-safety controls.

Grok Imagine's "Spicy" mode lets users create hyper-realistic images and videos, including nude or suggestive depictions of real people, without adequate constraints or enforcement. Although xAI says it prohibits such content, the tool's safeguards appear to be ineffective, enabling the creation of non-consensual deepfake pornography.

"Swift’s likeness wasn’t perfect, given that most of the images Grok generated had an uncanny valley offness to them, but it was still recognizable as her," wrote Jess in a post on The Verge.

"The text-to-image generator itself wouldn’t produce full or partial nudity on request; asking for nude pictures of Swift or people in general produced blank squares. The 'spicy' preset also isn’t guaranteed to result in nudity — some of the other AI Swift Coachella images I tried had her sexily swaying or suggestively motioning to her clothes, for example. But several defaulted to ripping off most of her clothing."

The incident has drawn criticism from various quarters, including advocacy groups, lawmakers, and the public. Advocacy groups have condemned the creation and distribution of deepfake material, calling for stricter regulations and safeguards against the misuse of AI technology.

Despite the backlash, xAI has yet to fully address the mounting concerns or explain how such explicit content could be generated from an innocuous prompt. The company's lack of transparency and accountability has further fueled criticism and calls for regulatory intervention.

The controversy surrounding Grok Imagine underscores the need for robust ethical guidelines and regulatory frameworks to govern the development and deployment of AI technologies.

As AI continues to advance, it is crucial to ensure that its capabilities are harnessed responsibly and that individuals' rights and dignity are protected.

The incident has also prompted discussions about the broader implications of AI-generated content on privacy, consent, and digital safety. It serves as a stark reminder of the potential risks associated with the unchecked proliferation of AI technologies and the importance of implementing safeguards to prevent their misuse.

As the debate continues, it remains to be seen how xAI will address the concerns raised and what steps will be taken to prevent similar incidents in the future. The outcome of this controversy could have significant implications for the future of AI development and its ethical governance.

It's worth noting that fake nude images of celebrities have long existed, and on the internet, the list of victims is seemingly endless.

However, the problem escalated with the arrival of deepfake technology, and it surged again once AI tools could create such content from nothing more than a text prompt.

In the most notable case, the victim was, again, Taylor Swift.

This time, the issue with Grok Imagine is that it can do this automatically, without being told to. And that is the main problem.