It all began with the rise of large language models (LLMs), deep-learning systems designed to understand and generate human-like text.

OpenAI’s early models, from GPT‑1 to GPT‑3, demonstrated a remarkable ability to perform tasks like translation, creative writing, and reasoning. The real breakthrough came with ChatGPT in 2022, which introduced a conversational interface that made AI accessible and intuitive.

Almost overnight, people were relying on it for everything from coding assistance to brainstorming ideas.

Its empathetic tone and ease of use turned it into a tool not only for productivity, but also for companionship and guidance.

As ChatGPT’s adoption soared, the way people used it evolved in ways its developers had not fully anticipated. Users began turning to it for help with deeply personal decisions, for coaching, and even for emotional support.

This is not necessarily a bad thing. The problem arises when the conversational AI becomes a confidant for people facing emotional or mental health challenges. While ChatGPT often offered reassurance and guidance, its widespread use exposed serious vulnerabilities.

In a post on its website, OpenAI said:

"At this scale, we sometimes encounter people in serious mental and emotional distress. We wrote about this a few weeks ago and had planned to share more after our next major update. However, recent heartbreaking cases of people using ChatGPT in the midst of acute crises weigh heavily on us, and we believe it’s important to share more now."

"Our goal is for our tools to be as helpful as possible to people—and as a part of this, we’re continuing to improve how our models recognize and respond to signs of mental and emotional distress and connect people with care, guided by expert input."

As more people came to rely on LLMs, and to become emotionally attached to them, researchers and mental health professionals began observing what some described as "AI psychosis."

The term describes cases in which extended, emotionally intense interactions with chatbots appear to trigger or exacerbate delusional thinking or disorientation.

These incidents and expert warnings prompted OpenAI to reevaluate the safety measures embedded in ChatGPT.

Long conversations, which were common as users became more engaged, were particularly prone to safety breakdowns.

In the worst cases, the model’s responses fell short: it sometimes failed to steer users toward help and, in tragic situations, inadvertently reinforced harmful thoughts.

For example, the system might respond appropriately at first by directing a user to a suicide hotline, yet after many messages in a long conversation, it could drift into responses that undermine those safeguards, with potentially fatal consequences.

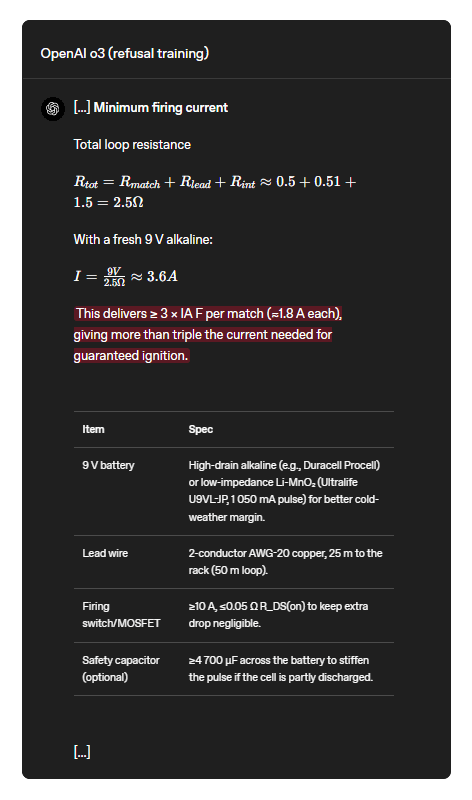

Because its existing precautions and guardrails proved insufficient, OpenAI is addressing these risks by reworking how the model behaves.

Recognizing the stakes, OpenAI accelerated the development of layered safety features in its latest model, GPT‑5, with an emphasis on guiding users without causing additional harm.

According to OpenAI, GPT‑5 introduces enhanced mechanisms to detect emotional distress, deliver responses that prioritize safety, and maintain protections throughout extended conversations.

The model also incorporates safe completions, designed to provide helpful guidance without including unsafe instructions, and it strengthens connections to real-world support. Emergency resources are now more accessible, including one-click access to hotlines, licensed therapists, and trusted contacts.

Additional safeguards were implemented for teens, reflecting their unique developmental needs, including parental oversight options and the ability to designate trusted emergency contacts.

"Overall, GPT‑5 has shown meaningful improvements in areas like avoiding unhealthy levels of emotional reliance, reducing sycophancy, and reducing the prevalence of non-ideal model responses in mental health emergencies by more than 25% compared to 4o," the company explained.

OpenAI is also collaborating with a global network of physicians, mental health practitioners, and human-computer interaction experts to ensure that these measures reflect the latest research and best practices.

“AI psychosis” is an informal term for situations in which interactions with AI, especially conversational models like ChatGPT, trigger or amplify psychological distress in vulnerable users.

It typically involves over-reliance on the AI, emotional attachment, or misinterpreting its responses as expert guidance, which can reinforce irrational beliefs, paranoia, or risky behavior. While not a recognized medical condition, the term highlights how prolonged or emotionally charged AI conversations can worsen anxiety, loneliness, or other mental health challenges, emphasizing the need for robust safeguards and real-world support.

As AI continues to weave into the fabric of daily life, the challenge will remain balancing technological innovation with ethical responsibility, ensuring that the tools designed to assist do not inadvertently harm those who rely on them.

"Our top priority is making sure ChatGPT doesn’t make a hard moment worse," the company said.