Google's strategy for Extended Reality (XR) is fundamentally driven by the Android XR platform.

This platform is designed to be the industry's first unified platform supporting a spectrum of devices, from immersive headsets to lightweight glasses, with the core experience built around the conversational power of Gemini. The vision is to evolve from merely smart devices to truly intelligent ones, where Gemini serves as a universal assistant that understands the user’s context, anticipates their needs, and handles tedious tasks to keep them present in the real world.

This platform-first approach, built in collaboration with key partners like Samsung and Qualcomm, ensures that all your favorite Google Play apps and experiences are integrated from the start.

The Android Show: XR Edition highlighted the immediate and future trajectory of this ecosystem, starting with a significant feature drop for the Samsung Galaxy XR headset, which is the first device powered by the platform.

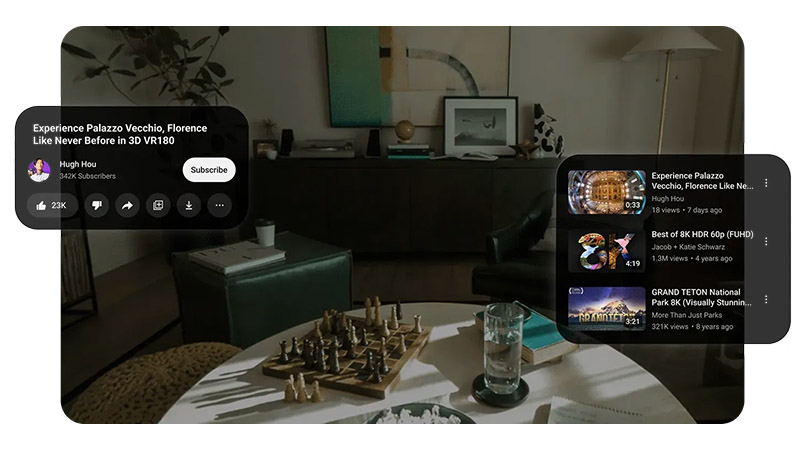

New updates for the Galaxy XR include PC Connect, a feature that allows users to wirelessly link their Windows PC to the headset, pulling their desktop or individual app windows into the XR space for a massive virtual screen perfect for gaming or productivity.

For connectivity, the new Likeness feature allows users to show up in video calls on Google Meet and other apps as a photorealistic digital avatar that mirrors their real-time facial expressions and gestures, promoting authentic communication even while wearing a headset.

Furthermore, a Travel Mode has been added to stabilize the view, allowing users to safely enjoy the headset as a personal cinema or workspace while in motion on a flight. A major system-level feature, Auto Spatialization, is slated to arrive next year and will give users the ability to transform virtually any 2D content, including games and videos, into an immersive 3D experience in real time with the press of a button.

ICYMI: See the biggest announcements from The Android Show | XR Edition — from glasses to headsets and everything in between. Catch the highlights https://t.co/PseNcRlkK8 #TheAndroidShow pic.twitter.com/vZBXVSM5QC

— Android (@Android) December 8, 2025

Moving beyond headsets, the platform is expanding to other form factors, notably two types of AI-powered glasses being developed in partnership with eyewear brands Warby Parker and Gentle Monster. Google emphasizes that these devices are "eyewear first."

The first model is designed for screen-free assistance, using a combination of built-in speakers, microphones, and a camera for hands-free interaction with Gemini, enabling functions like translating conversations live or taking photos. The second model, Display AI glasses, adds a small, transparent display that privately provides contextual information, such as navigation cues or real-time translation captions.

Demos showed Gemini identifying objects in the environment, retaining that memory for later queries, and using the advanced "Nano Banana" image editing model to instantly modify photos taken with the glasses.

A new category of device, the wired XR glasses, was also introduced, exemplified by XREAL’s Project Aura, which is positioned to bridge the gap between full headsets and lightweight glasses.

Project Aura uses optical see-through lenses with a 70-degree field of view to overlay digital windows onto the real world, creating a portable, high-immersion canvas for taking your workspace or entertainment on the go. The glasses are tethered to a "Puck" that houses the main computer and battery and doubles as a trackpad.

AI glasses that translate conversations live and bring immersive VR on-the-go... that's the vision.

See more at https://t.co/VoC06B4Ofl #TheAndroidShow pic.twitter.com/50Pd5s8iVz

— Android (@Android) December 8, 2025

To support this expanding ecosystem, Google has released Developer Preview 3 of the Android XR SDK, which opens up development for AI glasses and includes new libraries like Jetpack Glimmer for building beautiful glasses-optimized UIs and Jetpack Projected for bringing existing Android mobile apps directly to AI glasses.

This new set of tools is already being utilized by partners like Uber and GetYourGuide to create context-aware experiences, such as providing heads-up directions for Uber pickups or guided city food tours.

Google emphasizes that if you are already developing for Android, you are already developing for Android XR.

Next-gen video calls

A personal theater at 30,000 feet

Your PC with an infinite screen

All this and more are coming to the Galaxy XR headset https://t.co/VoC06B4Ofl #TheAndroidShow pic.twitter.com/wYyA2Kl0Qe

— Android (@Android) December 8, 2025

The history of virtual reality (VR) and augmented reality (AR) has seen several cycles of intense excitement followed by market retrenchment. The current phase is defined by the integration of sophisticated AI, which Google now sees as the key to mass consumer adoption.

The concept of immersive reality dates back nearly two centuries to the stereoscope in the 1830s, but the modern era of consumer XR began in the early 2010s with two distinct approaches.

On the AR side, Google Glass (first released to early adopters in 2013) embodied the promise of ambient, subtle computing: a device that overlaid basic digital information onto the real world. However, it was plagued by social stigma, privacy concerns over its camera, and limited utility, leading to its eventual failure in the consumer market.

Simultaneously, Oculus (founded in 2012, later acquired by Facebook/Meta) ignited the modern VR boom, focusing on high-fidelity gaming and completely immersive worlds.

This kick-started a hardware race among companies like HTC, Sony, and Meta to perfect the headset experience, but mass adoption remained constrained by clunky hardware, high cost, limited content, and the isolation of being completely cut off from the real world.

The market did not exactly "plummet" because of AI. Rather, the initial hype surrounding XR plateaued as the technology failed to move beyond niche markets like gaming and enterprise training.

The true disruption came as the exponential rise of AI shifted the focus away from pure immersion (VR) to intelligent, real-world utility (AR and AI). Devices were still too bulky and too expensive, and the user interface, which relied on awkward gestures or physical controllers, felt disconnected from the conversational, hands-free interaction users were beginning to expect from digital assistants.

The success of large language models like Gemini demonstrated that users prioritize an invisible, contextual, and deeply helpful AI experience. This insight has redefined the problem for XR: the core challenge is no longer just how to render a digital world, but how to make the technology disappear while making the user's real world more intelligent.

Today, at The Android Show | XR Edition, we announced futuristic features coming to Android XR, new devices, partners, features, developer tools, and so much more.

Read up on exciting updates here https://t.co/libOelAmv4

— Android (@Android) December 8, 2025

Google's strategy to revive and unify the Extended Reality (XR) market centers entirely on its Android XR platform and the omnipresence of Gemini AI.

By building Android XR on the familiar Android foundation, Google aims to solve the problem of content and fragmentation, ensuring that apps and developer tools are consistent across the entire XR spectrum.

The central innovation, however, is positioning Gemini as the primary user interface. Instead of forcing users to learn complex spatial gestures, Gemini allows for conversational, contextual, and hands-free interaction, essentially creating an "operating system within the operating system."

This approach directly addresses the historical pain points of VR/AR by easing hardware constraints, emphasizing real-world utility, and unifying the ecosystem.