We tend to trust images more than text. But image manipulation is easy these days, which is why we have come to rely on video to reveal the facts.

But videos can be tampered with too. If it's not Hollywood, it's deepfakes or other video-tampering tricks, done with tools that are often freely available on the internet.

Since videos have become a crucial tool for law enforcement, video tampering can cause huge problems.

With the combination of "deepfake" video manipulation technology and the security issues that plague so many connected devices, it has become increasingly difficult to confirm the integrity of footage.

This is why researchers are putting the technologies at their disposal to work on solutions.

One of them is called Amber Authenticate.

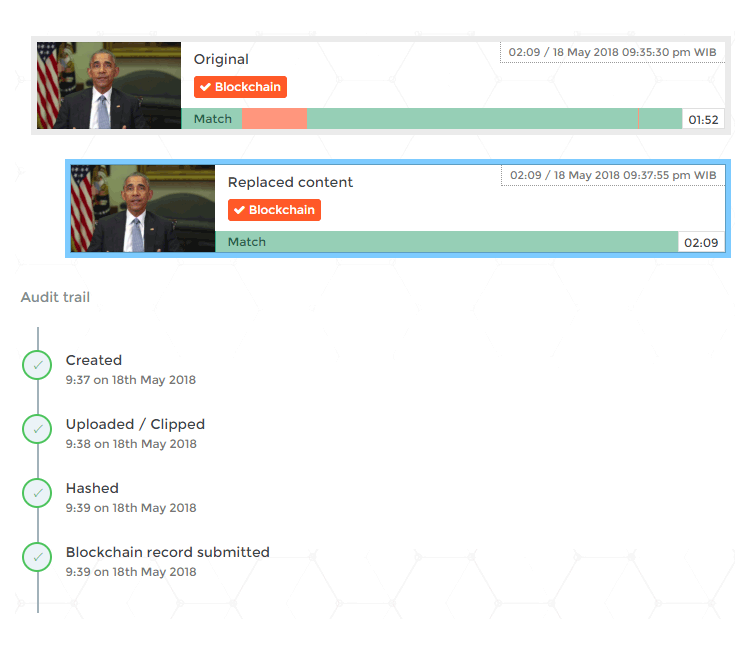

The tool can be installed on a device, where it runs in the background and analyzes video at user-determined intervals.

While running, the tool generates hashes (cryptographically scrambled representations of the data the device records) and then records those hashes on a public blockchain.
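To make the idea concrete, here is a minimal Python sketch of per-interval hashing. It is not Amber's code: it assumes SHA-256 as the hash function, treats each fixed-size chunk of bytes as one "interval", and uses a plain in-memory list (`public_ledger`, a hypothetical name) as a stand-in for a real blockchain.

```python
import hashlib
from typing import List, Tuple

def hash_segments(recording: bytes, segment_size: int) -> List[str]:
    """Split the recording into fixed-size segments and return the SHA-256
    hex digest of each one (assumed: one hash per interval)."""
    if segment_size <= 0:
        raise ValueError("segment_size must be positive")
    return [
        hashlib.sha256(recording[i:i + segment_size]).hexdigest()
        for i in range(0, len(recording), segment_size)
    ]

# Hypothetical stand-in for the public blockchain: an append-only list of
# (recording_id, segment_index, digest) entries.
public_ledger: List[Tuple[str, int, str]] = []

def publish(recording_id: str, digests: List[str]) -> None:
    """Append every segment digest to the (simulated) public ledger."""
    for index, digest in enumerate(digests):
        public_ledger.append((recording_id, index, digest))

footage = b"frame-data " * 1000           # 11,000 bytes of placeholder "video"
digests = hash_segments(footage, segment_size=4096)
publish("bodycam-001", digests)
print(len(digests))  # 3 segments: 4096 + 4096 + 2808 bytes
```

Only the short digests are published, not the footage itself, so anyone can later check a copy of the video against the public record without the video having to be made public.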

If the same footage is later run through the tool after it has been tampered with (for example, if its audio or video data has been changed), the algorithm that scans it will produce hashes that no longer match the ones recorded on the blockchain.

A mismatch shows that the video has indeed been manipulated.
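Verification can be sketched the same way: recompute the digests and compare them with the published ones. This again assumes SHA-256 over fixed-size byte segments, and `find_tampered_segments` is a hypothetical helper, not part of any Amber API. Because even a one-byte change yields a completely different digest, each segment either matches or it doesn't.

```python
import hashlib
from typing import List

def hash_segments(recording: bytes, segment_size: int) -> List[str]:
    # One SHA-256 digest per fixed-size segment (assumed hashing scheme).
    return [
        hashlib.sha256(recording[i:i + segment_size]).hexdigest()
        for i in range(0, len(recording), segment_size)
    ]

def find_tampered_segments(recording: bytes, published: List[str],
                           segment_size: int) -> List[int]:
    """Return indices of segments whose recomputed digest differs from the
    published one. Added or missing segments also count as tampering."""
    current = hash_segments(recording, segment_size)
    mismatched = [i for i, (a, b) in enumerate(zip(current, published)) if a != b]
    # Segments present in only one list (footage cut short or padded).
    mismatched.extend(range(min(len(current), len(published)),
                            max(len(current), len(published))))
    return mismatched

original = bytes(range(256)) * 40          # 10,240 bytes of placeholder footage
published = hash_segments(original, 4096)  # digests recorded at capture time

tampered = bytearray(original)
tampered[5000] ^= 0xFF                     # flip one byte inside segment 1
print(find_tampered_segments(original, published, 4096))         # []
print(find_tampered_segments(bytes(tampered), published, 4096))  # [1]
```

Comparing per segment, rather than hashing the whole file once, also tells an investigator roughly where in the footage the change happened.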

According to Amber CEO Shamir Allibhai:

"What we’re worried about is that, when you couple that with deepfakes, you can not only add or delete evidence but what happens when you can manipulate it? Once it’s entered into evidence it’s really hard to say what's a fake. Detection is always one step behind. With this approach it’s binary: Either the hash matches or it doesn’t, and it's all publicly verifiable."

A tool like this could appeal to human rights activists, free speech advocates, and law enforcement watchdogs who are wary of potential cover-ups.

The idea is that manufacturers of products like CCTV cameras and body cameras can license this kind of tool and run it on their devices.

"I’ve been taking the technology and putting it on a body camera, because there's no authentication mechanism right now on any of the cameras," explained Josh Mitchell, Amber Security Consultant.

"The fact that there’s nothing protecting that evidence from a malicious party is worrying, and manufacturers don’t seem very motivated to do anything. So if we have a provable, demonstrable prototype we can show that there are ways to ensure that all parties have faith in the video and how it was captured."

To make the tool useful, though, manufacturers or users must also set the hashing interval to balance the device's system constraints against what the camera may be filming.

Creating hashes every 30 seconds on a police body camera, for example, keeps the processing lightweight, but may let some potentially impactful manipulations slip through. On the other hand, hashing every second on a small surveillance camera may be overkill.

So the interval should strike a balance between what the use case needs and what the hardware can handle.
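A back-of-the-envelope sketch of that trade-off, assuming one hash per fixed interval: shorter intervals mean more hashes to compute and publish, but a mismatch can be pinned to a smaller window of footage. The function name `hashing_budget` and its returned fields are illustrative only, not Amber settings.

```python
import math

def hashing_budget(interval_seconds: float, hours: float = 1.0) -> dict:
    """Illustrative cost/granularity of a hashing interval (hypothetical)."""
    if interval_seconds <= 0:
        raise ValueError("interval must be positive")
    return {
        # Number of digests to compute and publish over the period;
        # a trailing partial interval still gets its own hash.
        "hashes_per_period": math.ceil(hours * 3600 / interval_seconds),
        # A mismatch can only be localized to one whole interval.
        "localization_window_s": interval_seconds,
    }

for interval in (1, 30):
    print(interval, hashing_budget(interval))
# 1 {'hashes_per_period': 3600, 'localization_window_s': 1}
# 30 {'hashes_per_period': 120, 'localization_window_s': 30}
```

Over an hour, one-second hashing means 30 times as many digests as 30-second hashing, which is the load a small camera would have to absorb.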

Allibhai said that Amber wants to make the project transparent and open to vetting by outside experts.

Previously, the U.S. Defense Advanced Research Projects Agency (DARPA) also created a method to spot deepfakes and video manipulation, but one based on AI.