As computers become more powerful and artificial intelligence gets smarter, creating a deepfake is just a couple of clicks away.

Deepfakes are easy and cheap to make, yet difficult to spot. Even trained eyes can fail to tell real footage from faked footage, let alone casual web users.

And this causes a big problem.

Deepfakes first appeared as a way to swap celebrities' faces onto porn stars' bodies. Because the technique can manipulate videos so convincingly that the result is hard to tell from the real thing, people can leverage it for various other malicious purposes.

While humans can fail, computers can excel.

At the 2019 Black Hat conference, the team at ZeroFOX presented an AI-based technique for identifying deepfake videos that examines only one specific part of the video, along with another tool that automates the process.

As ZeroFOX CTO Mike Price put it when presenting the work:

"One of the first points I want to make: humans are horrible detection machines."

Price cited a study called 'FaceForensics', which found that humans could only identify real images 80 percent of the time.

"That army of human analysts you're going to deploy to detect deepfakes is not going to work."

Machines can identify fakes and forgeries in videos far better than humans, but only under certain conditions.

For example, detection AIs can look for physiological cues such as blinking and breathing. However, as deepfakes become more realistic, generators trained on more varied footage can mask those traits, or even fake them completely.
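The article does not say how such detectors measure blinking. As a minimal sketch, assuming per-frame eye landmarks are already available from some face-landmark detector (not shown), one widely used heuristic is the eye aspect ratio (EAR): it drops sharply when the eyelids close, so runs of low-EAR frames can be counted as blinks. The function names are hypothetical, and the `threshold` and `min_frames` defaults are illustrative assumptions rather than tuned values.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR for six landmarks ordered p1..p6 around one eye.

    p1 and p4 are the eye corners; p2, p3 lie on the upper lid and
    p6, p5 on the lower lid directly beneath them.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ears: Sequence[float], threshold: float = 0.2, min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`.

    The default threshold and run length are assumptions for illustration.
    """
    blinks = 0
    run = 0
    for ear in ears:
        if ear < threshold:
            run += 1
            continue
        if run >= min_frames:
            blinks += 1
        run = 0
    if run >= min_frames:  # the clip ended mid-blink
        blinks += 1
    return blinks


def blinks_per_minute(ears: Sequence[float], fps: float, **kwargs) -> float:
    """Blink rate over the whole clip, given its frame rate."""
    minutes = len(ears) / fps / 60.0
    return count_blinks(ears, **kwargs) / minutes
```

A detector built on this idea might flag a clip whose blink rate is implausibly low for a person on camera. As noted above, newer deepfakes can learn to blink convincingly, which is why a cue like this is not reliable on its own.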

Examining PRNU (photo response non-uniformity) patterns in footage, the imperfections unique to the light sensor of a specific camera, can also help with identification. But once multiple camera angles are involved, the approach becomes less useful.
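To make the PRNU idea concrete, here is a heavily simplified sketch in pure Python. It assumes grayscale frames stored as nested lists, uses a 3x3 box blur as a stand-in for the more sophisticated denoising filters used in practice, and estimates the fingerprint with a plain average of residuals, a simplification of the estimators found in the literature. All function names are hypothetical. A camera's fingerprint is estimated from the noise residuals (frame minus its denoised version) of several frames, and a query frame's residual is compared against it with normalized cross-correlation: a high score suggests the same sensor.

```python
import math
from typing import List, Sequence

Image = List[List[float]]  # grayscale frame, indexed [row][column]


def box_blur(img: Image) -> Image:
    """3x3 mean filter; a crude stand-in for a proper denoising filter."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for yy in range(max(0, y - 1), min(h, y + 2)):
                for xx in range(max(0, x - 1), min(w, x + 2)):
                    total += img[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


def noise_residual(img: Image) -> Image:
    """High-frequency residual: frame minus its denoised version."""
    smooth = box_blur(img)
    return [[p - s for p, s in zip(row, srow)] for row, srow in zip(img, smooth)]


def estimate_fingerprint(frames: Sequence[Image]) -> Image:
    """Average the residuals of several frames from one camera.

    Scene content differs between frames and tends to average out, while
    the sensor's fixed pattern is present in every residual.
    """
    residuals = [noise_residual(f) for f in frames]
    h, w = len(residuals[0]), len(residuals[0][0])
    n = len(residuals)
    return [[sum(r[y][x] for r in residuals) / n for x in range(w)] for y in range(h)]


def ncc(a: Image, b: Image) -> float:
    """Normalized cross-correlation of two equally sized images, in [-1, 1]."""
    fa = [v for row in a for v in row]
    fb = [v for row in b for v in row]
    ma, mb = sum(fa) / len(fa), sum(fb) / len(fb)
    num = sum((x - ma) * (y - mb) for x, y in zip(fa, fb))
    den = math.sqrt(sum((x - ma) ** 2 for x in fa) * sum((y - mb) ** 2 for y in fb))
    return num / den if den else 0.0
```

A frame from a different camera carries a different sensor pattern, so its residual should correlate only weakly with the first camera's fingerprint. One plausible reason the approach struggles with multi-angle footage is that each camera would need its own reference fingerprint.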

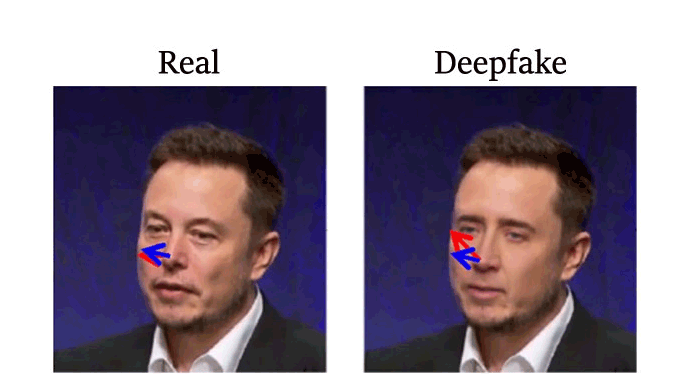

Another method looks deep into the pixels to see whether they have been realigned, or contain artifacts or misalignments in facial symmetry.

Unfortunately, all methods for spotting deepfakes, according to Price, share one universal flaw: their detection capabilities go down as the quality of the video goes down.

"More than likely, you're going to have access to the compressed video," said Price, explaining that to be effective, deepfakes need to be compressed and shared on various platforms.

Aware of these limitations in AI-powered deepfake detectors, Price proposed a solution.

Called 'Mouthnet', the tool looks only at the subject's mouth.

In theory, this should work because, according to Price, a deepfake has to alter the target's mouth in order to make them say something. "You may not touch the eyes, you may not touch the nose, you do have to touch the mouth," he explained.
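The talk as reported does not describe how Mouthnet isolates the mouth. A minimal, hypothetical preprocessing step, assuming a face-landmark detector that follows the common 68-point iBUG 300-W layout (as dlib's 68-point shape predictor does), in which indices 48 to 67 outline the lips, would take the bounding box of those points, pad it, clamp it to the frame, and crop. The padding fraction and function names are illustrative assumptions.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Box = Tuple[int, int, int, int]  # (left, top, right, bottom); right/bottom exclusive

# Mouth landmarks in the 68-point iBUG 300-W layout (0-indexed):
# outer lip 48-59, inner lip 60-67.
MOUTH_INDICES = range(48, 68)


def mouth_box(landmarks: Sequence[Point], width: int, height: int, pad: float = 0.25) -> Box:
    """Padded bounding box around the mouth landmarks, clamped to the frame."""
    xs = [landmarks[i][0] for i in MOUTH_INDICES]
    ys = [landmarks[i][1] for i in MOUTH_INDICES]
    x0, x1, y0, y1 = min(xs), max(xs), min(ys), max(ys)
    px, py = (x1 - x0) * pad, (y1 - y0) * pad
    left = max(0, math.floor(x0 - px))
    top = max(0, math.floor(y0 - py))
    right = min(width, math.ceil(x1 + px))
    bottom = min(height, math.ceil(y1 + py))
    return left, top, right, bottom


def crop(frame: List[list], box: Box) -> List[list]:
    """Cut the box out of a frame stored as rows of pixels."""
    left, top, right, bottom = box
    return [row[left:right] for row in frame[top:bottom]]
```

Only the cropped region would then be passed to a classifier, which is the point of the design: a model that never sees the eyes or nose cannot be thrown off by them.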

To train the AI, the team used 200 deepfake videos, then tested the model against 100 videos that were not used in training.

The results: 41 percent of the deepfakes were detected, but 10 percent were misclassified. When distinguishing single images taken from videos, Mouthnet identified the fakes 53 percent of the time.
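The report does not spell out exactly how these percentages were computed; for instance, "misclassified" could refer to real videos flagged as fake. When evaluating a detector, the usual quantities are the detection rate (the share of fakes caught) and the false positive rate (the share of real items wrongly flagged). The hypothetical helpers below compute both from labels and predictions, and show one simple way, averaging per-frame scores, to turn frame-level output into a video-level verdict. They do not reproduce ZeroFOX's evaluation, and the 0.5 threshold is an assumption.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class DetectionRates:
    detection_rate: float       # fakes flagged as fake / all fakes (true positive rate)
    false_positive_rate: float  # reals flagged as fake / all reals
    accuracy: float             # correct verdicts / all items


def detection_rates(is_fake: Sequence[bool], flagged: Sequence[bool]) -> DetectionRates:
    """Compare ground-truth labels with a detector's verdicts."""
    pairs = list(zip(is_fake, flagged))
    tp = sum(1 for truth, pred in pairs if truth and pred)
    fn = sum(1 for truth, pred in pairs if truth and not pred)
    fp = sum(1 for truth, pred in pairs if not truth and pred)
    tn = sum(1 for truth, pred in pairs if not truth and not pred)
    return DetectionRates(
        detection_rate=tp / (tp + fn) if tp + fn else 0.0,
        false_positive_rate=fp / (fp + tn) if fp + tn else 0.0,
        accuracy=(tp + tn) / len(pairs) if pairs else 0.0,
    )


def video_is_fake(frame_scores: Sequence[float], threshold: float = 0.5) -> bool:
    """Flag a video when its mean per-frame 'fake' score reaches the threshold."""
    return sum(frame_scores) / len(frame_scores) >= threshold
```

Frame-level and video-level numbers can differ because a video verdict pools evidence across many frames, which is one reason figures like the 41 and 53 percent above are not directly comparable.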

No, the numbers aren't jaw-dropping, but ZeroFOX's Matt Price said the training material was particularly difficult, so he's happy with the result. Over time, with more training and tweaking, the AI should improve.

Another tool the team created is called 'Deepstar', an "open source toolkit developed by ZeroFOX based on research into deepfake videos and the difficulty in quickly developing and enhancing detection capabilities."

The tool "automates some of the labor-intensive tasks required," the company said in a blog post.

"With the release of Deepstar, researchers and defenders will have an additional tool in their toolkit to assist in streamlining the process of deepfake detection research."

"With the likely abuse of deepfakes as part of an effort to misinform the public, we felt it was important to contribute our toolkit back to the community that has already done some great work, and to help defenders improve their ability to prepare for future challenges in this area."