Since deepfake technology was introduced, its popularity has made everybody a potential target of fakery.

The technology was initially introduced as a way to easily put celebrities' faces on porn stars' bodies. This was followed by the creation of footage showing high-profile figures and politicians saying what they weren't supposed to say.

Since then, the fight to curb fake news has gone from an uphill battle to something even more exhausting, but not impossible.

Deepfake videos can be quite realistic. And with more training and enhancements, the AI can create even more convincing footage. But just because the fakery can be so realistic, it doesn't mean that deepfakes don't have flaws.

Yes, they can deceive human eyes. But when algorithms are deployed to help, deepfakes can be spotted quite easily.

In an article for The Conversation, Siwei Lyu, a professor of computer science and director of the Computer Vision and Machine Learning Lab at the University at Albany, State University of New York, said:

"Now, our research can identify the manipulation of a video by looking closely at the pixels of specific frames. Taking one step further, we also developed an active measure to protect individuals from becoming victims of deepfakes."

In short, while human eyes take in the broader picture, algorithms can look far closer, down to individual pixels.

In two research papers (1) (2), the researchers described ways to detect deepfakes by spotting flaws that the fakers can’t easily fix.

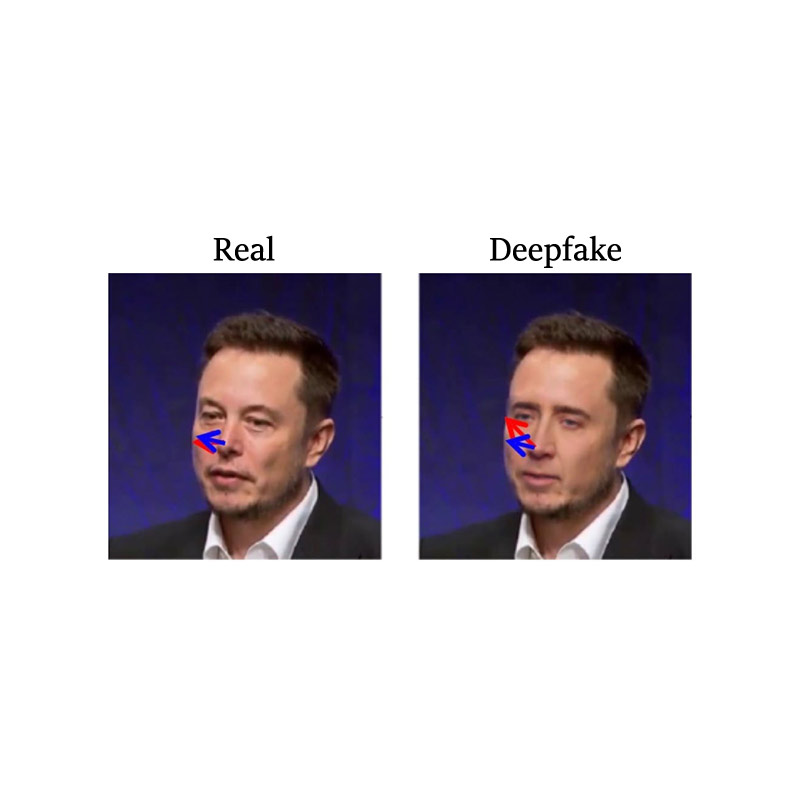

The researchers found that when a deepfake video is created, the synthesis algorithm generating the new facial expressions cannot always match the exact positioning of the subject's face, the lighting conditions, or the distance to the camera.

To create a deepfake, the algorithm needs to make the subject's face blend into its surroundings. This means the algorithm has to make some adjustments, such as rotating, resizing or distorting the face.

This process apparently leaves digital artifacts in the resulting image.
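One way to picture such an artifact is a loss of fine detail: a face that has been resized to fit the target video tends to look smoother than the untouched pixels around it. The sketch below is only a toy illustration of that idea, not the researchers' detector. The `sharpness` measure, the `shrink_and_enlarge` helper and the random 16x16 patches are all assumptions made for the example.

```python
import random


def sharpness(patch):
    """Mean squared difference between horizontally adjacent pixels."""
    diffs = [
        (row[x + 1] - row[x]) ** 2
        for row in patch
        for x in range(len(row) - 1)
    ]
    return sum(diffs) / len(diffs)


def shrink_and_enlarge(patch):
    """Halve the resolution by 2x2 averaging, then scale back up by
    repeating each averaged pixel. Assumes a square patch of even size."""
    size = len(patch)
    out = [[0.0] * size for _ in range(size)]
    for by in range(0, size, 2):
        for bx in range(0, size, 2):
            mean = (
                patch[by][bx] + patch[by][bx + 1]
                + patch[by + 1][bx] + patch[by + 1][bx + 1]
            ) / 4
            for y in (by, by + 1):
                for x in (bx, bx + 1):
                    out[y][x] = mean
    return out


# Hypothetical grayscale patches with values in [0, 1].
rng = random.Random(0)
background = [[rng.random() for _ in range(16)] for _ in range(16)]
face = [[rng.random() for _ in range(16)] for _ in range(16)]

# Simulate the resizing step a face goes through before being pasted in.
warped_face = shrink_and_enlarge(face)

print("background sharpness: ", sharpness(background))
print("warped face sharpness:", sharpness(warped_face))
```

The warped patch scores lower than the untouched background, and that kind of mismatch between a face and its surroundings is something an algorithm can measure even when a viewer cannot see it.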

Early deepfake videos had these flaws plainly visible to the human eye, from distortion to blurry borders or artificially smooth skin. Even untrained eyes could catch them. While deepfakes have become more realistic, the transformation still leaves evidence.

With algorithms, these flaws can be spotted, even when trained eyes can’t see the differences.

The researchers said that deepfake algorithms are not yet capable of fabricating faces in 3D. Instead, they generate a 2D image of the face, and try to rotate, resize and distort it to fit the subject's movement in the original video.

"They don’t yet do this very well, which provides an opportunity for detection," added Lyu. "We designed an algorithm that calculates which way the person’s nose is pointing in an image. It also measures which way the head is pointing, calculated using the contour of the face. In a real video of an actual person’s head, those should all line up quite predictably. In deepfakes, though, they’re often misaligned."
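The comparison Lyu describes can be sketched as an angle check between two direction estimates. This is a minimal illustration that assumes the two directions have already been estimated elsewhere as 3D vectors. The function names and the 15-degree threshold are hypothetical, not values from the research.

```python
import math


def angle_between(u, v):
    """Angle in degrees between two 3D direction vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cos))


def looks_misaligned(nose_dir, head_dir, threshold_deg=15.0):
    """Flag a frame when the nose and the head point in clearly
    different directions. The threshold is an assumed value."""
    return angle_between(nose_dir, head_dir) > threshold_deg


# Hypothetical estimates for two frames (camera looks along the z axis).
real_frame = ((0.0, 0.0, 1.0), (0.05, 0.0, 1.0))
fake_frame = ((0.0, 0.0, 1.0), (0.5, 0.0, 1.0))

print(looks_misaligned(*real_frame))
print(looks_misaligned(*fake_frame))
```

In practice a single frame would rarely be decisive. A detector would more plausibly look at how often the two directions disagree across a whole video.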

Whether used as a weapon for revenge, to manipulate financial markets or to destabilize international relations, deepfakes are indeed a threat. Anyone can be the subject of fakery.

The science of detecting deepfakes is an arms race between the creators of deepfake videos, who keep working to produce more convincing fakery, and researchers looking for ways to beat them at their own game.

Previous attempts to fight deepfakes include DARPA's work on tools to automatically spot deepfakes; 'Amber Authenticate', which aims to protect videos from deepfakes using cryptography and blockchain; GitHub's ban on deepfake source code on its platform; and Virginia becoming one of the very first U.S. states to ban deepfakes by law.

And this research by Lyu is just another step forward.

On top of the deepfake-detecting algorithm, the researchers also found ways to add specially designed noise to digital photographs or videos. The noise is invisible to human eyes but can fool face detection algorithms. This technology can conceal the pixel patterns face detectors use to locate a face, and create decoys that suggest there is a face where there isn't one.
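The general idea behind this kind of noise can be sketched with a toy example. The code below is not the researchers' method: it assumes a made-up linear "face detector" with a logistic score, and nudges every pixel by a small, bounded amount in the direction that lowers that score. The weights, pixel values and `epsilon` are all hypothetical.

```python
import math


def detector_score(pixels, weights, bias=0.0):
    """Toy face detector: a logistic score between 0 and 1."""
    z = sum(p * w for p, w in zip(pixels, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def add_protective_noise(pixels, weights, epsilon):
    """Shift each pixel by at most epsilon against the sign of its weight.
    For a linear detector this is the direction that lowers the score."""
    def sign(w):
        return (w > 0) - (w < 0)

    return [
        min(1.0, max(0.0, p - epsilon * sign(w)))
        for p, w in zip(pixels, weights)
    ]


# Hypothetical 256-pixel image and detector weights.
weights = [1.0 if i % 2 == 0 else -1.0 for i in range(256)]
pixels = [0.52 if i % 2 == 0 else 0.48 for i in range(256)]

protected = add_protective_noise(pixels, weights, epsilon=0.03)

print("score before:", detector_score(pixels, weights))
print("score after: ", detector_score(protected, weights))
print("largest pixel change:", max(abs(a - b) for a, b in zip(pixels, protected)))
```

No single pixel moves by more than `epsilon`, yet the many small shifts add up and push the toy detector's score from "face" to "no face". Real face detectors are far more complex, so this only illustrates why small, coordinated changes can matter.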

Once this technology is widespread, the internet, which has become the source of training data for deepfakes, will contain fewer real faces that have not been treated with the invisible noise. This should pollute the training data, making future deepfake footage worse.

This in turn should slow down the process of people creating deepfakes, and also make deepfakes more flawed and easier to detect.

"As we develop this algorithm, we hope to be able to apply it to any images that someone is uploading to social media or another online site," continued Lyu.

"During the upload process, perhaps, they might be asked, 'Do you want to protect the faces in this video or image against being used in deepfakes?' If the user chooses yes, then the algorithm could add the digital noise, letting people online see the faces but effectively hiding them from algorithms that might seek to impersonate them."