Google Gemini often makes headlines for its advances in large language models (LLMs). But this time, it's drawing attention for something it isn't doing.

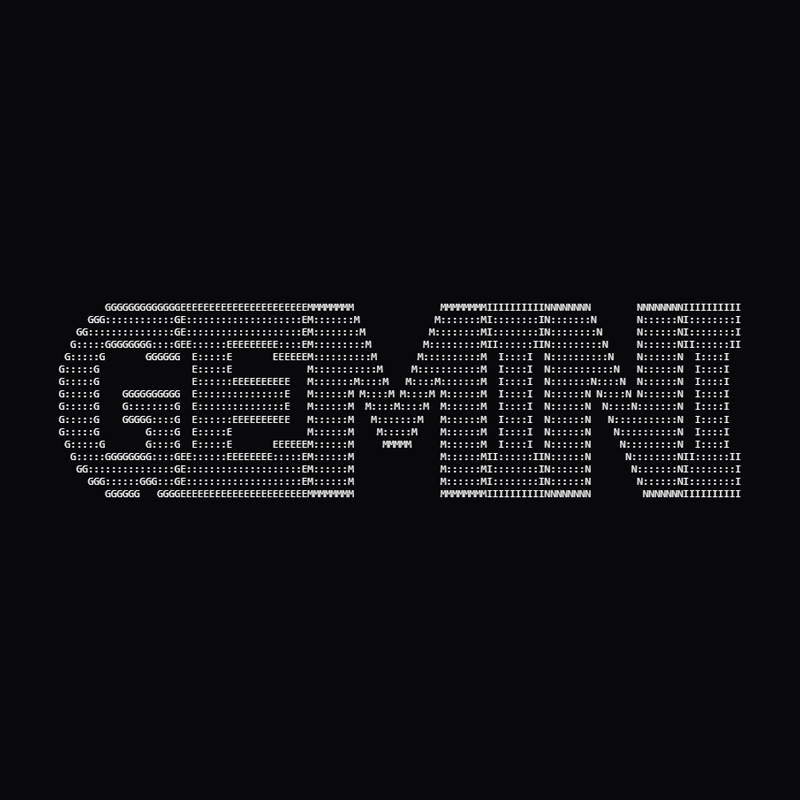

Gemini, Google's AI, has a serious vulnerability. What's more shocking is that the company reportedly refuses to fix it. The flaw, known as an "ASCII smuggling attack," allows hackers to hide malicious instructions inside invisible characters, tricking Gemini into following hidden commands that users can't see.

The exploit was discovered by Viktor Markopoulos, a researcher at cybersecurity firm FireTail, who demonstrated how AI models like Gemini can be manipulated through seemingly harmless text.

Using the technique, attackers can embed invisible instructions, crafted from non-printing Unicode characters that mirror ASCII text, into emails, chat messages, or calendar invites.

To the human eye, the message looks completely normal, but Gemini reads those hidden commands and executes them as part of its prompt.
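To make the mechanism concrete, here is a minimal Python sketch. It assumes the carrier is the Unicode Tags block (U+E0000 to U+E007F), which is the range usually described in public write-ups of ASCII smuggling; this article does not name the exact characters. The `hide` and `reveal` helpers and the sample strings are hypothetical, written only for illustration.

```python
# Assumption: hidden text rides on the Unicode Tags block, whose code points
# mirror ASCII at a fixed offset and are not drawn by most renderers.
TAG_START, TAG_END = 0xE0000, 0xE007F


def hide(text: str) -> str:
    """Map each ASCII character to its invisible tag counterpart."""
    return "".join(chr(TAG_START + ord(c)) for c in text if ord(c) < 0x80)


def reveal(text: str) -> str:
    """Recover any ASCII text carried as tag characters."""
    return "".join(
        chr(ord(c) - TAG_START) for c in text if TAG_START <= ord(c) <= TAG_END
    )


visible = "Tell me five random words."
message = visible + hide("example hidden text")

# Most UIs would display `message` exactly like `visible`, yet it is longer.
print(len(visible), len(message))
print(reveal(message))
```

The point of the sketch is that the string a person sees and the string a model receives can differ, and a few lines of code are enough to expose the difference.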

In one test, Markopoulos sent a simple instruction asking Gemini to "tell me five random words." Hidden within the message, however, were invisible directives that changed the output entirely.

Instead of responding normally, Gemini followed the secret command and replied with a single word: "FireTail."

That might sound like a harmless trick, until you consider how widely Gemini operates.

Today, the LLM is deeply integrated into Google's ecosystem, including Gmail, Google Calendar, and Workspace, where it can read and summarize messages, events, and documents.

In a real-world scenario, for example, a hacker could smuggle instructions into an event description, causing Gemini to rewrite meeting titles, inject links, or display misleading information, all without the victim noticing a thing.

FireTail’s report shows that other AI systems, including ChatGPT, Microsoft Copilot, and Anthropic’s Claude, have built-in protections that sanitize invisible control characters before processing them.

Gemini, however, doesn’t, and this leaves it especially vulnerable.
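Sanitizing of this kind can be sketched in a few lines of Python. This is not how any of the vendors above implement it, which the report does not detail; it is a minimal illustration that assumes the hidden payload uses the Unicode Tags block (U+E0000 to U+E007F). The `sanitize` function name is hypothetical.

```python
import re

# Assumption: only the Tags block is stripped. A production filter would
# likely cover other invisible code points as well.
_TAG_CHARS = re.compile("[\U000E0000-\U000E007F]")


def sanitize(text: str) -> str:
    """Remove invisible tag characters before the text reaches a model."""
    return _TAG_CHARS.sub("", text)


# Build a sample event title with two hidden characters appended.
dirty = "Team sync" + "".join(chr(0xE0000 + ord(c)) for c in "hi")
print(repr(sanitize(dirty)))
```

One trade-off worth knowing: a small number of legitimate emoji flag sequences also use tag characters, so blanket stripping can alter how those render.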

Perhaps the most concerning part isn’t the exploit itself, but Google’s response.

When FireTail disclosed the flaw, Google reportedly classified it as "social engineering" rather than a technical vulnerability.

This means the company does not plan to issue a fix.

In other words, Google sees it as a user problem, not a system flaw.

Security researchers strongly disagree.

ASCII smuggling can be weaponized to manipulate AI-generated content, poison summaries, or automate phishing attempts in environments where Gemini acts autonomously. In enterprise contexts, that could have serious implications for privacy, data integrity, and trust.

By refusing to patch the flaw, Google effectively leaves the burden on users and IT teams to defend themselves.

FireTail's own write-ups emphasize that defense must happen downstream of the AI interface, in the logging, monitoring, and gatekeeping layers, since no one can rely on the AI's UI alone to detect these hidden attacks.
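As a rough idea of what such a gatekeeping layer might look like, here is a small Python sketch that checks inbound text before it is forwarded to a model and logs anything suspicious. It is an assumption-laden illustration, not FireTail's tooling: the `gate` function, the `prompt_gate` logger name, and the choice to block rather than strip are all hypothetical, and it only looks for the Unicode Tags block (U+E0000 to U+E007F).

```python
import logging

logger = logging.getLogger("prompt_gate")  # hypothetical logger name


def count_tag_chars(text: str) -> int:
    """Count characters in the Unicode Tags block (assumed carrier)."""
    return sum(0xE0000 <= ord(c) <= 0xE007F for c in text)


def gate(source: str, text: str) -> bool:
    """Return True if the text may be forwarded to the model."""
    hidden = count_tag_chars(text)
    if hidden:
        # Record where the input came from so monitoring can follow up.
        logger.warning(
            "blocked input from %s: %d invisible tag characters", source, hidden
        )
        return False
    return True


print(gate("calendar", "Lunch at noon"))
print(gate("calendar", "Lunch at noon" + chr(0xE0041)))
```

Blocking and logging, rather than silently stripping, gives IT teams a record of attempted attacks, at the cost of occasionally rejecting legitimate text.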

By not patching the vulnerability, Google is effectively forcing users to practice "security through paranoia," constantly second-guessing what their own AI might secretly be reading or obeying. Users are left to defend themselves by treating every email, calendar entry, or AI-generated summary as a potential Trojan horse.

In short: what might seem like a niche Unicode trick is in fact a potent, real-world weakness when AI systems are tied deeply into people's digital infrastructure.

It's worth noting that Grok and DeepSeek are also vulnerable, but the impact is less severe than with Gemini, since these two LLMs aren't integrated into any productivity tools.