Artificial intelligence is still a growing field, and humans still don't know much about it. But by learning along the way, we continue to create better machine learning software to solve more tasks.

While computers can beat humans at chess and Go, they are still far from being trusted with lives. For example, the danger of self-driving cars was highlighted when Tesla's autonomous car collided with a truck after its AI mistook it for a cloud, killing its passenger.

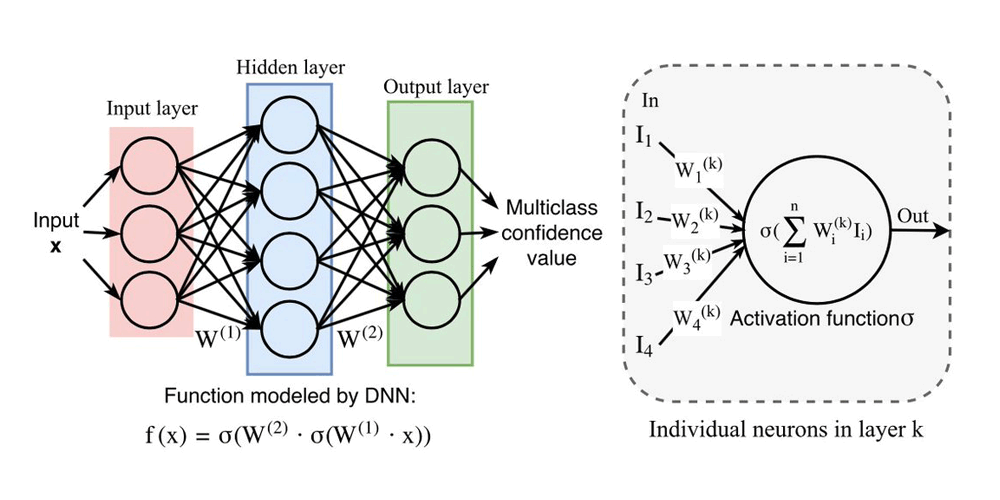

Deep learning, the form of machine learning behind much of today's AI, is modeled after the human brain. With layers of artificial neurons that process and consolidate information, it is able to develop a set of rules to solve complex problems.
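To make the idea of "layers of artificial neurons" a little more concrete, here is a minimal sketch of a two-layer network in plain Python. The weights, the layer sizes, and the names `dense` and `forward` are hypothetical and hand-picked for illustration; a real deep learning system learns millions of such weights from data.

```python
import math

def relu(x):
    """Pass positive values through, clamp negatives to zero."""
    return max(0.0, x)

def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases, activation):
    """One layer: every neuron takes a weighted sum of all inputs, then applies an activation."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]

# Hypothetical, hand-picked weights (not learned from data).
HIDDEN_WEIGHTS = [[1.0, -1.0], [-1.0, 1.0]]
HIDDEN_BIASES = [0.0, 0.0]
OUTPUT_WEIGHTS = [[1.0, 1.0]]
OUTPUT_BIASES = [-0.5]

def forward(inputs):
    """Feed the inputs through the hidden layer, then consolidate into one output score."""
    hidden = dense(inputs, HIDDEN_WEIGHTS, HIDDEN_BIASES, relu)
    return dense(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES, sigmoid)[0]
```

With these particular weights, the output score goes above 0.5 only when the two inputs differ, a rule that is not obvious from looking at the numbers alone. That is the "black box" problem in miniature.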

However, humans still don't understand exactly how AI makes decisions using its deep learning systems. Often, AIs make decisions inside what's called a "black box": humans can't readily understand why a neural network chooses one thing over another.

This black box exists because AI uses machine learning to perform millions of tests in a short amount of time, comes up with a solution, and then performs millions more tests to improve and arrive at an even better solution.

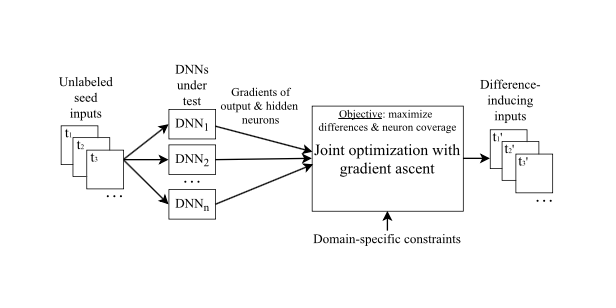

Researchers at Columbia and Lehigh Universities have created a method for error-correcting deep learning networks. Called 'DeepXplore', the tool helped the researchers reverse-engineer complex AIs and find a workaround for the "black box" problem.

The method for tackling the black box issue involves the software throwing a series of problems at the AI until it reaches inputs the AI can no longer make sense of.

The automated "white box" testing performed by DeepXplore exposes flaws in a neural network by tricking the AI into making mistakes. It's a way for the researchers to automatically error-check the thousands to millions of neurons in a deep learning neural network.

Debugging the neural networks in self-driving cars is a slow and tedious process, and there is no way of measuring how thoroughly the logic within the network has been checked for errors. Manually generated test images can be randomly fed into the network until one triggers a wrong decision, telling the car to steer into the guardrail, for example, instead of away from it.

Co-developer Suman Jana of Columbia University told EurekAlert:

A faster technique, called adversarial testing, automatically generates test images and alters them incrementally until one is able to trick the system.
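The incremental idea behind adversarial testing can be sketched in a few lines. This is a deliberately simplified illustration, not how production tools work: `toy_classifier` is a hypothetical stand-in for a neural network, and the search simply nudges every pixel by a fixed step, whereas real adversarial testing typically uses the network's gradients to choose the changes.

```python
def toy_classifier(pixels):
    """Hypothetical stand-in for a network: labels an image by its mean brightness."""
    mean = sum(pixels) / len(pixels)
    return "road" if mean < 0.5 else "sky"

def adversarial_test(model, image, step=0.01, max_steps=200):
    """Alter the image a little at a time until the model's decision changes.

    Returns (altered_image, steps_taken), or (None, max_steps) if the
    decision never flipped within the step budget.
    """
    original_label = model(image)
    candidate = list(image)
    for steps_taken in range(1, max_steps + 1):
        # Brighten every pixel slightly, keeping values in [0, 1].
        candidate = [min(1.0, p + step) for p in candidate]
        if model(candidate) != original_label:
            return candidate, steps_taken
    return None, max_steps
```

For example, `adversarial_test(toy_classifier, [0.40, 0.45, 0.42, 0.43])` returns an image that is only slightly brighter than the original but is labeled "sky" instead of "road".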

DeepXplore is able to find a wider variety of bugs than random or adversarial testing by using the network itself to generate test images that are likely to cause neuron clusters to make conflicting decisions. To simulate real-world conditions, photos are lightened and darkened.

Incremental increases or decreases in lighting, or changes that mimic dust on a camera lens or a person or object blocking the camera's view, might leave an AI designed to steer a vehicle unable to decide which direction to go.
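These kinds of image changes are simple to express in code. The sketch below assumes a grayscale image stored as a list of rows with pixel values from 0 to 255; the function names `adjust_brightness`, `occlude`, and `brightness_variants` are made up for this example and are not taken from DeepXplore.

```python
def adjust_brightness(image, delta):
    """Lighten (delta > 0) or darken (delta < 0) every pixel, clamped to 0..255."""
    return [[max(0, min(255, p + delta)) for p in row] for row in image]

def occlude(image, top, left, height, width, fill=0):
    """Cover a rectangular patch, mimicking dirt or an object blocking part of the view."""
    result = [row[:] for row in image]  # copy so the original stays untouched
    for r in range(top, min(top + height, len(result))):
        for c in range(left, min(left + width, len(result[r]))):
            result[r][c] = fill
    return result

def brightness_variants(image, step=10, count=3):
    """Build progressively lighter and darker copies of the same image."""
    variants = []
    for i in range(1, count + 1):
        variants.append(adjust_brightness(image, i * step))
        variants.append(adjust_brightness(image, -i * step))
    return variants
```

Each variant still shows the same scene to a human eye, so a trustworthy network should make the same decision for all of them.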

This is because the change can cause one set of neurons to tell the car to turn left and another set to tell it to go right. Inferring that the first set misclassified the photo, DeepXplore automatically retrains the network to recognize the darker image and fix the bug.
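The "conflicting decisions" idea can be illustrated with a small sketch. Everything here is an assumption made for the example: the three toy models stand in for the sets of neurons described above, each input is reduced to a single brightness value, and the correct answer is inferred by majority vote, which is one plausible way to decide which side misclassified the photo.

```python
from collections import Counter

# Hypothetical toy models: each steers left or right based on image brightness,
# but with a slightly different cutoff.
def model_a(brightness):
    return "left" if brightness < 0.50 else "right"

def model_b(brightness):
    return "left" if brightness < 0.55 else "right"

def model_c(brightness):
    return "left" if brightness < 0.52 else "right"

def find_conflicts(models, inputs):
    """Return (input, votes) pairs for every input the models disagree on."""
    conflicts = []
    for x in inputs:
        votes = [model(x) for model in models]
        if len(set(votes)) > 1:
            conflicts.append((x, votes))
    return conflicts

def majority_label(votes):
    """Assumption: treat the most common decision as the correct one."""
    return Counter(votes).most_common(1)[0][0]

def build_retraining_set(models, inputs):
    """Pair each conflicting input with its inferred label, ready for retraining."""
    return [(x, majority_label(votes)) for x, votes in find_conflicts(models, inputs)]
```

Running `build_retraining_set([model_a, model_b, model_c], [0.30, 0.51, 0.53, 0.80])` keeps only the two borderline inputs, 0.51 and 0.53, because those are the ones where the models split. The clear-cut inputs reveal nothing and are dropped.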

Using optimization techniques, researchers have designed DeepXplore to trigger as many conflicting decisions with its test images as it can while maximizing the number of neurons activated.

Through optimization, DeepXplore is able to trigger up to 100 percent neuron activation within a system. This means that DeepXplore sweeps the entire AI network it's dealing with, looking for errors and trying to provoke them. It's a "break everything" approach that lets testers debug and error-check advanced neural networks and their AI integrations.
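One way to picture "percent neuron activation" is as a coverage score, similar to code coverage in ordinary software testing. The sketch below is a simplified reading of that idea: it assumes we already recorded each neuron's activation for each test input, and the function name and the threshold of 0.0 are choices made for this example.

```python
def neuron_coverage(activations_per_input, threshold=0.0):
    """Fraction of neurons that fired above the threshold for at least one test input.

    activations_per_input: one list of neuron activations per test input,
    all of the same length.
    """
    if not activations_per_input or not activations_per_input[0]:
        return 0.0
    num_neurons = len(activations_per_input[0])
    covered = set()
    for activations in activations_per_input:
        for index, value in enumerate(activations):
            if value > threshold:
                covered.add(index)
    return len(covered) / num_neurons

# Two test inputs exercise three of the four neurons; a third input reaches the last one.
two_inputs = [[0.9, 0.0, 0.0, 0.2], [0.0, 0.0, 0.7, 0.1]]
three_inputs = two_inputs + [[0.0, 0.4, 0.0, 0.0]]
print(neuron_coverage(two_inputs))    # 0.75
print(neuron_coverage(three_inputs))  # 1.0
```

A test set that leaves neurons untouched leaves part of the network's logic unchecked, which is why pushing this number toward 100 percent matters.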

With this, the researchers aim to isolate and eliminate the biases that cause AI to reach conclusions that could either endanger lives or discriminate against people. It's one of the biggest challenges facing machine learning developers.

According to co-developer Kexin Pei, of Columbia University:

The AI Now Institute has cautioned important government facilities against the use of black box AI systems until we can really figure them out. But with DeepXplore, we're expecting that time to come sooner than predicted.