On social media networks, hashtags have become ubiquitous. Their usage, however, can be both good and bad.

Hashtags are words or phrases preceded by a hash mark (#). On social media, they can be used within a post to identify keywords or topics of interest and to make those posts easier to search for. But here, this helpful functionality has opened the door to the darkest corners of social media and the internet.

It was discovered that Instagram hosted a number of users who traded images of sexually exploited children as if they were collectibles.

All those users had to do was search using the 'correct' hashtags.

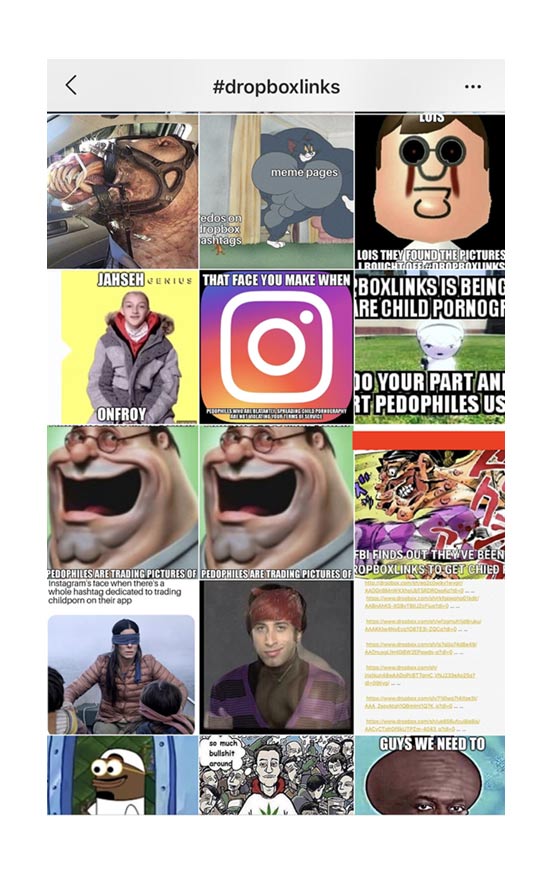

These exploitative image transactions started with one of several hashtags, chiefly #dropboxlinks, which was among the most popular. By combining that hashtag with other 'correct' hashtags, users could land on posts and then start conversations in which they allegedly swapped links to Dropbox folders containing illicit content.

"DM young girl dropbox," said one of the traders. "DM me slaves," said another. "I'll trade, have all nude girl videos," posted another. "Young boys only," one person posted several times.

Among those pedophiles, there was only one rule: send first, then receive from others.

Instagram has previously acknowledged that hashtags have problems. Each time it identifies offending hashtags, the platform restricts them, but some remain and lurk under its radar.

Since users can create any hashtag imaginable, it's difficult for the platform to police them all.

"Keeping children and young people safe on Instagram is hugely important to us," said Instagram's spokesperson. "We do not allow content that endangers children, and we have blocked the hashtags in question."

The platform also said it is "developing technology which proactively finds child nudity and child exploitative content when it's uploaded so we can act quickly."

But because Instagram didn't react quickly enough to prevent this, the teens who first discovered these hashtag-based pedophile rings became furious and took matters into their own hands. They started flooding the hashtag with thousands of meme images, making it increasingly difficult for the traders to find others to swap links with.

Days after the problem surfaced, Instagram finally filtered the hashtag(s). Still, the accounts trading child sexual abuse imagery remain.

Realizing that Instagram is becoming aware of their operations, many are attempting to move their trading to other platforms, such as WhatsApp and Kik. Some have started selling their caches to interested buyers via PayPal or Bitcoin.

Dropbox, which sees the issue as a crime, said:

Further reading: Google And Facebook Ad Networks Had A Child Porn Problem: Scrambling For A Fix