Facebook is not a media company. But no matter how often the company says it isn't, people see it as one.

Because Facebook doesn't see itself as a media company, it has never taken on the responsibility of removing fake news reports and content. Doing so is essentially an editorial function, one that Facebook doesn't really have.

But with the platform plagued by fake news and false reports, Facebook has received numerous complaints that it cannot control the flow of information. Given its influence, Facebook can indeed affect how people think, a point many agreed on after the U.S. election in 2016.

To address the issue, Facebook, which wants to ensure the best user experience, is trying to reduce the use of its platform as a tool for political influence.

To do this, Facebook is implementing measures to crack down on two specific sources of annoyance and/or concern.

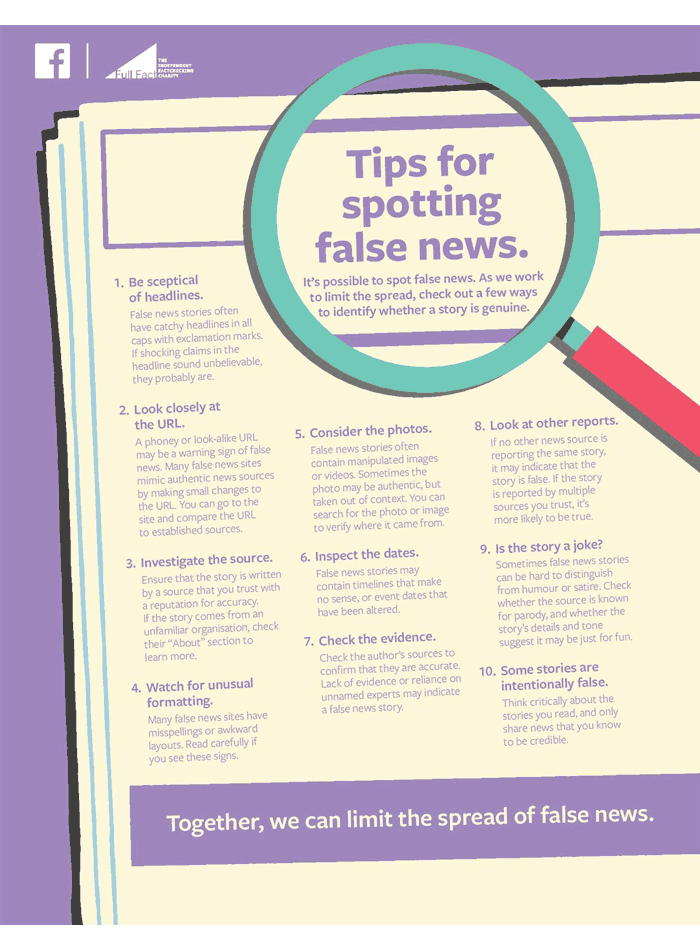

First of all, Facebook is getting more proactive in stopping the spread of political misinformation. Here, the social media giant is banning false information regarding voting requirements. It also fact-checks fake reports of violence.

Facebook has set clear parameters for how the process should work, which should help the company crack down on misinformation and lessen its potential contribution to political outcomes.

In addition, Facebook has announced updated algorithms for its News Feed that demote links to websites that republish and redistribute content without permission and flood those pages with ads. To do this, Facebook will catch sites that post duplicate content using algorithms that compare the main text content of a page with all other text content to find potential matches.

The way the algorithms work is similar to Google's duplicate content detection system, though less technical. The algorithms are meant to demote pages where a match is detected.
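Facebook has not published how the comparison is done, so the following is only a rough sketch of one common way to compare a page's main text against other text: word shingles scored with Jaccard similarity. The function names, the shingle size `k`, and the `threshold` value are all assumptions made for illustration, not Facebook's actual method.

```python
import re


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Split text into overlapping k-word sequences (shingles)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        # Very short text: treat the whole thing as a single shingle.
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Share of shingles the two sets have in common (0.0 to 1.0)."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def find_likely_copies(
    candidate_text: str,
    known_pages: dict[str, str],
    threshold: float = 0.5,  # hypothetical cut-off, chosen for illustration
) -> list[str]:
    """Return the URLs of known pages whose text closely matches the candidate."""
    candidate = shingles(candidate_text)
    return [
        url
        for url, text in known_pages.items()
        if jaccard(candidate, shingles(text)) >= threshold
    ]
```

A page that republishes an article nearly word for word shares most of its shingles with the original and scores close to 1.0, while unrelated text scores close to 0.0. A system working at Facebook's scale would need something faster than comparing every pair of pages directly, but the underlying idea of measuring text overlap is the same.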

"We’re rolling out an update so people see fewer posts that link out to low-quality sites that predominantly copy and republish content from other sites without providing unique value," explained Facebook.

No, Facebook is not a media company, and it's not intending to be one.

But the changes should give Facebook more flexibility to start removing fake news and questionable content from its platform. Facebook doesn't have an editorial license and doesn't want one. It is not making any calls on what is and isn't true in general terms.

What it is doing is giving itself a way to strictly pick and choose which content is allowed and which isn't.

The feature, alongside Facebook's ad transparency tools, third-party fact-checking of reports, restrictions on issue ads and so forth, should help Facebook become a better place for its users while steering itself away from political scandals.

If everything works as intended, that is.