Facebook, the social network giant, owns the world's largest photo library. It has been using facial recognition software to help people tag photos since 2010. On March 17th, 2014, the company announced that it had developed software that uses advances in artificial intelligence known as "deep learning" to identify people at near "human performance" levels. This means that Facebook will be able to identify faces in photos almost as well as you can.

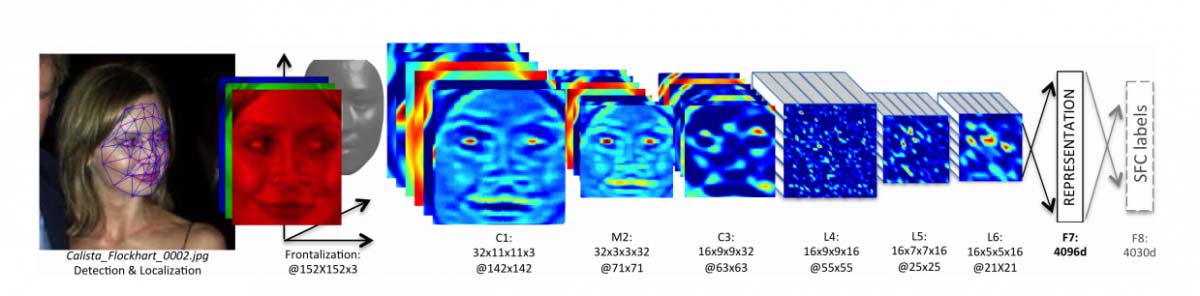

The project, named "DeepFace", processes images in two steps. In the first step, the software adjusts the angle of the face in question so that it looks straight at the camera, using a 3D model of an average head to rotate the face virtually. In the next step, DeepFace simulates a neural network (software meant to imitate an animal's central nervous system) to produce a numerical description of the reoriented face. This description is then compared with the description computed from another photo. If the two descriptions are similar enough, the software decides that the photos show the same person.
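
The comparison in the second step can be sketched in a few lines of Python. This is only an illustration: the article does not say which similarity measure DeepFace uses, so cosine similarity, the `0.8` threshold, and the four-number descriptors below are all assumptions made for the example. A real descriptor would be far longer.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def same_person(desc_a, desc_b, threshold=0.8):
    """Decide 'same person' when the descriptors are similar enough.

    The threshold is a made-up value for illustration, not DeepFace's.
    """
    return cosine_similarity(desc_a, desc_b) >= threshold

# Hypothetical descriptors standing in for the network's output.
photo_1 = [0.9, 0.1, 0.4, 0.2]
photo_2 = [0.8, 0.2, 0.5, 0.1]   # close to photo_1
photo_3 = [0.1, 0.9, 0.1, 0.7]   # far from photo_1

print(same_person(photo_1, photo_2))
print(same_person(photo_1, photo_3))
```

With these made-up numbers, the first pair is judged to be the same person and the second pair is not.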

Facebook's current facial recognition software suggests friends to tag when users upload a photo, using information such as the distance between the eyes and the position of the nose relative to the eyes in profile pictures and already tagged photos. Although this feature has steadily improved, Facebook still sometimes suggests the wrong people to tag because several friends have similar facial structures. DeepFace is meant to reduce such mistakes by using techniques from deep learning, a field of artificial intelligence specializing in understanding irregular types of data.

The research paper, "DeepFace: Closing the Gap to Human-Level Performance in Face Verification", describes the approach as follows:

> In modern face recognition, the conventional pipeline consists of four stages: detect => align => represent => classify. We revisit both the alignment step and the representation step by employing explicit 3D face modeling in order to apply a piecewise affine transformation, and derive a face representation from a nine-layer deep neural network.
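
A "piecewise affine transformation" splits the face into small triangles and moves each one with its own affine map. The Python sketch below shows the idea for a single triangle, using barycentric coordinates to carry a point from a source triangle to a destination triangle. The function names and the coordinates are invented for this example and are not taken from the paper.

```python
def barycentric(p, tri):
    """Return the barycentric weights of point p with respect to triangle tri."""
    (ax, ay), (bx, by), (cx, cy) = tri
    px, py = p
    det = (by - cy) * (ax - cx) + (cx - bx) * (ay - cy)
    w_a = ((by - cy) * (px - cx) + (cx - bx) * (py - cy)) / det
    w_b = ((cy - ay) * (px - cx) + (ax - cx) * (py - cy)) / det
    return w_a, w_b, 1.0 - w_a - w_b

def warp_point(p, src_tri, dst_tri):
    """Map p from src_tri into dst_tri with the affine map the triangles define."""
    weights = barycentric(p, src_tri)
    x = sum(w * vertex[0] for w, vertex in zip(weights, dst_tri))
    y = sum(w * vertex[1] for w, vertex in zip(weights, dst_tri))
    return (x, y)

# Hypothetical triangle on a tilted face and its target on the frontal view.
src = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
dst = [(10.0, 10.0), (30.0, 10.0), (10.0, 20.0)]

print(warp_point((0.25, 0.25), src, dst))  # (15.0, 12.5)
```

Repeating this for every triangle in the face mesh, each with its own source and destination corners, gives the "piecewise" part of the transformation.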

DeepFace uses more than 120 million parameters, with several locally connected layers, to identify these faces. It was developed using a database of about 4.4 million labeled faces from 4,030 Facebook accounts. This means that each identity contributed an average of over a thousand samples (about 1,090) for the network to learn from.

The algorithms have also been tested successfully for face verification in YouTube videos, which was more challenging because the imagery is not as sharp as in photos.

According to Facebook's research, as reported by MIT's Technology Review, a person asked whether two unfamiliar photos of faces show the same person answers correctly 97.53 percent of the time. Facebook's DeepFace scores 97.25 percent on the same challenge.
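
Accuracy figures this close are easier to compare as error rates. The short Python sketch below does the arithmetic using only the two percentages quoted above. The helper name `error_rate` is invented for this example.

```python
def error_rate(accuracy_percent):
    """Convert an accuracy percentage into an error percentage."""
    return 100.0 - accuracy_percent

human = error_rate(97.53)      # human accuracy quoted in the article
deepface = error_rate(97.25)   # DeepFace accuracy quoted in the article
gap = deepface - human

print(f"human error:    {human:.2f}%")     # human error:    2.47%
print(f"DeepFace error: {deepface:.2f}%")  # DeepFace error: 2.75%
print(f"gap:            {gap:.2f} points") # gap:            0.28 points
```

Put another way, over 10,000 pairs of photos DeepFace would make roughly 28 more mistakes than a person would.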

CEO Mark Zuckerberg has expressed deep interest in building out Facebook's artificial intelligence capabilities when speaking to investors in the past. His ambition actually stretches beyond facial recognition to analyzing the text of status updates and comments to decipher mood and context.

Yaniv Taigman, one of Facebook's artificial intelligence scientists, said that the error rate has been reduced by over 25 percent relative to earlier software that handles the same task. In 2007 Taigman co-founded Face.com, which Facebook later acquired. Prior to the acquisition, Face.com built apps and APIs that could scan billions of photos every month and tag the faces in them. Taigman developed DeepFace with fellow Facebook research scientists Ming Yang and Marc’Aurelio Ranzato, along with Tel Aviv University faculty member Lior Wolf.

With DeepFace, Facebook has essentially caught up to humans when it comes to recognizing a face. Because the project is still in its development phase, the algorithms do not yet affect Facebook's 1.2 billion users.