Google is quietly but decisively expanding what Gemini can be, turning it from a conversational assistant into a platform for building tools.

Opal, Google’s experimental no-code mini app builder, is now embedded directly into the Gemini web app. Instead of living as a separate Labs experiment, Opal is becoming part of how users create and customize Gemini itself, signaling a shift toward user-built AI workflows rather than one-size-fits-all chat experiences.

Opal allows anyone to create AI-powered mini apps using natural language, without writing code.

Inside Gemini on the web, it appears as a new type of experimental Gem, making it possible to spin up reusable tools on the fly.

These mini apps can be designed for specific tasks, reused across sessions, and shared more easily than before, turning Gemini into something closer to a personal AI toolkit than a single assistant personality.

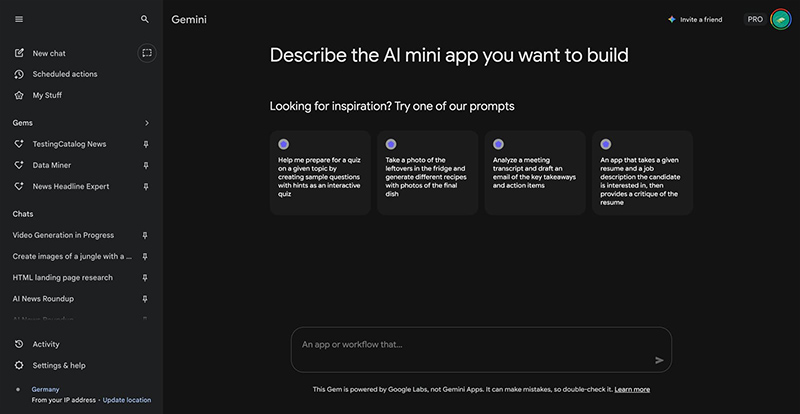

Google Labs (@GoogleLabs) announced the integration on December 17, 2025:

"Opal, meet @Geminiapp. We’ve now brought Opal, our tool for building AI-powered mini apps, directly into the Gemini web app as an experimental Gem. You can find the Opal Gem in your Gems manager and start creating reusable mini apps to unlock even more customized Gemini…"

A key improvement comes from changes to Opal’s visual editor.

Prompts are no longer just blocks of text; they can now be viewed as structured lists of steps that show exactly how a mini app works.

This makes it much easier to understand, edit, and refine workflows, even for users who are not deeply technical. The system effectively translates conversational instructions into a transparent workflow, lowering the barrier to experimentation while making behavior more predictable.
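To make the idea of a prompt as a structured list of steps concrete, here is a minimal Python sketch. It is purely illustrative: the `Step` and `MiniApp` classes, their fields, and the example workflow are hypothetical and do not reflect Opal's actual internal format or any Google API.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    """One stage of a hypothetical mini app workflow."""
    name: str
    instruction: str


@dataclass
class MiniApp:
    """A hypothetical mini app: a title plus an ordered list of steps."""
    title: str
    steps: list[Step] = field(default_factory=list)

    def outline(self) -> str:
        """Render the workflow as a numbered list a user could review."""
        lines = [self.title]
        for number, step in enumerate(self.steps, start=1):
            lines.append(f"{number}. {step.name}: {step.instruction}")
        return "\n".join(lines)


# Assumed example workflow, invented for illustration only.
app = MiniApp(
    title="Blog post summarizer",
    steps=[
        Step("Get input", "Ask the user for a blog post URL."),
        Step("Summarize", "Condense the post into three bullet points."),
        Step("Format", "Present the bullets with a one-line headline."),
    ],
)

print(app.outline())
```

Because each step is a separate item rather than a sentence buried in one long prompt, a user could reorder, edit, or remove a single step without rewriting the rest, which is the kind of transparency the step view is meant to provide.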

For users who want deeper control, Google is keeping the advanced path open.

From within Gemini, creators can jump directly to the Opal Builder on opal.google, where more granular editing options are available. This split approach lets beginners stay within Gemini’s simplified interface while power users can fine-tune logic, prompts, and structure in a dedicated environment.

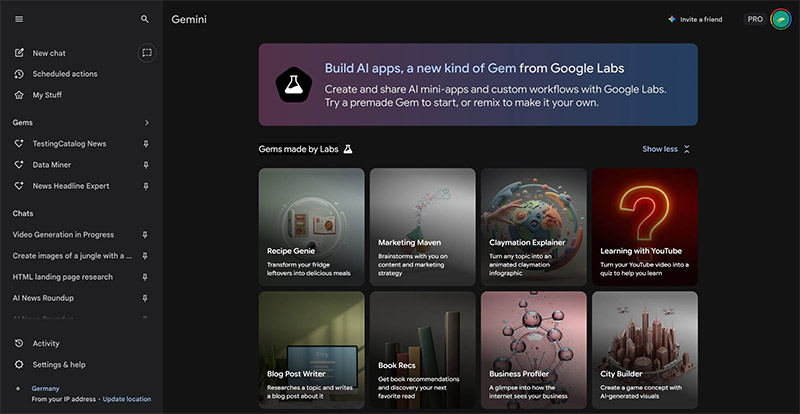

The integration also changes how Gems are managed.

The updated Gems Manager now separates Google Labs-built Gems from personal and custom ones, with Opal workflows appearing seamlessly under a Labs section.

Existing Opal creations automatically migrate into this space, avoiding friction for early testers. When creating a new Gem, users are guided through a workflow builder that auto-generates steps, system prompts, and even visual elements, all of which can be previewed instantly with text or voice input.

Sharing has also been streamlined. Instead of relying on external permissions, Gems built with Opal can now be published with a shareable link and launched full screen, making them feel more like lightweight apps than experiments. This positions Gemini not just as an AI assistant, but as a hub where small, purpose-built AI tools can be created, tested, and distributed.

However, there are still limits.

Opal inside Gemini is rolling out gradually, appears restricted to certain regions such as the U.S., and is currently available only in English.

It also operates outside of Gemini Apps Activity, meaning usage data isn’t managed under the same controls as standard Gemini interactions. These constraints underline its experimental status, even as Google pushes it closer to the core Gemini experience.

Taken together, the move reflects Google’s broader strategy following the momentum of Gemini 3: deepen integration, reduce friction, and encourage users to build on top of the platform rather than just talk to it.

By folding Opal directly into Gemini, Google is betting that the next phase of AI adoption will be driven not only by better answers, but also by giving users the power to shape how AI works for them.