TensorFlow is Google's free and open-source software library for dataflow and differentiable programming across a range of tasks.

It includes an extensible ecosystem of libraries, tools, and community support for building and deploying machine-learning-powered applications.

For developers targeting mobile and IoT devices, Google has introduced TensorFlow Lite 1.0, with improvements that include selective registration and quantization during and after training for faster, smaller models.

TensorFlow Lite 1.0 is designed specifically for projects that run AI models on mobile and IoT devices.

Because these devices tend to have less processing power and memory than their larger counterparts, the lite version is meant to help developers speed up the creation and release of AI models and trim unnecessary overhead from deployment.

In short, TensorFlow Lite is a lightweight version of TensorFlow that takes machine learning down a mobile-friendly path.

For example, TensorFlow Lite can run on the Raspberry Pi and on the Coral Dev Board, which Google unveiled earlier.


"We are going to fully support it," said TensorFlow engineering director Rajat Monga. "We’re not going to break things and make sure we guarantee its compatibility. I think a lot of people who deploy this on phones want those guarantees."

As with the original TensorFlow and most other AI workflows, TensorFlow Lite begins with training. After that, the trained model is converted into a compact format that runs on mobile devices.

According to TensorFlow Lite engineer Raziel Alvarez, speaking at the TensorFlow Dev Summit held at Google's offices in Sunnyvale, California, TensorFlow Lite is already deployed on more than two billion devices.

Alvarez added that TensorFlow Lite is increasingly making TensorFlow Mobile obsolete, except for developers who want to use it for training, and that a solution for them is in the works.

TensorFlow Lite was first introduced at Google's I/O developer conference in May 2017 and released as a developer preview later that year.

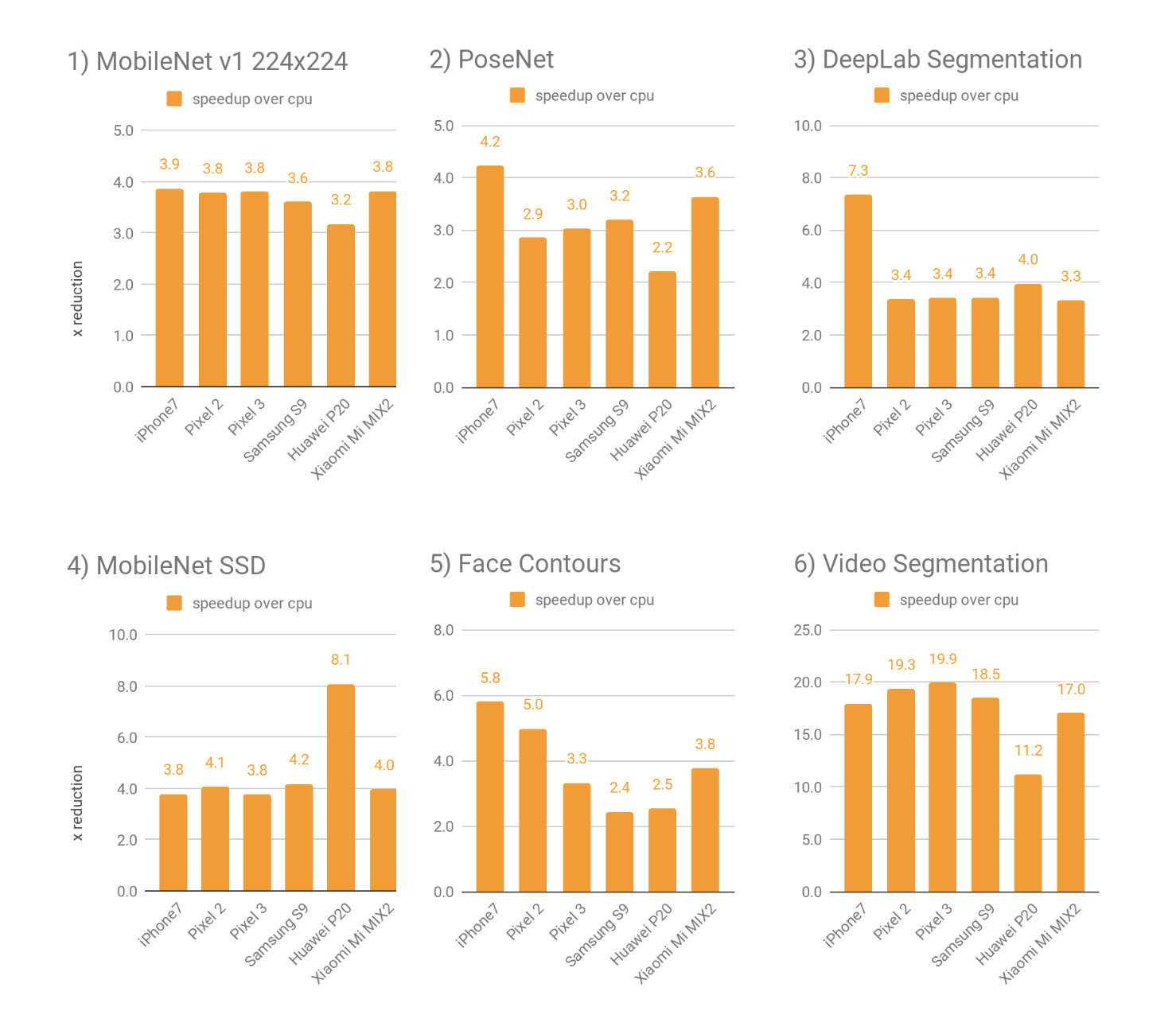

According to Google, TensorFlow Lite is fast: mobile GPU acceleration through delegates can make model execution up to 7 times faster, and Edge TPU delegates can run up to 64 times faster than a floating-point CPU.

As for quantization, it has yielded up to 4 times compression for some models.
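The 4x figure follows from storage arithmetic: a 32-bit float takes 4 bytes, while an 8-bit integer takes 1. The sketch below is a simplified, pure-Python illustration of affine 8-bit quantization (a scale plus a zero point), assuming made-up example weights; the function names `quantize_int8` and `dequantize` are hypothetical, and this is not TensorFlow Lite's actual implementation.

```python
from array import array


def quantize_int8(values):
    """Hypothetical helper: affine (asymmetric) quantization of floats to int8."""
    lo, hi = min(values), max(values)
    # Stretch the range to include 0.0 so zero is exactly representable.
    lo, hi = min(lo, 0.0), max(hi, 0.0)
    # 256 int8 levels span 255 steps; fall back to 1.0 if all values are zero.
    scale = (hi - lo) / 255 or 1.0
    # Zero point: the int8 value that represents 0.0.
    zero_point = round(-128 - lo / scale)
    # Map each float to the nearest level and clamp to the int8 range.
    q = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Hypothetical helper: approximately recover the original floats."""
    return [(x - zero_point) * scale for x in q]


# Illustrative weights only, not taken from any real model.
weights = [0.12, -0.5, 0.33, 0.98, -0.07, 0.0, 0.45, -0.81]
q, scale, zp = quantize_int8(weights)

float_bytes = len(array("f", weights).tobytes())  # 4 bytes per float32 value
int8_bytes = len(array("b", q).tobytes())         # 1 byte per int8 value
print(float_bytes, int8_bytes, float_bytes / int8_bytes)  # → 32 8 4.0
```

Keeping 0.0 exactly representable matters because zeros (for example, from padding) are common in neural networks. The trade-off is precision: each restored weight can differ from the original by up to about half a quantization step.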

Google's confidence also stems from its own use of TensorFlow Lite in apps and services including Gboard, Google Photos, AutoML, and Nest.

Google Assistant also uses it for all of its CPU model computation when it needs to respond to queries while the user is offline.

Overall, the TensorFlow team has done a lot of backend work to improve TensorFlow Lite's usability and practicality.

Alongside TensorFlow Lite, Google also released an alpha of TensorFlow 2.0 for a simpler user experience, TensorFlow.js 1.0, and version 0.2 of Swift for TensorFlow, for developers who write code in Apple's Swift programming language.

Google has also released TensorFlow Federated and TensorFlow Privacy.

Going forward, Google plans to make GPU delegates generally available, add control flow support, introduce several CPU performance optimizations, expand coverage, and finalize the APIs so that TensorFlow Lite can be made generally available.