In an era when computers and mobile devices are common household items, we have become increasingly glued to screens and trust them as a primary source of information.

News, entertainment, sports and politics can all be consumed easily now that the internet is available in more places. Unfortunately, so can fake news.

Fighting fake news was already a hassle, and it became even harder in 2017, when AI enabled the creation of so-called "deepfakes".

In a further step forward for AI, researchers have dramatically extended the deepfake idea with a technique whose results are even more convincing.

The development comes from an international team of researchers led by Germany’s Max Planck Institute for Informatics, together with the Technical University of Munich, the University of Bath and Technicolor. They have created a deep-learning AI system that can edit the facial expressions of actors to accurately match dubbed voices.

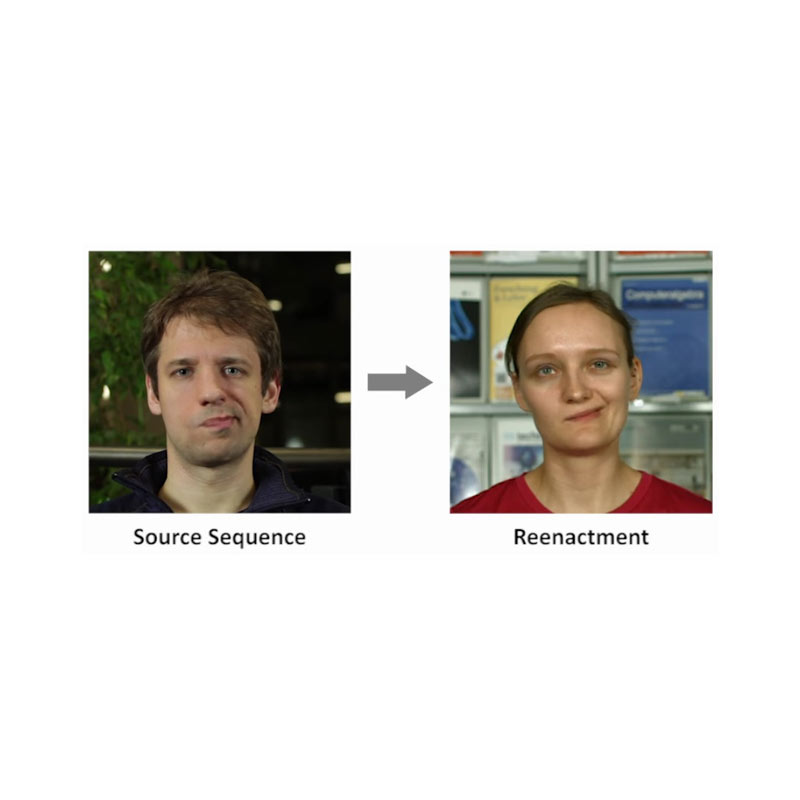

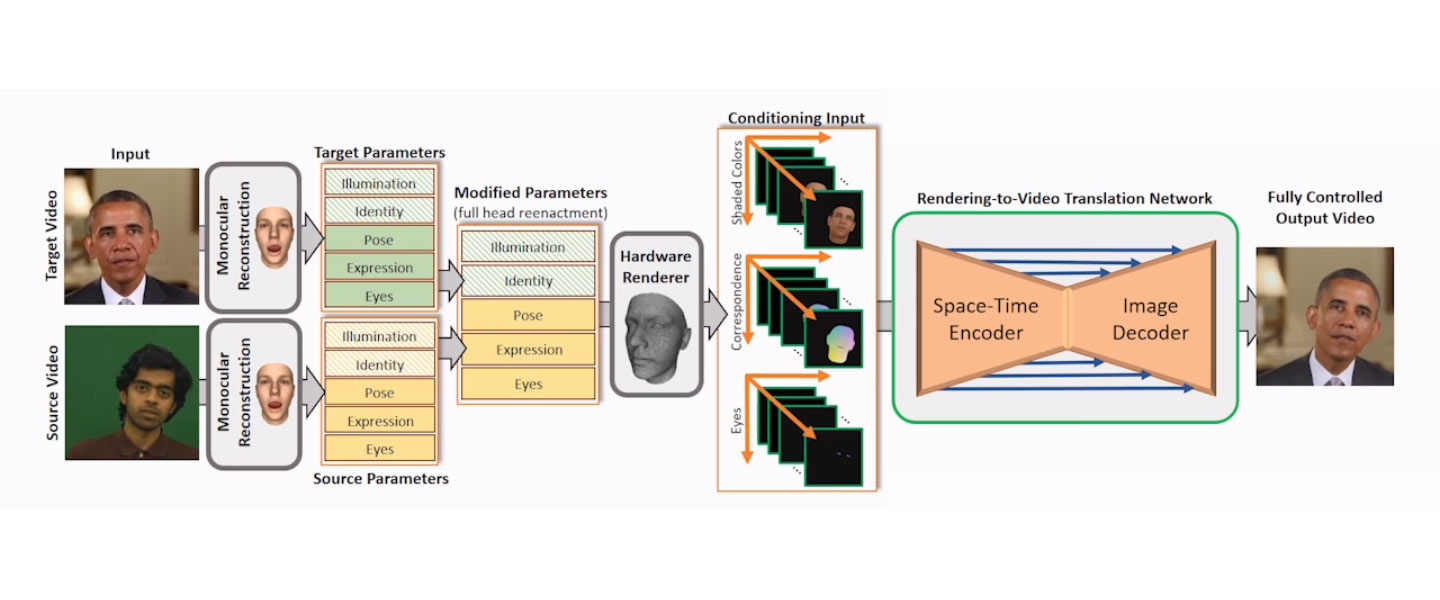

Called ‘Deep Video Portraits’, the technology can create eerily convincing fake videos: the AI transfers the full 3D head position and rotation, facial expressions, eye gaze, and eye and eyebrow movements from a source actor to a portrait video of a target actor.

The core of 'Deep Video Portraits' is a generative neural network with a novel space-time architecture.
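The article does not describe the space-time architecture in detail, so the following is only a sketch of what a "space-time" input to such a network might look like. It assumes (our reading, not the researchers' code) that the network sees a short window of consecutive synthetic renderings stacked along the channel axis, so it can take motion into account rather than treating each frame in isolation. The names `build_space_time_input`, `Frame` and `Channel` and the window length are hypothetical.

```python
from typing import List

# Hypothetical representation: a rendered frame is a list of channels,
# and each channel is a 2D grid (list of rows) of floats.
Channel = List[List[float]]
Frame = List[Channel]


def build_space_time_input(frames: List[Frame], t: int, window: int) -> List[Channel]:
    """Stack the renderings of frames t-window+1 .. t along the channel axis.

    Frames before the start of the clip are replaced by the first frame
    (edge padding), so every output has window * channels_per_frame channels.
    """
    if not 0 <= t < len(frames):
        raise IndexError("frame index out of range")
    stacked: List[Channel] = []
    for offset in range(window - 1, -1, -1):  # oldest frame first
        idx = max(t - offset, 0)  # clamp to the first frame of the clip
        stacked.extend(frames[idx])
    return stacked


if __name__ == "__main__":
    # Three tiny 2x2 "renderings", each with 3 channels filled with the frame index.
    clip = [[[[float(i)] * 2 for _ in range(2)] for _ in range(3)] for i in range(3)]
    x = build_space_time_input(clip, t=1, window=3)
    print(len(x))  # 9: three frames of three channels each
```

Stacking along channels is the simplest way to give a convolutional network temporal context; a real system could equally use 3D convolutions or recurrence, and we do not claim which the researchers chose.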

The network takes synthetic renderings of a face model as input and, from them, predicts photo-realistic video frames of a given target actor. The realism comes from adversarial training; as a result, the researchers were able to create modified target videos that mimic the behavior of the synthetically created input.
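To give a sense of what "adversarial training" means here, below is the standard conditional-GAN objective with an added L1 reconstruction term, the formulation popularized by the pix2pix image-translation work. We assume, though the article does not say so, that the training loss is of this general form. $T$ is the rendering-to-video network, $D$ a discriminator, $X$ the synthetic conditioning input, $Y$ the corresponding real video frame, and $\lambda$ a weighting constant.

```latex
\begin{aligned}
\mathcal{L}_{\mathrm{cGAN}}(T, D) &= \mathbb{E}_{X,Y}\big[\log D(X, Y)\big] + \mathbb{E}_{X}\big[\log\big(1 - D(X, T(X))\big)\big],\\
\mathcal{L}_{1}(T) &= \mathbb{E}_{X,Y}\big[\lVert Y - T(X)\rVert_{1}\big],\\
T^{*} &= \arg\min_{T}\,\max_{D}\;\mathcal{L}_{\mathrm{cGAN}}(T, D) + \lambda\,\mathcal{L}_{1}(T).
\end{aligned}
```

The discriminator tries to tell real frames from generated ones given the same synthetic input, which pushes the generator toward photo-realistic detail, while the L1 term keeps each generated frame close to the real one.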

To enable source-to-target video re-animation, the researchers rendered a synthetic target video using head animation parameters reconstructed from a source video, and fed it into the trained network.

As the researchers put it: "With the ability to freely recombine source and target parameters, we are able to demonstrate a large variety of video rewrite applications without explicitly modeling hair, body or background."
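The parameter recombination can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions, not the researchers' code: we assume each video frame has been reconstructed into face-model parameters that split into "who and where" (identity, skin reflectance, lighting) and "what the face is doing" (expression, head rotation and position, eye gaze). Reenactment then keeps the first group from the target and takes the second from the source; the resulting parameters would be rendered and fed to the trained network. `FaceParams`, `reenact` and `reenact_sequence` are hypothetical names.

```python
from dataclasses import dataclass, replace
from typing import List, Sequence, Tuple

Vec = Tuple[float, ...]


@dataclass(frozen=True)
class FaceParams:
    """Hypothetical per-frame parameters of a reconstructed face model."""
    identity: Vec      # face shape of the person
    reflectance: Vec   # skin albedo
    illumination: Vec  # scene lighting coefficients
    expression: Vec    # facial expression
    rotation: Vec      # head rotation
    translation: Vec   # head position
    gaze: Vec          # eye gaze direction


def reenact(source: FaceParams, target: FaceParams) -> FaceParams:
    """Keep the target's identity, reflectance and lighting; take the source's motion."""
    return replace(
        target,
        expression=source.expression,
        rotation=source.rotation,
        translation=source.translation,
        gaze=source.gaze,
    )


def reenact_sequence(
    source_frames: Sequence[FaceParams], target_frames: Sequence[FaceParams]
) -> List[FaceParams]:
    """Frame-by-frame reenactment; assumes the two clips are already time-aligned."""
    if len(source_frames) != len(target_frames):
        raise ValueError("source and target clips must have the same length")
    return [reenact(s, t) for s, t in zip(source_frames, target_frames)]
```

Because only face-model parameters are swapped, nothing in this step touches hair, body or background; in the approach described above, those are left for the trained network to synthesize from what it learned about the target video.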

"It works by using model-based 3-D face performance capture to record the detailed movements of the eyebrows, mouth, nose, and head position of the dubbing actor in a video," explained Hyeongwoo Kim, one of the researchers from the Max Planck Institute for Informatics. "It then transposes these movements onto the ‘target’ actor in the film to accurately sync the lips and facial movements with the new audio."

In their experiments, the researchers demonstrated full-head reenactment, interactive user-controlled editing and high-fidelity visual dubbing.


'Deep Video Portraits' is the title of a paper submitted for consideration at the SIGGRAPH 2018 conference in Vancouver, Canada.

The researchers suggest that one possible real-world application is in the movie industry, where the technology could automate tasks and make it easier and more affordable to manipulate footage to match dubbed foreign-language vocal tracks.

Dubbing would then play more seamlessly, a huge advantage over previous methods, where the actor's mouth movements and the dubbed voice often fail to line up, producing mismatched and sometimes comical results.

But, as with deepfakes, it is difficult to look at this technology and not see its potential for misuse. AI has already made it possible for even casual users to create fake videos, with terrifying implications.

'Deep Video Portraits' is an advance in AI technology that carries its own scary consequences.