WhatsApp is one of the most popular messaging apps on the market, and that popularity means many things can go wrong.

With more than a billion people using WhatsApp to communicate, the app is plagued by malicious actors spamming their contact lists.

Then there are other kinds of spammers, including bots, that keep cluttering the app with unwanted automated messages.

Memes are welcome, but fake news and hoaxes aren't. This is why WhatsApp is trying its best to eliminate unwanted messages before they mislead people.

The company has released a whitepaper stating that it removes more than 2 million spam accounts every month, and that 74 percent of those removals are the result of its machine learning algorithms.

But here is the thing: WhatsApp messages are protected by end-to-end encryption based on the Signal Protocol. In theory, this means nobody, including WhatsApp itself, can intercept and read messages in plain text.

So how can this machine learning technology mark spam users without even reading their messages?


WhatsApp software engineer Matt Jones explained that abusers use many techniques.

These include custom devices holding multiple SIM cards, as well as specially coded emulators that masquerade as real users, all to run multiple instances of WhatsApp.

With these techniques, people who want to spread false information can easily amplify their voice.

One example was a viral video in India alleging that the people in it were child kidnappers. It angered many people and led to multiple lynchings in the country.

WhatsApp has put some precautions in place, including limiting the number of chats a message can be forwarded to, and labeling forwarded messages so recipients know they were forwarded.

That is not necessarily enough. Bad actors keep getting smarter, and they have found many holes to exploit.

To catch these people without breaking its encryption or reading the contents of messages, WhatsApp relies on what it calls 'user actions'. These include registration metadata and the rate at which an account sends messages.

This way, the messaging app can understand how users behave when they abuse its system, without having to decrypt any messages.

Jones explained that the company uses three checkpoints to ban accounts:

- At registration: WhatsApp uses users' phone numbers as well as coordinates to verify them. Its machine learning system then analyzes basic information such as device details, IP address and carrier information to catch malicious accounts.

So if it finds a computer network trying to register accounts in bulk, or a phone number similar to one that was previously misused trying to register again, the system can ban it.

The company said that of the 2 million accounts it bans every month, 20 percent are caught at registration.
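To make the bulk-registration idea concrete, here is a minimal sketch of one such signal: counting how many accounts a single network registers within a sliding time window. It assumes that grouping IPv4 addresses by /24 network is a reasonable proxy for "a computer network", and the class name `RegistrationMonitor` and both thresholds are hypothetical. This is an illustration, not WhatsApp's actual system, which combines many more signals through machine learning.

```python
import ipaddress
from collections import defaultdict, deque

# Hypothetical thresholds for illustration only; WhatsApp has not published its values.
WINDOW_SECONDS = 3600.0
MAX_REGISTRATIONS_PER_SUBNET = 20


class RegistrationMonitor:
    """Flags IPv4 networks that register many accounts in a short time."""

    def __init__(self, window=WINDOW_SECONDS, limit=MAX_REGISTRATIONS_PER_SUBNET):
        self.window = window
        self.limit = limit
        # Maps each /24 network to the timestamps of its recent registrations.
        self.history = defaultdict(deque)

    def register(self, ip: str, timestamp: float) -> bool:
        """Record one registration; return True if its network looks like a bulk registrar.

        Assumes timestamps arrive in non-decreasing order.
        """
        # Group addresses by /24 network (an assumption; real systems use richer signals).
        subnet = ipaddress.ip_network(f"{ip}/24", strict=False)
        times = self.history[subnet]
        times.append(timestamp)
        # Forget registrations older than the sliding window.
        while timestamp - times[0] > self.window:
            times.popleft()
        return len(times) > self.limit
```

Note that the monitor only sees metadata (an IP address and a time), which is the point: no message content is needed to spot a network churning out accounts.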

- During messaging: The app also considers signals such as whether an account shows a 'typing…' indicator, or whether it sends 100 messages in 10 seconds within five minutes of registering.

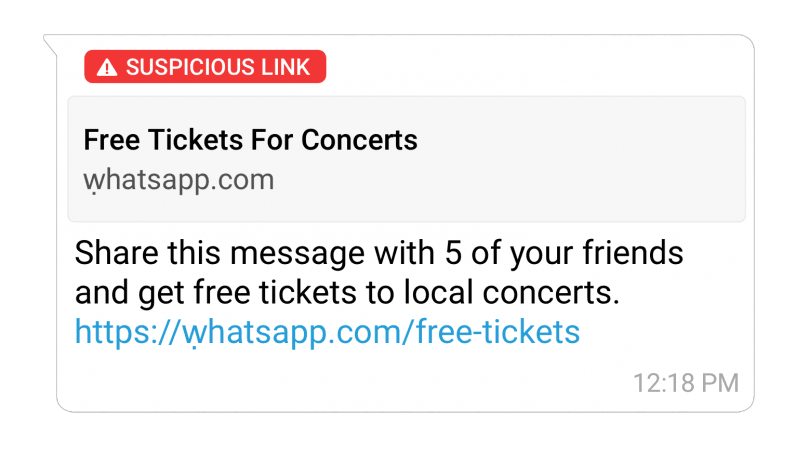

Additionally, if a spam account sends malicious links, WhatsApp can flag it as suspicious.
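The "100 messages in 10 seconds within five minutes of registering" example can be expressed as a simple sliding-window check over send timestamps. The sketch below takes its numbers from that example; the function name `is_suspicious_burst` is hypothetical, and a production system would learn such thresholds rather than hard-code them. Signals like the 'typing…' indicator are not modeled here.

```python
from collections import deque

# Numbers from the example above; a production system would learn such thresholds.
BURST_COUNT = 100         # messages
BURST_WINDOW = 10.0       # seconds
NEW_ACCOUNT_AGE = 300.0   # five minutes after registration, in seconds


def is_suspicious_burst(registered_at, send_times,
                        burst_count=BURST_COUNT,
                        burst_window=BURST_WINDOW,
                        new_account_age=NEW_ACCOUNT_AGE):
    """Return True if a new account sent burst_count messages within burst_window
    seconds at some point during its first new_account_age seconds.

    Only timestamps are used, never message contents.
    """
    window = deque()
    for t in sorted(send_times):
        if t - registered_at > new_account_age:
            break  # later messages no longer count as "right after registering"
        window.append(t)
        # Keep only messages sent within burst_window seconds of the newest one.
        while t - window[0] > burst_window:
            window.popleft()
        if len(window) >= burst_count:
            return True
    return False
```

An account that sends 100 messages in five seconds right after signing up trips the check; one that sends a message per second, or bursts only after the first five minutes, does not.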

- User reporting: WhatsApp can also remove spammers and abusers when they’re reported by others.

However, it also makes sure that a group of users is not targeting an individual through mass reporting. To do that, the company's algorithms check whether the phone numbers reporting a specific user have ever interacted with that user.
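The interaction check described above can be sketched as a filter that keeps only reports from numbers that have previously exchanged messages with the reported account. The function names, the representation of interactions as a set of phone-number pairs, and the `min_reports` threshold are all hypothetical choices for this illustration, not WhatsApp's published design.

```python
def credible_reporters(reported, reporters, interactions):
    """Return the reporters who have previously interacted with the reported number.

    interactions is a set of frozensets, each holding two phone numbers that have
    exchanged messages (a hypothetical representation for this sketch).
    """
    return {r for r in reporters if frozenset((r, reported)) in interactions}


def should_review(reported, reporters, interactions, min_reports=3):
    """Queue an account for review only if enough credible reporters flagged it.

    min_reports is a hypothetical threshold. Duplicate reports from the same
    number count once, because credible_reporters returns a set.
    """
    return len(credible_reporters(reported, reporters, interactions)) >= min_reports
```

With this design, a coordinated group of strangers can file as many reports as it likes without pushing the target over the threshold, since none of their reports count.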

Here, WhatsApp uses Facebook's Immune System, which performs real-time checks on every read and write action to identify abusive behavior and train its machine learning systems.

Apart from these three methods, WhatsApp has also applied its forwarding limit globally, capping each forward at a maximum of five chats, to slow the spread of spam by humans.
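As a rough model of how a five-chat forwarding limit and the "forwarded" label fit together, the sketch below rejects forwards to more than five distinct chats and marks every copy as forwarded. The function `forward`, the `ForwardLimitError` exception and the dictionary message format are hypothetical; in WhatsApp this logic lives inside the app itself.

```python
MAX_FORWARD_CHATS = 5  # the global limit described above


class ForwardLimitError(ValueError):
    """Raised when a forward targets more chats than the limit allows."""


def forward(text, chat_ids, max_chats=MAX_FORWARD_CHATS):
    """Return one labeled copy of text per target chat, enforcing the forward limit."""
    targets = list(dict.fromkeys(chat_ids))  # drop duplicate chats, keep order
    if len(targets) > max_chats:
        raise ForwardLimitError(
            f"cannot forward to {len(targets)} chats; the limit is {max_chats}")
    # Each copy carries a 'forwarded' flag so recipients know the message was forwarded.
    return [{"chat": chat, "text": text, "forwarded": True} for chat in targets]
```

The limit does not stop a determined user from forwarding again, but each extra round takes manual effort, which is what slows viral spread.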

The company is also making sure that its algorithms can catch people running WhatsApp from modified APK files (app installation files for Android devices).

While these methods can certainly help WhatsApp police its platform and keep spammers and abusers from running rampant, they are still not perfect.

Again, the reason is end-to-end encryption. It severely restricts WhatsApp's ability to analyze users' messages, for example to look for certain keywords.

Malicious actors can constantly create new groups around the same topic, and repeatedly add the same users to them. This can render the option to report and leave a group less effective.

Those people can also act "more human" by not spamming users immediately after signing up, for example. This too can fool the algorithms.

Still, the move is welcome. As WhatsApp continues its reign as one of the most popular messaging apps, it needs to take spam more seriously than ever.