Humans do things well because we have learned how to do them. That learning draws on past experience, knowledge, and reasoning, and it is the reasoning that explains why we do things the way we do.

AI can do the same thing. It learns from its mistakes to gain experience and, over time, learns how to do things based on what it knows. What it does not show is its reasoning. This so-called black box leaves us unaware of what goes on inside a machine learning model.

The Intelligence and Decision Technologies Group at MIT's Lincoln Laboratory wants to expose that reasoning, and has unveiled a neural network built to show it. The network is MIT's approach to the black box problem, and a tool for combating biased AIs.

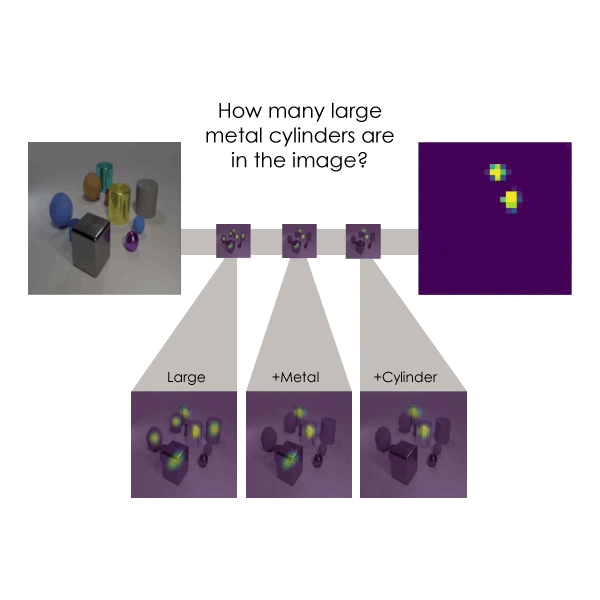

Dubbed the Transparency by Design Network (TbD-net), MIT's AI is a neural network designed to answer complex questions about images. It does this by parsing each query and breaking it down into subtasks, which are then handled by different modules.
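To make the idea of breaking a query into subtasks concrete, here is a minimal sketch in Python. It is not MIT's parser: the keyword lists, the module names such as `attend[size=large]`, and the function `question_to_program` are all hypothetical, and simple keyword matching stands in for whatever the real system uses to read a question.

```python
# Hypothetical vocabulary: these word lists and module names are illustrative only.
SIZES = {"large", "small"}
COLORS = {"red", "blue", "green"}
SHAPES = {"square", "circle", "triangle"}


def question_to_program(question: str) -> list:
    """Turn a simple question into an ordered list of (made-up) module names."""
    words = question.lower().strip("?").split()
    program = []
    # Each descriptive word becomes one "attend" subtask, in the order it appears.
    for word in words:
        if word in SIZES:
            program.append(f"attend[size={word}]")
        elif word in COLORS:
            program.append(f"attend[color={word}]")
        elif word in SHAPES:
            program.append(f"attend[shape={word}]")
    # A "What color is ..." question ends with a subtask that reads off the color.
    if words[:3] == ["what", "color", "is"]:
        program.append("query[color]")
    return program


program = question_to_program("What color is the large square?")
print(program)
```

For the question about the large square, the sketch produces three subtasks: one for "large", one for "square", and a final one that reads off the color.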

"Understanding how a neural network comes to its decisions has been a long-standing challenge for artificial intelligence (AI) researchers," explained MIT.

"As the neural part of their name suggests, neural networks are brain-inspired AI systems intended to replicate the way that humans learn. They consist of input and output layers, and layers in between that transform the input into the correct output. Some deep neural networks have grown so complex that it's practically impossible to follow this transformation process. That's why they are referred to as 'black box' systems, with their exact goings-on inside opaque even to the engineers who build them."

The problem arises because neural networks lack an effective mechanism for letting humans follow their reasoning process.

"Progress on improving performance in visual reasoning has come at the cost of interpretability," said Ryan Soklaski, who built TbD-net with fellow researchers Arjun Majumdar, David Mascharka, and Philip Tran.

TbD-net uses a collection of modules, which are small neural networks specialized to perform specific subtasks.

"A child is presented with a picture of various shapes and is asked to find the big red circle. To come to the answer, she goes through a few steps of reasoning: First, find all the big things; next, find the big things that are red; and finally, pick out the big red thing that’s a circle," MIT wrote in a post on its website.

We learn to interpret the world through reasoning and, according to MIT, "so, too, do neural networks."

TbD-net visually renders its thought process as it solves problems, which allows human analysts to interpret its decision-making.

For example, if the AI is asked to determine the color of “the large square” in a picture showing several shapes of varying size and color, the network starts by breaking the question down into subtasks and assigning an appropriate module to each one.

In this case, it starts with a module that looks for "large" objects and displays a heatmap indicating which objects the AI believes to be large. A second module then determines which of those large objects are squares. Finally, a third module determines the large square's color.

The AI outputs its answer alongside a visual representation of the process by which it came to that conclusion.
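The "large square" walkthrough can be sketched as a chain of small functions that each narrow an attention mask. This is a toy illustration under stated assumptions, not TbD-net's implementation: the scene is a hand-written list of objects rather than an image, the masks are lists of 0.0 and 1.0 values standing in for heatmaps, and the names `SCENE`, `attend`, `query`, and `answer_large_square_color` are hypothetical.

```python
# Toy scene: each object is described by a dict. A real model works on image
# features and produces heatmaps over pixels, not a list of labelled objects.
SCENE = [
    {"size": "large", "shape": "square", "color": "blue"},
    {"size": "large", "shape": "circle", "color": "red"},
    {"size": "small", "shape": "square", "color": "green"},
]


def attend(scene, mask, attribute, value):
    """Keep attention only on already-attended objects whose attribute matches."""
    return [m * (1.0 if obj[attribute] == value else 0.0)
            for obj, m in zip(scene, mask)]


def query(scene, mask, attribute):
    """Read the requested attribute of the most strongly attended object."""
    best = max(range(len(scene)), key=lambda i: mask[i])
    return scene[best][attribute]


def answer_large_square_color(scene):
    """Run the three steps in order and record the mask after each one."""
    trace = []
    mask = [1.0] * len(scene)  # start by attending to everything
    mask = attend(scene, mask, "size", "large")
    trace.append(("large", mask))
    mask = attend(scene, mask, "shape", "square")
    trace.append(("square", mask))
    return query(scene, mask, "color"), trace


answer, trace = answer_large_square_color(SCENE)
for step, mask in trace:
    print(step, mask)  # the intermediate "heatmap" after each module
print("answer:", answer)
```

The recorded trace is the point of the exercise: each intermediate mask can be inspected on its own, so a wrong answer can be traced back to the step that went astray.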

The researchers evaluated the model using a visual question-answering dataset consisting of 70,000 training images and 700,000 questions, with test and validation sets of 15,000 images and 150,000 questions. The initial model achieved 98.7 percent test accuracy on the dataset.

Because the network works transparently, the researchers could interpret its results, see where its assumptions were wrong, and teach it to correct them, refining the model. The end result was 99.1 percent accuracy.
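A quick back-of-the-envelope calculation, using only the figures quoted in this article, shows what those numbers imply. The variable names below are illustrative, and the "relative reduction" is simply the drop in error rate divided by the original error rate, not a metric reported by the researchers.

```python
def error_rate(accuracy_percent: float) -> float:
    """Error rate in percent, given accuracy in percent."""
    return 100.0 - accuracy_percent


initial_accuracy = 98.7   # initial model, from the article
refined_accuracy = 99.1   # refined model, from the article

initial_error = error_rate(initial_accuracy)
refined_error = error_rate(refined_accuracy)

# Share of the original errors that the refinement removed.
relative_reduction = (initial_error - refined_error) / initial_error * 100.0

# Training set size from the article: 700,000 questions over 70,000 images.
questions_per_image = 700_000 / 70_000

print(f"error rate: {initial_error:.1f}% -> {refined_error:.1f}%")
print(f"relative reduction in errors: {relative_reduction:.1f}%")
print(f"training questions per image: {questions_per_image:.0f}")
```

Seen this way, a gain of 0.4 percentage points corresponds to roughly 31 percent fewer wrong answers, since the error rate falls from about 1.3 percent to about 0.9 percent.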

"Our model provides straightforward, interpretable outputs at every stage of the visual reasoning process," said Mascharka.

"Breaking a complex chain of reasoning into a series of smaller subproblems, each of which can be solved independently and composed, is a powerful and intuitive means for reasoning," said Majumdar.

According to the researchers, the results surpass previous best-performing visual reasoning models.

This strategy should help solve the black box problem by giving valuable insight into how deep learning algorithms work. That, in turn, can help humans understand a machine's decisions when it is deployed on complex real-world tasks, particularly where human lives are at stake. A self-driving car, for example, may end up in a situation where it hits pedestrians in order to save its occupants.

An AI shouldn't be trusted unless it can explain how it arrives at its conclusions. MIT is trying to solve that by exposing the reasoning process, so that humans can understand why and how a model makes a right or wrong decision.
