To make AI know better about the real world, it needs to understand it better.

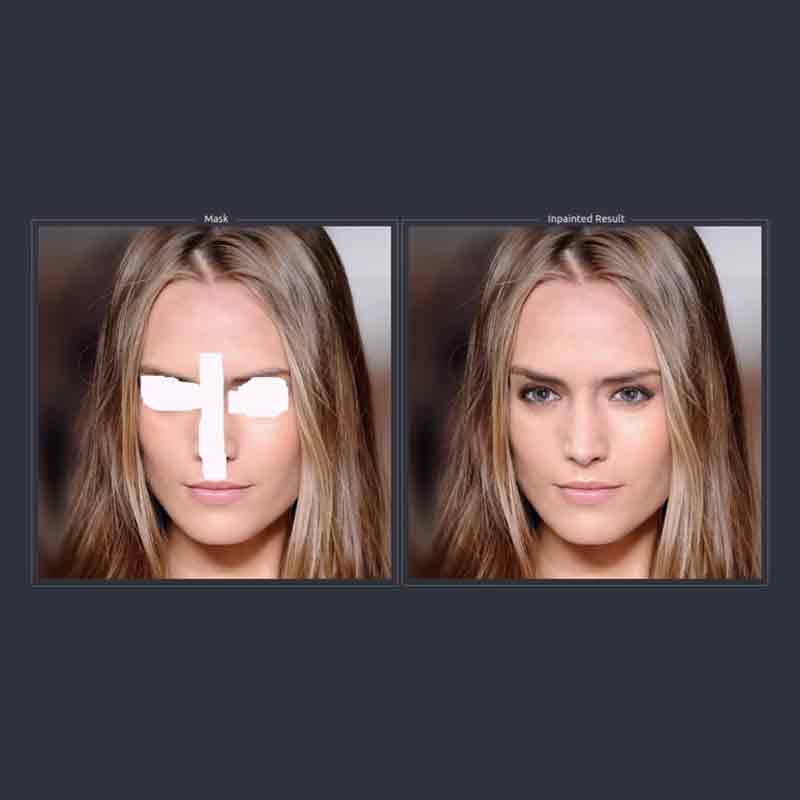

Previous deep learning AI have focused on rectangular regions located around the center of the image. But Nvidia revealed that its AI was capable in creating parts of an image that has been deleted or modified.

According to the researchers, this is the first time that a neural network can process irregular shaped holes on images.

The word here is "image inpainting".

Here, it can reconstruct images that missed some pixels, or have some parts removed, and replace them with computer-generated alternatives.

The goal of the AI is to create a model of an image so it can work to fill the irregular gap by recreating it.

The AI is capable in producing semantically meaningful predictions that incorporate smoothly with the rest of the image, without the need for any additional post-processing or blending operation.

Nvidia's researchers explained the difference between this method of inpainting images with deep learning on their published paper.

"Our model can robustly handle holes of any shape, size location, or distance from the image borders," said Nvidia's researchers on their research paper.

As seen on the video above, Nvidia's AI was capable in recreating an image, without suffering the same problem as other filling techniques. The recreated image has no granular degradation, or blurred edges which require further editing with different brushes and levels of smoothness or opacity.

Here, the AI can recreate what a seasoned graphic designers minutes or even hours to accomplish, almost instantly.

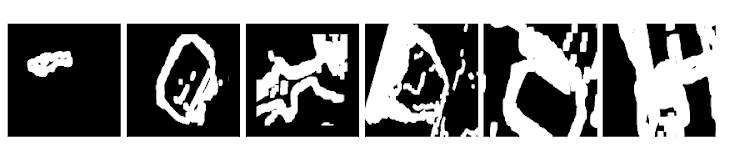

The AI uses deep neural network to create masks and partial convolutional predictions. This in essence, is recreating an invisible layer where the AI can manipulate until it 'feels' as through the image is complete.

The training involves the team in generating 55,116 masks of random streaks and holes of arbitrary shapes and sizes. They also generated nearly 25,000 for testing. These were further categorized into six categories based on sizes relative to the input image, in order to improve reconstruction accuracy.

Then the team used Nvidia's Tesla V100 GPUs and cuDNN-accelerated PyTorch deep learning framework to train the neural network by applying the generated masks to images from the ImageNet, Places2 and CelebA-HQ datasets.

During the training phase, the holes or the missing parts were introduced into complete training images from the above datasets. This enabled the network to learn to reconstruct the missing pixels.