Computers can easily alter images. They can also create realistic images. But they have difficulties when doing both.

Researchers from Microsoft and the University of Science and Technology of China came up with a model for image generation AI that is based on a variational autoencoder generative adversarial network. This makes the AI capable in synthesizing natural images in what are known as fine-grained categories.

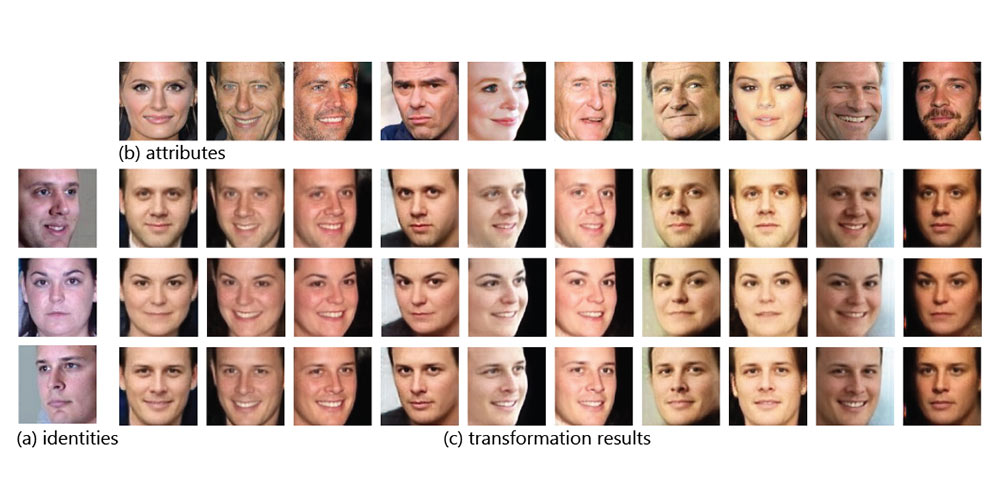

What this means, the AI can create natural identity-preserving images that are all synthesized.

Microsoft gave an example of a photographer's common objective: taking a group photo that includes dozens of subjects where all the people inside it are captured with their eyes open and even smiling. But most of the time, one shot is never enough. Let's say there is one person seen squinting and one is frowning.

Usually, AI can't fix that, at least easily because it has no understanding of how those people look. But with the technology, the photo can be totally transformed into a completely convincing version.

The challenge here, is to build an effective generative models of natural images that grapple with a key problem in computer vision.

The researchers' goal was to generate very diverse and yet realistic images by giving a computer only a finite number of latent parameters as related to the natural distribution of any image in the world.

To solve this, they came up with a generative model to capture that data. The researchers opted for an approach using generative adversarial networks combined with a variational auto-encoder to come up with their learning framework.

“What we have here is technology that can synthesize faces while preserving the identity of the faces we generate, in a controllable fashion. With our technology, the great thing is that I could literally render a smiling face for each of the participants in the shot,” explains Gang Hua, principal researcher at Microsoft Research in Redmond, Washington.

The model approaches a given image as a composition of label and latent attributes in a probabilistic model.

Then, by varying the fine-grained category label (for example, names of specific celebrities) that would be fed into the generative model, the researchers were able to synthesize images in specific categories only by using random values that are based with the latent attributes.

According to Gang Hua, the principal researcher at Microsoft, the team's approach uses two aspects.

"First, we adopted a cross-entropy loss for the discriminative and classifier network but opted for a mean discrepancy objective for the generative network." This produces asymmetric loss during the training. However, according to Hua, this loss "actually makes the training of the GANs more stable."

"We designed an asymmetric loss to address the instability issue in training of vanilla GANs that specifically addresses numerical difficulties when matching two non-overlapping distributions.

The second approach was adopting an encoder that enables the computer to learn the relationship between the latent space and use pairwise feature to match and retain the structure of the synthesized images.

These methods include using one input images of a subject that would product an identity vector. Combining that with other input face image (not the same person), the AI can extract more attribute vectors to correlate pose, emotion or lightning. These new identity vectors are then combined to synthesize a new fact for the subject.

"Our technology addressed a fundamental challenge in image generation, that of the controllability of identity factors. This allows us to generate images as we want them to look," said Hua.

As for the benefits, Hua sees the technology as an aid that can benefit image recognition, video understanding and arts.