When we humans listen to others speaking without seeing them, we often build a mental picture of what the person might look like.

Each person's voice is unique because voice is a product of "the mechanics of speech production". These include age, gender, the shape of the mouth, facial bone structure, and thin or full lips, all of which can affect the sound we produce.

In addition, the way we sound is shaped by the language we speak, our accent, how fast we speak, and how we pronounce words.

Artificial Intelligence (AI) is a field under intense research and development.

While researchers around the world have built AIs for many different purposes, researchers from the Massachusetts Institute of Technology (MIT) pulled off a major feat by creating an AI capable of predicting and reconstructing what a person looks like, based on his or her voice.

The AI is called 'Speech2Face'. It analyzes a short audio clip of a subject and then reconstructs what the person might look like in real life.

While the AI is far from perfect, it clearly shows how terrifyingly sophisticated AIs can be when learning from even a tiny snippet of data.

In a paper published on arXiv, the team at MIT wrote:

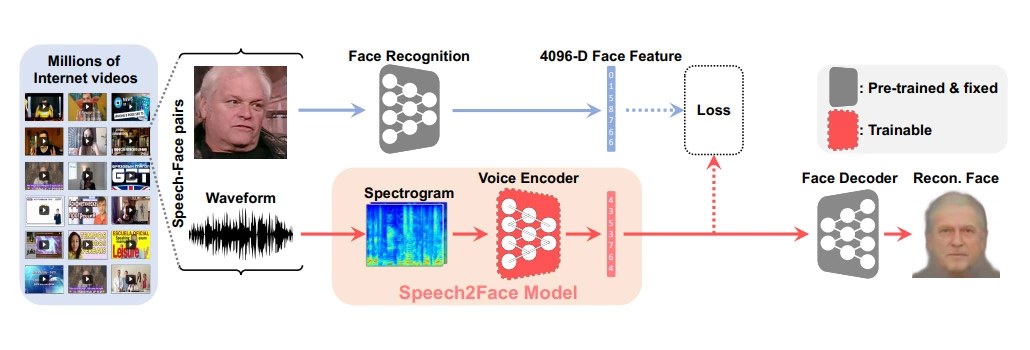

"During training, our model learns voice-face correlations that allow it to produce images that capture various physical attributes of the speakers such as age, gender and ethnicity. This is done in a self-supervised manner, by utilizing the natural co-occurrence of faces and speech in Internet videos, without the need to model attributes explicitly."

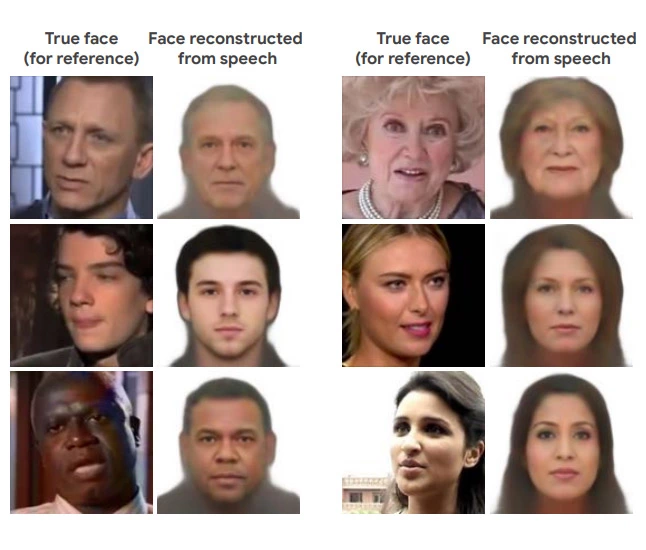

"We evaluate and numerically quantify how--and in what manner--our Speech2Face reconstructions, obtained directly from audio, resemble the true face images of the speakers."

The results are astonishing.

The AI uses a generative adversarial network (GAN) to "match several biometric characteristics of the speaker," resulting in "matching accuracies that are much better than chance."
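The article does not explain how such a matching test works, so here is a minimal sketch of what "better than chance" means, assuming an N-way test: a face embedding reconstructed from voice is compared by cosine similarity against the embeddings of N candidate people, and the test succeeds if the true person scores highest. Picking at random would succeed 1/N of the time. The embeddings are random numbers, the "reconstruction" is simply the true embedding plus noise, and the names `n_way_match` and `matching_accuracy` are hypothetical; this is not the MIT team's evaluation code.

```python
import math
import random


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def n_way_match(predicted, candidates, true_index):
    """Return True if the predicted embedding is closest to the true candidate."""
    scores = [cosine(predicted, c) for c in candidates]
    best = max(range(len(candidates)), key=lambda i: scores[i])
    return best == true_index


def matching_accuracy(trials, n_way, dim=16, noise=2.0, seed=0):
    """Fraction of simulated N-way tests won by a noisy 'reconstruction'."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        # Hypothetical face embeddings for n_way different people.
        faces = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(n_way)]
        true_index = rng.randrange(n_way)
        # Hypothetical voice-based reconstruction: the true face plus noise.
        predicted = [x + rng.gauss(0, noise) for x in faces[true_index]]
        hits += n_way_match(predicted, faces, true_index)
    return hits / trials


n_way = 10
chance = 1 / n_way  # accuracy of guessing at random
acc = matching_accuracy(trials=1000, n_way=n_way)
print(f"chance: {chance:.2f}, simulated accuracy: {acc:.2f}")
```

Even a rough reconstruction, one that shares only part of its signal with the true face, wins the 10-way test far more often than the 10% a random guess would.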

Because AIs can be manipulated and misused, the MIT researchers include a caution on the project's GitHub page, acknowledging that the technology raises concerning questions about privacy and discrimination.

"Although this is a purely academic investigation, we feel that it is important to explicitly discuss in the paper a set of ethical considerations due to the potential sensitivity of facial information," they wrote, adding that "any further investigation or practical use of this technology will be carefully tested to ensure that the training data is representative of the intended user population."

This project by MIT isn’t the first of its kind.

Previously, researchers at Carnegie Mellon University published a paper detailing an AI with a similar algorithm, which they presented at the World Economic Forum in 2018.

Their AI is also capable of recreating a speaker’s physical characteristics based on nothing but voice recordings.