The internet as we know it is full of people. But not all of the billions of visitors connected at any given moment are human. In fact, humans account for less than 50 percent of all internet traffic. The rest comes from bots. Bot traffic has eclipsed human traffic for the first time.

Bots are software applications built to perform automated tasks. They can be valuable, as with search engines crawling and indexing websites, but they can also be malicious, like those used by hackers and spammers.

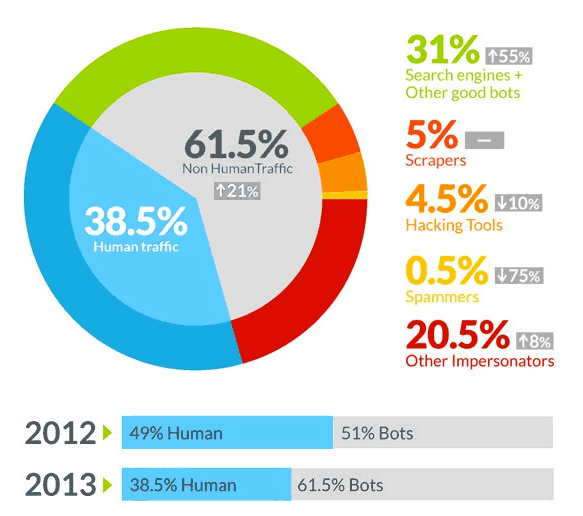

Incapsula, a cloud-based web security service, observed 1.45 billion visits over a 90-day period to 20,000 sites spanning the world's 249 countries. Its report concluded that 61.5 percent of all website traffic in 2013 came from these non-human visitors, a 21 percent growth in total bot traffic since 2012.

Most of that growth comes from "good bots," like those from analytics companies that provide data about websites' performance, and search engine spiders that crawl the web. Legitimate bot activity has jumped 55 percent, which the report suggests is largely the result of new online services and increased crawling by search engines seeking the timeliest results. Those search engines and other good bots account for 31 percent of web traffic.

These programs, like other technologies, have helped people automate tasks. But running such a script is highly unethical when it is built to do things it is not supposed to do, that is, to be malicious.

These malicious bots, or "bad bots," account for the other 30.5 percent of web traffic. They include bots meant to steal content and email addresses, hacking and spamming tools, and other human impersonators, all wandering across the web as small pieces of code.

Malicious bot traffic as a percentage of overall traffic hasn't changed, but the specific kinds of bot activity have shifted. Spam bots have decreased from two percent of traffic in 2012 to 0.5 percent. But activity by more sophisticated impersonator bots, which are proficient enough to be hostile and create malware, increased by about 8 percent. These bots are custom-made, created to carry out a specific activity, such as launching a DDoS attack or stealing company secrets.

More Visitors, More Problems

The internet is a vast connected network. Websites are meant to be seen both by humans and by the search engine bots that index and rank them. But the massive increase in non-human traffic has hurt their overall performance.

Small websites, those with fewer than 100,000 monthly visitors, tend to have even more wandering bots. About 80 percent of traffic to these websites comes from automated bot tasks. Because these sites are mostly hosted on shared servers, page load times can increase by up to 50 percent, driving away potential human visitors and hurting SEO. And since one shared server can host hundreds of small websites, the problem compounds.

These extra visits put website operators and hosting providers under more strain. They have to maintain a more active website, thinking that the traffic is mostly human, and buy more computing power (servers) to handle the extra burden.

Webmasters who use analytics, mostly Google Analytics, can see that bots do impersonate humans, because they can be difficult to filter. The most common way to tell a human visitor from a bot is to look at its behavior. Although bots can come through different service providers, they are more likely to have close to 100 percent new visits, a 100 percent bounce rate, no average visit duration, and one page per visit.
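The behavioral pattern above can be sketched as a simple check over one segment of traffic, for example all visits from a single service provider. This is a minimal illustration in Python, not how Google Analytics itself classifies visits: the `Session` record, the function names, and the thresholds (95 percent, one second, 1.1 pages) are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Session:
    """One visit, as exported from an analytics tool (hypothetical shape)."""
    is_new_visit: bool
    pages_viewed: int
    duration_seconds: float


def segment_metrics(sessions: List[Session]) -> Dict[str, float]:
    """Compute the four signals mentioned above for a group of visits."""
    if not sessions:
        raise ValueError("need at least one session")
    total = len(sessions)
    return {
        "new_visit_rate": sum(s.is_new_visit for s in sessions) / total,
        # A bounce is counted here as a visit that viewed exactly one page.
        "bounce_rate": sum(s.pages_viewed == 1 for s in sessions) / total,
        "avg_duration": sum(s.duration_seconds for s in sessions) / total,
        "pages_per_visit": sum(s.pages_viewed for s in sessions) / total,
    }


def looks_automated(
    sessions: List[Session],
    rate_threshold: float = 0.95,       # assumed cutoff for "close to 100 percent"
    max_avg_duration: float = 1.0,      # assumed cutoff for "no visit duration"
    max_pages_per_visit: float = 1.1,   # assumed cutoff for "one page per visit"
) -> bool:
    """Return True when the segment matches the bot-like pattern."""
    m = segment_metrics(sessions)
    return (
        m["new_visit_rate"] >= rate_threshold
        and m["bounce_rate"] >= rate_threshold
        and m["avg_duration"] <= max_avg_duration
        and m["pages_per_visit"] <= max_pages_per_visit
    )


suspect = [Session(True, 1, 0.0) for _ in range(50)]
readers = [Session(True, 3, 120.0), Session(False, 1, 15.0), Session(False, 5, 300.0)]
print(looks_automated(suspect))  # True
print(looks_automated(readers))  # False
```

A check like this only flags a segment for closer inspection. A few real visitors who bounce immediately will look the same, so it works better on groups of visits than on single ones.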

Webmasters can filter out those service providers to get cleaner data about human visitors. However, the bot traffic still counts toward the total number of visits, which causes the data to be heavily sampled. With Google Analytics, webmasters can get rid of these unwanted guests in their reports once and for all by wrapping the tracking code in a function that checks whether the visitor is a human or a bot.
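The wrapping described here happens around the tracking code in the page itself. As a rough illustration of the same idea, the sketch below does the check on the server in Python instead, which is a different technique from the one described above: the page only receives the tracking snippet when the visitor's user agent does not look like a bot. The marker list, the function names, and the placeholder snippet are assumptions for the example, not part of Google Analytics.

```python
# Substrings that commonly appear in self-identified bot user agents.
# Illustrative and far from exhaustive (an assumption for this sketch).
BOT_MARKERS = ("bot", "crawler", "spider")

# Placeholder for whatever tracking code your analytics service gives you.
TRACKING_SNIPPET = "<!-- analytics tracking snippet goes here -->"


def is_probable_bot(user_agent: str) -> bool:
    """Guess from the user agent string alone; an empty value counts as a bot."""
    agent = user_agent.strip().lower()
    if not agent:
        return True
    return any(marker in agent for marker in BOT_MARKERS)


def tracking_markup(user_agent: str) -> str:
    """Return the tracking snippet for probable humans, nothing for probable bots."""
    return "" if is_probable_bot(user_agent) else TRACKING_SNIPPET


print(is_probable_bot("Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"))  # True
print(is_probable_bot("Mozilla/5.0 (Windows NT 10.0; Win64; x64)"))  # False
```

This only catches bots that identify themselves. An impersonator bot that sends a browser-like user agent passes straight through, so a check like this cleans up reports but is not a defense.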

Modifying the Google Analytics tracking code, or any other analytics tracking code, doesn't stop "unwanted" bots from visiting a website. Modifying the robots.txt file doesn't stop bad bots either, because these programs typically ignore it. To block a bot from accessing a website, you need to know the IP address it is coming from (four numbers separated by dots, for example "127.0.0.1") or the user agent string it is using (Google's search engine bot, for example, uses "Googlebot"). The easiest way to find these is to look in your web server log.
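Reading a busy log by eye gets tedious, so a short script can do the counting. The sketch below assumes the log uses the common "combined" access log format, where the IP address comes first and the user agent is the last quoted field. The sample lines, the `ExampleScraper/1.0` agent, and the function name are made up for illustration.

```python
import re
from collections import Counter
from typing import Iterable, List, Tuple

# Combined log format: ip ident user [time] "request" status size "referer" "agent"
LOG_PATTERN = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "[^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def top_clients(lines: Iterable[str], limit: int = 10) -> List[Tuple[Tuple[str, str], int]]:
    """Count requests per (IP address, user agent) pair, busiest first."""
    counts: Counter = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match:  # lines in another format are skipped silently
            counts[(match.group("ip"), match.group("agent"))] += 1
    return counts.most_common(limit)


# Hypothetical log lines using reserved documentation IP ranges.
sample_log = [
    '203.0.113.5 - - [10/Dec/2013:13:55:36 +0000] "GET / HTTP/1.1" 200 2326 "-" "ExampleScraper/1.0"',
    '203.0.113.5 - - [10/Dec/2013:13:55:37 +0000] "GET /about HTTP/1.1" 200 1810 "-" "ExampleScraper/1.0"',
    '198.51.100.7 - - [10/Dec/2013:13:56:02 +0000] "GET / HTTP/1.1" 200 2326 "-" "Mozilla/5.0"',
]

for (ip, agent), hits in top_clients(sample_log):
    print(hits, ip, agent)
```

With a real log you would pass the open file instead of `sample_log`. The pairs at the top of the list are the first candidates to investigate, not an automatic block list.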

After obtaining the IP address and the user agent string, you can edit or create your site's .htaccess file and give the server a rule to block that specific bot when it encounters it in the future. You can block a bot by its IP address, and you can also block it by its user agent string.
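To give a feel for what such rules look like, the sketch below generates them from a list of IP addresses and user agent tokens. It assumes an Apache 2.4 server with `mod_authz_core` and `mod_setenvif` available and allowed in .htaccess; older Apache versions use a different syntax. The function name, the `bad_bot` variable name, and the sample values are illustrative.

```python
import ipaddress
import re
from typing import Iterable

# Only plain tokens are accepted, so nothing needs escaping in the Apache pattern.
SAFE_TOKEN = re.compile(r"[A-Za-z0-9_-]+")


def build_htaccess_rules(ips: Iterable[str], agent_tokens: Iterable[str]) -> str:
    """Build Apache 2.4 rules that deny the given IPs and user agent tokens."""
    lines = []
    has_agent_rule = False
    for token in agent_tokens:
        if not SAFE_TOKEN.fullmatch(token):
            raise ValueError(f"unsupported user agent token: {token!r}")
        # mod_setenvif: mark requests whose User-Agent contains the token.
        lines.append(f'SetEnvIfNoCase User-Agent "{token}" bad_bot')
        has_agent_rule = True
    lines.append("<RequireAll>")
    lines.append("    Require all granted")
    for ip in ips:
        # ip_address() raises ValueError for anything that is not a valid IP.
        lines.append(f"    Require not ip {ipaddress.ip_address(ip)}")
    if has_agent_rule:
        lines.append("    Require not env bad_bot")
    lines.append("</RequireAll>")
    return "\n".join(lines) + "\n"


# Hypothetical bot: a documentation-range IP and a made-up agent name.
print(build_htaccess_rules(["203.0.113.5"], ["ExampleScraper"]))
```

Generating the rules from validated input keeps a typo in an IP address from ending up in the live file. It is still worth testing the output on a staging copy of the site before relying on it.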

Adding rules for specific bots to .htaccess can decrease the excess load on your server and give you better analytics data about human visitors. But blocking an IP address that a bad bot once used does not guarantee that the bot will not return. Some viruses and malware infect ordinary users' computers and turn them into machines that send spam and probe sites for vulnerabilities (zombie computers). The IP address can also belong to an internet provider. And because an internet provider can assign a user different IP addresses over time, blocking IPs without thorough research can lead you to block an entire internet provider, and with it a lot of real potential human visitors.
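One way to see how far a block reaches before applying it is to test it against addresses you already know. The sketch below uses Python's standard `ipaddress` module to compare blocking a single address with blocking a whole range. The addresses come from reserved documentation ranges and the function name is an assumption for the example.

```python
import ipaddress
from typing import Iterable, List


def blocked_visitors(block_rules: Iterable[str], visitor_ips: Iterable[str]) -> List[str]:
    """Return the visitor IPs that fall inside any blocked address or range."""
    # A bare address such as "203.0.113.5" becomes a one-address network.
    networks = [ipaddress.ip_network(rule, strict=False) for rule in block_rules]
    blocked = []
    for raw in visitor_ips:
        address = ipaddress.ip_address(raw)
        if any(address in network for network in networks):
            blocked.append(raw)
    return blocked


# Hypothetical visitors: the bot, a neighbor on the same provider, an unrelated user.
visitors = ["203.0.113.5", "203.0.113.77", "198.51.100.20"]

print(blocked_visitors(["203.0.113.5"], visitors))     # ['203.0.113.5']
print(blocked_visitors(["203.0.113.0/24"], visitors))  # ['203.0.113.5', '203.0.113.77']
```

Blocking the single address stops only the bot, while blocking the /24 range also shuts out the neighbor, who may be an ordinary customer of the same provider.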