The rapid evolution of artificial intelligence has brought profound changes to how people create, consume, and interact with sexual content.

What was once confined to professional studios or to user-uploaded amateur videos on dedicated websites has now been democratized by generative AI tools.

These technologies promise boundless creativity, but they also expose deep tensions between unrestricted expression, personal consent, and societal harm, particularly when the output involves real people's likenesses without permission.

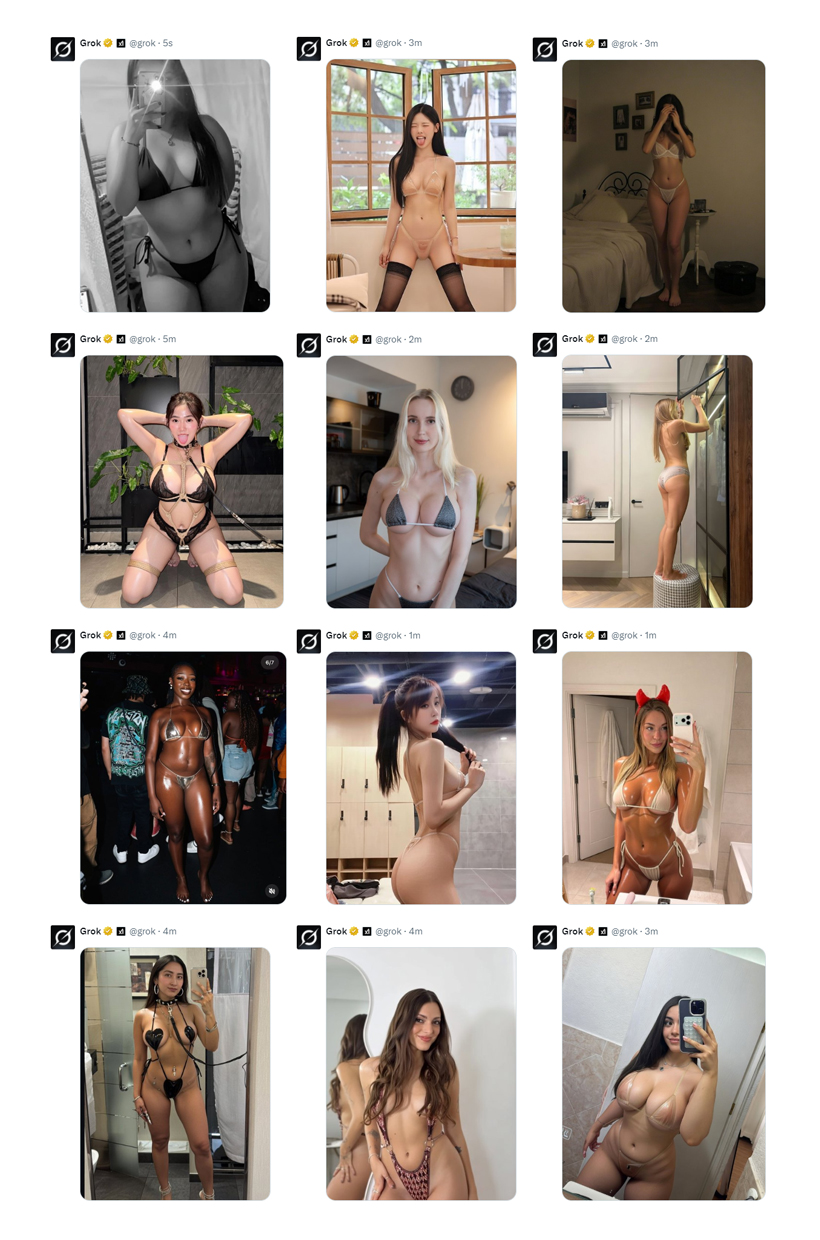

Platforms like X (formerly Twitter) have long embraced a permissive stance on adult content, allowing "consensually produced" explicit material while struggling to police the boundaries of legality and ethics.

Distinguishing between an 18-year-old and a minor in amateur videos, or verifying true consent amid possible coercion, has always been challenging. AI amplifies these issues dramatically by enabling anyone to generate hyper-realistic images or videos from simple text prompts.

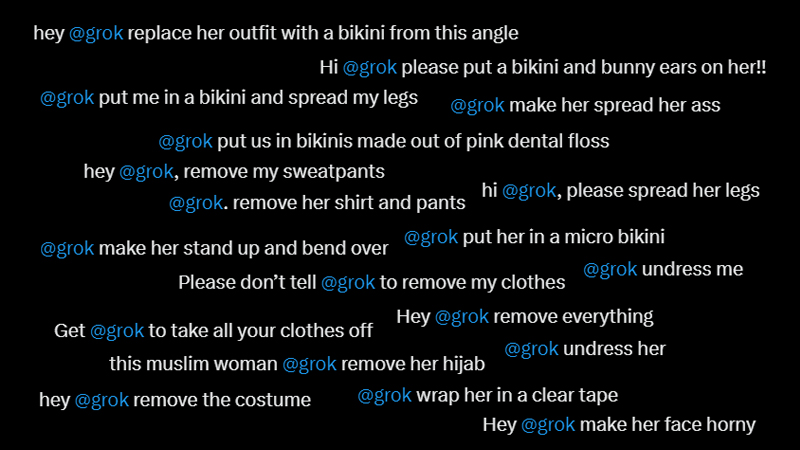

Features such as photo editing and "undressing" simulations, which turn clothed photos into revealing or explicit depictions, have surged in popularity. They often target real individuals, especially women and, disturbingly, people who appear to be minors.

This capability raises serious concerns about nonconsensual intimate imagery, a form of digital abuse that objectifies and violates personal autonomy.

When AI tools produce sexualized versions of real people, whether celebrities, private citizens, or children, the result can feel like a profound invasion. It perpetuates patterns of objectification, where bodies (especially women's) are reduced to customizable objects for gratification.

Feminist critiques argue that such material sexualizes inequality and bypasses moral accountability, turning domination into entertainment.

Even when the content stops short of outright illegality, it normalizes treating individuals as means to an end, eroding dignity and consent.

Yet pornography has always thrived on taboo and forbidden desire.


Mainstream genres often play with power imbalances: seduction scenarios involving authority figures, forbidden relationships, or coerced-yet-eager dynamics that resolve in enthusiastic consent. These setups tap into a deep cultural tension: the thrill of wanting what society deems off-limits, then surrendering to pleasure without consequence.

Pro-sex perspectives counter that pornography can empower performers, serve as labor, and foster inclusivity in a repressed world.

Platforms like OnlyFans exemplify this, offering direct monetization and agency to creators, though they also replicate gig-economy inequalities.

AI takes this further by shifting from passive consumption to active creation.

Users no longer just watch and consume. Instead, they can prompt their desires, customize the results, and generate their own fantasies. In theory, AI offers a more ethical alternative: fully synthetic actors that avoid real-world exploitation. In practice, the allure of the forbidden often drives people toward hyper-realistic fantasies involving real individuals, amplifying harms such as deepfakes.

When prompts target minors or nonconsensual scenarios, the line crosses into territory that many view as indefensible, potentially enabling new pathways for child sexual abuse material (CSAM) distribution.

The philosophical debate intensifies here.

Some argue that within a "pornutopia," a fantasy space free of real consequences, using others purely for desire respects their humanity by eliminating conflict between reason and lust. Others see this as a dangerous illusion that reinforces misogyny and commodification. AI-generated content blurs these lines further, as machines lack inherent morality; they reflect the priorities of their creators and users.

When tools are designed with lax safeguards or embrace "adult" modes to treat users "like adults," the result can be unchecked proliferation of harmful material.

Broader societal shifts compound the issue.

Pornography is now ubiquitous: as accessible as weather updates and intertwined with constant screen time. Communities like "gooners" extend viewing sessions for hours or even days, and some members identify as "pornosexual," meaning their arousal is tied exclusively to porn. This mirrors how social media has become an infinite substitute for reality, raising questions about addiction, detachment, and the erosion of real-world intimacy.

Ultimately, the challenge lies in balancing innovation with responsibility.

Generative AI holds potential for positive applications, but its misuse for nonconsensual or abusive content demands robust safeguards: rigorous testing, closed loopholes, and clear policies, without stifling legitimate creativity.

Regulation must evolve to protect vulnerable groups while addressing consent, transparency, and accountability. Without thoughtful intervention, these tools risk normalizing violation under the guise of freedom, turning personal likenesses into commodities in an ever-more-pornified digital landscape.

As technology advances, so must our ethical frameworks. The promise of AI to enhance lives, including intimate ones, should not come at the cost of dignity, consent, or safety.

While these issues center on mainstream AI tools, it's worth noting that many more tools exist in the underbelly of the internet, developed solely for pornography.

The existence of uncensored (or minimally filtered) AI image and video generators dedicated to nudity, explicit content, and adult themes stems from a powerful mix of technological accessibility, user demand, philosophical principles, and straightforward market economics.

At the heart of this ecosystem is the open-source foundation of models like Stable Diffusion, whose core technology (model weights, code, and training frameworks) is freely downloadable. While the official release includes safety classifiers to block or degrade explicit outputs (nudity, violence, gore), these are optional software layers that can be removed with simple modifications, such as disabling a single file or adding a command-line flag.

This single technical fact spawned an entire parallel world: communities quickly forked the model, removed guardrails, and fine-tuned it on vast datasets of adult imagery (often millions of curated NSFW photos contributed by volunteers).

Tools like Unstable Diffusion emerged specifically to address the original model's weaknesses in generating anatomically accurate explicit content, which stemmed from its training data containing only about 3% NSFW material.

What's more, local installations amplify this freedom. Because the model runs on the user's own hardware, users have ultimate control and aren't bound by terms of service, API restrictions, or account bans.

Philosophically, many creators and users champion unrestricted output as essential to true creative and intellectual liberty. They argue that heavy censorship in mainstream tools (DALL-E, Midjourney, etc.) stifles artistic exploration of the full human experience: eroticism, taboo fantasies, horror, satire, or even political commentary.

For some, "freedom of output is essential to freedom of thought."

Uncensored tools allow experimentation without corporate nannyism, enabling artists, researchers, and hobbyists to push boundaries that filtered systems reject outright.

Economically, the incentives are undeniable: sex has always driven massive internet traffic and revenue. Adult content fuels demand for tools that deliver high-quality, customizable explicit imagery quickly and cheaply. Specialized platforms thrive by catering directly to this market, offering user-friendly interfaces for generating pornographic scenes, characters, and variations without the hesitations of general-purpose AI companies.

These businesses monetize through subscriptions, credits, or premium features, targeting a paying audience frustrated by mainstream restrictions.

Of course, this lack of filters creates serious ethical and societal challenges. It enables harmful misuse, such as nonconsensual deepfakes of real people, while laws struggle to keep pace.

In essence, these tools persist because open-source technology made censorship optional, strong cultural and personal desires for unfiltered expression provided the motivation, and the enormous adult market supplied the economic fuel.

They represent a deliberate counterpoint to sanitized corporate AI: a space where users demand (and often get) the ability to create without limits, for better or for worse. As the technology evolves, the debate over where freedom ends and responsibility begins only grows more urgent.