Google is the largest search engine on the web. Its quest is to crawl and index the whole World Wide Web, and to rank the pages it finds accordingly.

The web is entirely online, and the interconnected world relies on numerous protocols to stay connected. Because of that, the many parties on the web need a way to understand each other's condition.

This is where HTTP status codes come in, along with network and DNS information.

First off, HTTP response status codes are issued by a server in response to a client's request. The status codes are separated into five classes or categories: the first digit of the status code defines the class of response, while the last two digits play no classifying role.
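As a minimal sketch of that first-digit rule, the Python snippet below maps a status code to its class. The function name `status_class` and the dictionary are illustrative, and the class names are the conventional ones for the five HTTP classes.

```python
# Conventional names for the five HTTP status code classes,
# keyed by the first digit of the code.
STATUS_CLASSES = {
    1: "informational",
    2: "success",
    3: "redirection",
    4: "client error",
    5: "server error",
}


def status_class(code: int) -> str:
    """Return the class of an HTTP status code based on its first digit."""
    if not 100 <= code <= 599:
        raise ValueError(f"not a valid HTTP status code: {code}")
    # Integer division by 100 keeps only the first digit;
    # the last two digits are ignored for classification.
    return STATUS_CLASSES[code // 100]
```

For example, `status_class(404)` and `status_class(429)` both fall into the client error class, even though Google handles the two very differently.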

Google deals with these status codes, as well as network and DNS errors, and the company said that some of them can hurt SEO.

In a post, Google said that:

According to the search giant, every HTTP status code has a different meaning, but often the outcome of the request is the same.

"For example, there are multiple status codes that signal redirection, but their outcome is the same," said Google.

Google explained that Search Console generates error messages for status codes in the 4xx–5xx range, and also for 3xx codes when a redirection fails. Googlebot keeps following redirects, but only for up to 10 hops before giving up.

What webmasters and site owners aim to maintain is a 2xx status code, which, according to Google, is the response that allows it to index the content.

In more detail:

2xx (success) is when Google considers the content for indexing. If the content suggests an error, for example an empty page or an error message, Search Console will show a soft 404 error.

- 200 (success) and 201 (created): Googlebot passes on the content to the indexing pipeline. The indexing systems may index the content, but that's not guaranteed.

- 202 (accepted): Googlebot waits for the content for a limited time, then passes on whatever it received to the indexing pipeline. The timeout is user agent dependent, for example Googlebot Smartphone may have a different timeout than Googlebot Image.

- 204 (no content): Googlebot signals the indexing pipeline that it received no content. Search Console may show a soft 404 error in the site's Index Coverage report.

3xx (redirects) means a redirect is happening. Googlebot follows up to 10 redirect hops. If it still hasn't received content after that many hops, it gives up and Search Console reports the failed redirection.

- 301 (moved permanently) and 308 (moved permanently): Googlebot follows the redirect, and the indexing pipeline uses the redirect as a strong signal that the redirect target should be canonical.

- 302 (found), 307 (temporary redirect) and 303 (see other): Googlebot follows the redirect, and the indexing pipeline uses the redirect as a weak signal that the redirect target should be canonical.

- 304 (not modified): Googlebot signals the indexing pipeline that the content is the same as last time it was crawled. The indexing pipeline may recalculate signals for the URL, but otherwise the status code has no effect on indexing.
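The redirect handling described above can be sketched in Python. This is only an illustration under stated assumptions, not Googlebot's actual implementation: `responses` is a hypothetical dictionary that stands in for real HTTP fetches, and `follow_redirects` is an invented name. The 10-hop limit and the strong and weak signals come from the list above.

```python
MAX_HOPS = 10  # hop limit stated in the article

# Canonical signal strength per redirect status code, as listed above.
# 304 is not a redirect to a new URL, so it is left out.
CANONICAL_SIGNAL = {
    301: "strong",
    308: "strong",
    302: "weak",
    303: "weak",
    307: "weak",
}


def follow_redirects(start_url, responses, max_hops=MAX_HOPS):
    """Follow a redirect chain through `responses`.

    `responses` is a hypothetical dict mapping url -> (status, target),
    where target is None for non-redirect responses.
    Returns (final_url, final_status, signals) or raises RuntimeError
    once more than `max_hops` redirects would be needed.
    """
    url = start_url
    signals = []
    hops = 0
    while True:
        status, target = responses[url]
        if status not in CANONICAL_SIGNAL:
            # Not a redirect: this is the content that gets passed on.
            return url, status, signals
        if hops == max_hops:
            raise RuntimeError(f"gave up after {max_hops} redirect hops")
        signals.append(CANONICAL_SIGNAL[status])
        url = target
        hops += 1
```

A chain such as `/a` (301) to `/b` (302) to `/c` (200) resolves to `/c` with one strong and one weak signal, while a chain that needs an eleventh hop raises an error instead of returning content.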

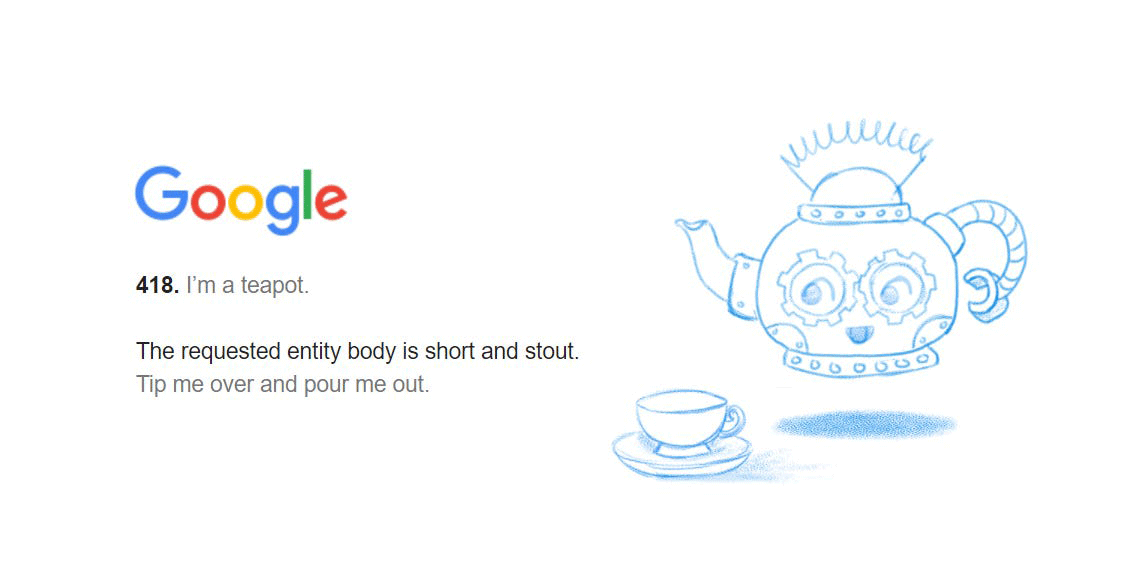

4xx (client errors) means Google's indexing pipeline doesn't consider the URL for indexing. URLs that are already indexed but later return a 4xx status code can be removed from the index.

- 400 (bad request), 401 (unauthorized), 403 (forbidden), 404 (not found), 410 (gone) and 411 (length required): All 4xx errors, except 429, are treated the same.

Googlebot signals the indexing pipeline that the content doesn't exist. The indexing pipeline removes the URL from the index if it was previously indexed. Newly encountered 404 pages aren't processed. The crawling frequency gradually decreases. Don't use 401 and 403 status codes for limiting the crawl rate. The 4xx status codes, except 429, have no effect on crawl rate.

- 429 (too many requests): Googlebot treats the 429 status code as a signal that the server is overloaded, and it's considered a server error.

5xx (server errors), like 429, prompt Google's crawlers to temporarily slow down crawling. Already indexed URLs are preserved in the index at first, but are eventually dropped if the errors persist.

- 500 (internal server error), 502 (bad gateway) and 503 (service unavailable): Googlebot decreases the crawl rate for the site. The decrease in crawl rate is proportionate to the number of individual URLs that are returning a server error. Google's indexing pipeline removes from the index URLs that persistently return a server error.
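To summarize how the 2xx, 4xx and 5xx classes differ, here is a rough Python sketch that maps a status code to the two effects discussed above: whether the crawl rate is reduced, and what happens in the index. The `Outcome` class, the `outcome_for` function and the effect labels are assumptions made for illustration, and redirects (3xx) are deliberately left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    reduces_crawl_rate: bool
    index_effect: str  # illustrative label, not an official Google term


def outcome_for(status: int) -> Outcome:
    """Summarize the effect of a 2xx, 4xx or 5xx status code."""
    if 200 <= status <= 299:
        # Content is passed on for indexing (indexing is not guaranteed).
        return Outcome(False, "eligible")
    if status == 429 or 500 <= status <= 599:
        # 429 is treated as a server error: crawling slows down, and
        # URLs that persistently fail are eventually dropped.
        return Outcome(True, "dropped if persistent")
    if 400 <= status <= 499:
        # Other 4xx codes don't affect crawl rate; the URL is removed
        # from the index if it was previously indexed.
        return Outcome(False, "removed")
    raise ValueError(f"status {status} is not covered by this sketch")
```

Note that `outcome_for(403)` reports no crawl rate reduction, which matches the advice not to use 401 or 403 to limit the crawl rate.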

It should be noted that internet-capable software can recognize more than the above codes. There are about one hundred HTTP status codes. The list above, however, covers the status codes that Googlebot most often encounters on the web.

As for network and DNS errors, Google said that they have a quick and negative effect on a URL's presence in Google Search.

"Googlebot treats network timeouts, connection reset, and DNS errors similarly to 5xx server errors."

This means that whenever Googlebot encounters network or DNS errors, it immediately slows down its activity, simply because it considers the errors a sign that the server may not be able to handle the serving load.

Already indexed URLs that are unreachable will be removed from Google's index within days.
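From a crawler's point of view, "treated similarly to 5xx" could be sketched as follows in Python. The mapping of the three failure kinds to standard Python exception types, and the helper name `treated_like_5xx`, are assumptions for illustration only.

```python
import socket

# Standard Python exceptions standing in for the failures Google names.
NETWORK_ERRORS = (
    TimeoutError,          # network timeout
    socket.timeout,        # same failure on older Python versions
    ConnectionResetError,  # connection reset
    socket.gaierror,       # DNS resolution failure
)


def treated_like_5xx(exc: BaseException) -> bool:
    """Return True if the failure should be handled like a server error."""
    return isinstance(exc, NETWORK_ERRORS)
```

A crawler built this way would slow down on a DNS failure exactly as it would on a 503, while unrelated errors such as a parsing problem would not trigger the slowdown.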
