Child exploitation and child abuse are illegal and, in many ways, deeply disturbing.

While humans can be taught what is appropriate and what isn't, algorithms have a harder time telling the difference. This is the problem the search engine Bing is facing.

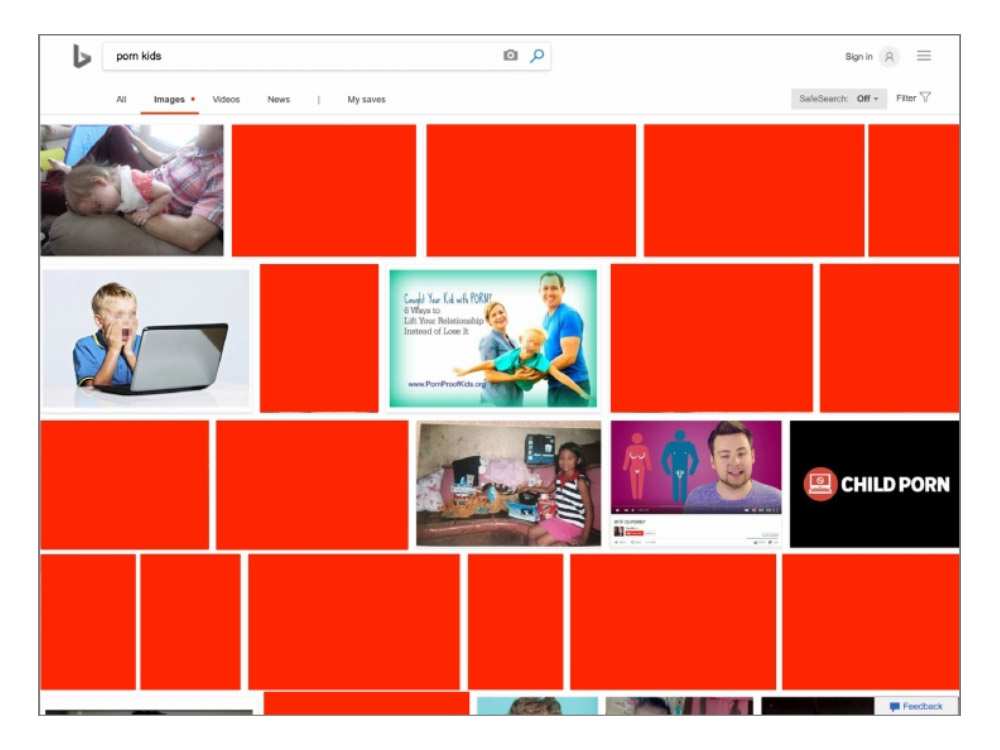

A report by online safety firm AntiToxin Technologies found that illegal images of child abuse are easy to find on Bing. Worse, in some cases the search engine may even suggest further child pornography to pedophiles.

"Bing, not only makes child pornography available in search results, but also assists with suggested keyword phrases and associated images," the report says.

AntiToxin conducted this research between December 30 and January 7 with Bing SafeSearch turned off.

During that time, the researchers found that when people searched for certain terms related to child sexual abuse, the search engine returned images showing this form of abuse.

As for the search engine's suggestions, the related images can be shown both on the results page and in the automatic suggestions in the search bar.

Responding to this issue, Microsoft's chief vice president of Bing and AI products, Jordi Ribas, said that the publication of these illegal images is unacceptable, and that the company has now fixed the problem:

"We acted immediately to remove them, but we also want to prevent any other similar violations in the future. We’re focused on learning from this so we can make any other improvements needed," he said.

While Microsoft claims that the issue is no longer present, AntiToxin's researchers found that some queries from the report are still accessible.

For this reason, Bing said that it's working on adding more terms, such as "child sexual abuse", into its content flagging categories.

When asked how such an incident could have occurred, a Microsoft spokesperson said that:

Previously, it was found that bad actors were spreading abusive images through Dropbox links using certain Instagram hashtags.

And before that, AntiToxin also found that the Google and Facebook ad networks had a child pornography problem, after evidence showed that ads for major brands were placed in "child abuse discovery apps."

While Bing did not intend for this to happen, the incident shows why tech companies should never be dependent on algorithms and image recognition technology: they are far from perfect.

Companies can leverage AI to do the dirty work and the heavy lifting, but when it comes to morality and ethics, they should understand that computers don't have any.

This is an example of what can happen when tech companies refuse to adequately reinvest the profits they earn into ensuring the security of their own customers and society at large.

As for Bing, this may be a PR nightmare. After all, Microsoft has been working on numerous efforts to tackle the spread of images depicting child sex abuse on the web.

AntiToxin CEO Zohar Levkovitz said that tech platforms should double down on their efforts to prevent such content from being distributed on their platforms.