Traditionally, many translation tools translate from one language to another by first translating the text into English. This makes English an intermediary language (IL).

English is often used as an IL because, as one of the most widely used languages, it has the most existing translations paired with other languages. Using English corpora as the IL also lets AI learn from the millions, if not billions, of translated documents that are already available.

In other words, using English as the IL gives translation software far more training data to work with.

Translation for most languages would initially rely on English as the IL. Over time, however, the data available for each language could grow to the point where English is no longer needed as an intermediary.

Facebook has now shown that this is possible.

The social giant has open-sourced an AI model that can translate between any pair of 100 languages without first translating them to English as an intermediary step.
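To put "any pair of 100 languages" in perspective, the short sketch below counts translation directions under both approaches. It assumes each ordered language pair (source, target) counts as one direction, and that an English-pivot system only needs to learn "X to English" and "English to X" for every other language X. The function names are hypothetical illustrations, not taken from Facebook's code.

```python
def direct_directions(num_languages: int) -> int:
    """Ordered (source, target) pairs with source != target."""
    return num_languages * (num_languages - 1)


def english_pivot_directions(num_languages: int) -> int:
    """Directions an English-pivot system must learn:
    X -> English and English -> X for every non-English language X."""
    non_english = num_languages - 1
    return 2 * non_english


def pivot_path(source: str, target: str, pivot: str = "en") -> list[str]:
    """Languages visited when translating through the pivot.
    No extra hop is needed if either side already is the pivot."""
    if source == pivot or target == pivot:
        return [source, target]
    return [source, pivot, target]


if __name__ == "__main__":
    n = 100
    print(direct_directions(n))         # 9900
    print(english_pivot_directions(n))  # 198
    print(pivot_path("zh", "fr"))       # ['zh', 'en', 'fr']
```

The pivot approach covers every pair with far fewer learned directions, but a Chinese-to-French request takes two hops through English, and any meaning lost in the first hop carries into the second.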

In a blog post, Facebook research assistant Angela Fan said:

“A single model that supports all languages, dialects, and modalities will help us better serve more people, keep translations up to date, and create new experiences for billions of people equally. This work brings us closer to this goal.”

Given the very technical name 'M2M-100', the model has been released only as a research project.

The model, which has been open-sourced on GitHub, was trained on a dataset of 7.5 billion sentence pairs covering 2,200 translation directions across 100 languages.

Facebook said that all of the data it used comes from open, publicly available sources.

To manage the scale of the data mining, the researchers focused on the most commonly requested translation directions and skipped rare ones, such as Sinhala-Javanese.

The team grouped the languages into 14 groups based on linguistic, geographic, and cultural similarities. They chose this approach because people in countries whose languages share these characteristics are more likely to benefit from translations between them.

For languages that lacked high-quality translation data, the researchers used a method called "back-translation" to generate synthetic translations that supplement the mined data.
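Back-translation can be sketched as follows, assuming we want more training data for a source-to-target direction and already have a reverse (target-to-source) model plus plain monolingual text in the target language. The genuine target sentences are kept, and the reverse model invents the matching source side. `back_translate`, `augment`, and the toy word-lookup "model" are hypothetical illustrations, not Facebook's actual pipeline.

```python
from typing import Callable


def back_translate(
    target_sentences: list[str],
    reverse_translate: Callable[[str], str],
) -> list[tuple[str, str]]:
    """Create synthetic (source, target) training pairs.

    The target side is genuine monolingual text; the source side is
    machine-generated by a reverse (target -> source) model.
    """
    return [(reverse_translate(sentence), sentence) for sentence in target_sentences]


def augment(
    mined_pairs: list[tuple[str, str]],
    synthetic_pairs: list[tuple[str, str]],
) -> list[tuple[str, str]]:
    """Supplement the mined parallel data with the synthetic pairs."""
    return mined_pairs + synthetic_pairs


if __name__ == "__main__":
    # Hypothetical stand-in for a real French -> English model:
    # a word-by-word dictionary lookup that leaves unknown words as-is.
    toy_lexicon = {"bonjour": "hello", "merci": "thank you"}

    def toy_reverse(sentence: str) -> str:
        return " ".join(toy_lexicon.get(word, word) for word in sentence.split())

    # Goal: more English -> French training data.
    mined = [("good night", "bonne nuit")]
    synthetic = back_translate(["bonjour", "merci"], toy_reverse)
    print(augment(mined, synthetic))
    # [('good night', 'bonne nuit'), ('hello', 'bonjour'), ('thank you', 'merci')]
```

Keeping the target side genuine is the point of the design: the model being trained always learns to produce real, fluent target-language text, even when its input is synthetic.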

By combining these techniques, Facebook says it created "the first multilingual machine translation (MMT) model that can translate between any pair of 100 languages without relying on English data."

“When translating, say, Chinese to French, most English-centric multilingual models train on Chinese to English and English to French, because English training data is the most widely available,” said Fan.

“Our model directly trains on Chinese to French data to better preserve meaning.”

According to Facebook, M2M-100 outperformed English-centric systems by 10 points on BLEU, a widely used metric for evaluating machine translation.
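For reference, the standard corpus-level BLEU score combines modified n-gram precisions $p_n$ (typically $n = 1$ to $N = 4$, with uniform weights $w_n = 1/N$) with a brevity penalty $\mathrm{BP}$ that punishes output shorter than the reference, where $c$ is the total candidate length and $r$ the reference length. This is the textbook definition; the exact BLEU variant and tokenization Facebook used for its comparison may differ.

```latex
\mathrm{BP} =
\begin{cases}
1 & \text{if } c > r \\
e^{\,1 - r/c} & \text{if } c \le r
\end{cases}
\qquad
\mathrm{BLEU} = \mathrm{BP} \cdot \exp\!\left( \sum_{n=1}^{N} w_n \log p_n \right)
```

BLEU is usually reported on a 0 to 100 scale, which is the scale on which a "10-point" improvement is expressed.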

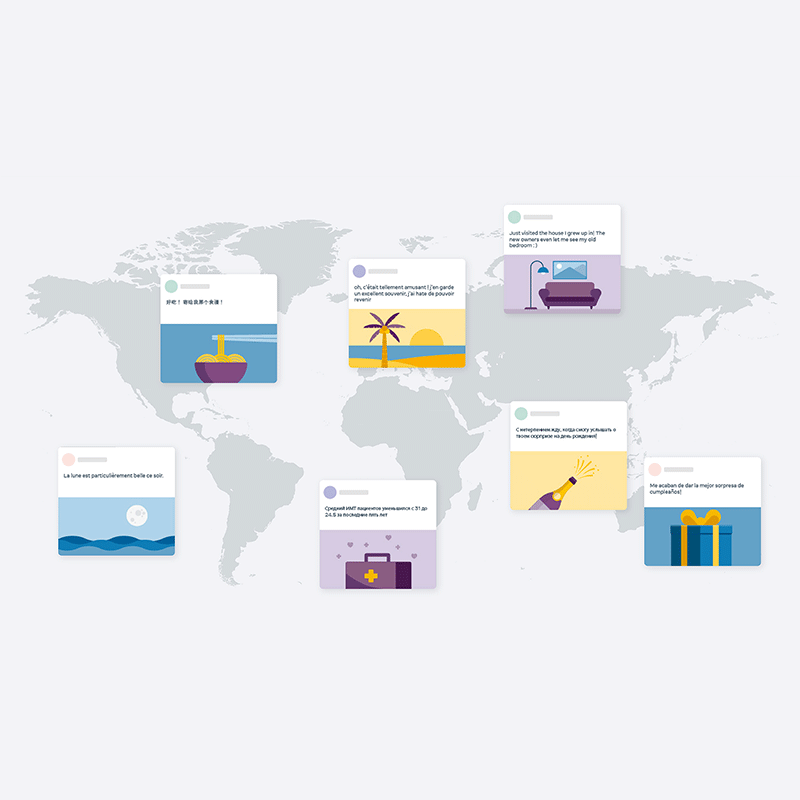

The model has not yet been incorporated into any products, but the tests suggest it could eventually be used to translate posts in Facebook's News Feed, where two-thirds of the content is written in languages other than English.